For many years, Tesla CEO Elon Musk has promised that his EV maker’s so-called “Full Self-Driving” software would one day make cars safer by taking flawed human drivers out of the equation.

But as Rolling Stone’s Miles Klee found out firsthand, the company’s troubled FSD software, an optional $15,000 add-on, still inspires far less confidence than having a person behind the wheel.

Klee was driving a Model 3 owned by one of Tesla’s most outspoken critics, Dan O’Dowd, a billionaire software magnate who went as far as to run for office to publicly oppose Musk’s “‘self-driving’ experiment.” O’Dowd established the Dawn Project, which is dedicated to banning “unsafe software” from “safety-critical” infrastructure, including transportation.

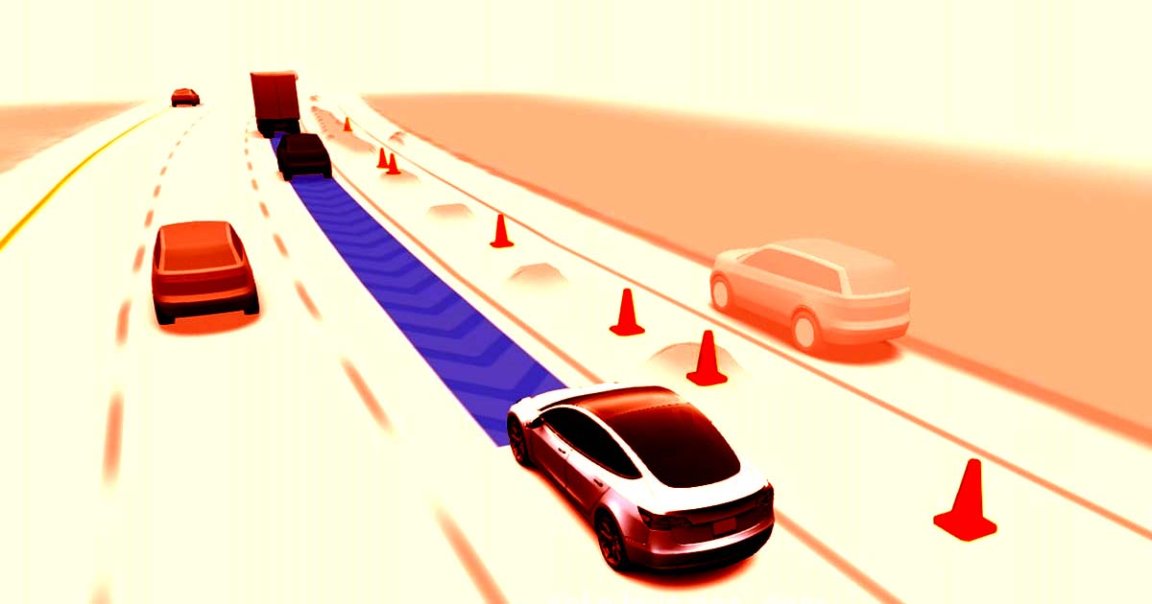

Klee’s chauffeur Arthur Maltin, who works for a PR firm that represents the Dawn Project, pointed out some striking inaccuracies and blind spots in the way Tesla’s FSD system “sees” the world around it, which it represents on the main dashboard screen mounted next to the steering wheel.

“Very impressive when you first look at it,” he told Klee, “but then if you actually start to pay attention to it, and look at what’s actually on in the world, like here, these two women, there’s one person represented. If you watch, the cars will sort of appear and disappear. And parked cars move around.”

According to Maltin, that’s due to the system’s hardware limitations. Instead of relying on the conventional LiDAR systems used by Tesla’s competitors, Musk has doubled down on exclusively using cameras for the vehicles’ driver assistance software. These video feeds are then analyzed by a neural network to translate them into an understanding of physical space around the vehicle.

Even Tesla warns that otherwise common “poor weather conditions” including “direct Sun” can hamper the software.

“What’s really bad for it is sunset or sunrise,” Maltin told Rolling Stone. “When it’s got the sun in its eyes, it will sometimes just put a big red warning on the screen. ‘Take over, take over, help me!’”

Despite its misleading name, Tesla’s “Full Self-Driving” software still requires drivers to be ready to take over at any point. That’s even though the company’s mercurial CEO has spent a full decade promising that self-driving cars will arrive by “next year.”

The software has also come under intense scrutiny by regulators, with the National Highway Traffic Safety Administration investigating hundreds of injuries and dozens of deaths linked to the software. Earlier this year, the regulator concluded that drivers using FSD were lulled into a false sense of security and “were not sufficiently engaged in the driving task.”

During Klee’s test ride, Maltin had to intervene several times, with the vehicle almost crashing into a recycling bin and a plastic bollard. It even ran a stop sign on a highway on-ramp.

In an earlier test staged by the Dawn Project, the vehicle even ignored a stop sign extended from a yellow school bus and some red flashing lights, plowing through a child-sized mannequin right afterward.

In short, is Tesla’s cameras-only approach really safe for public roads? Given the damning evidence — and plenty of data suggesting drivers are indeed lulled into a false sense of security — the company still has a lot to prove when it comes to Musk’s vision of a “self-driving” future.
