Beware, Recruiters!

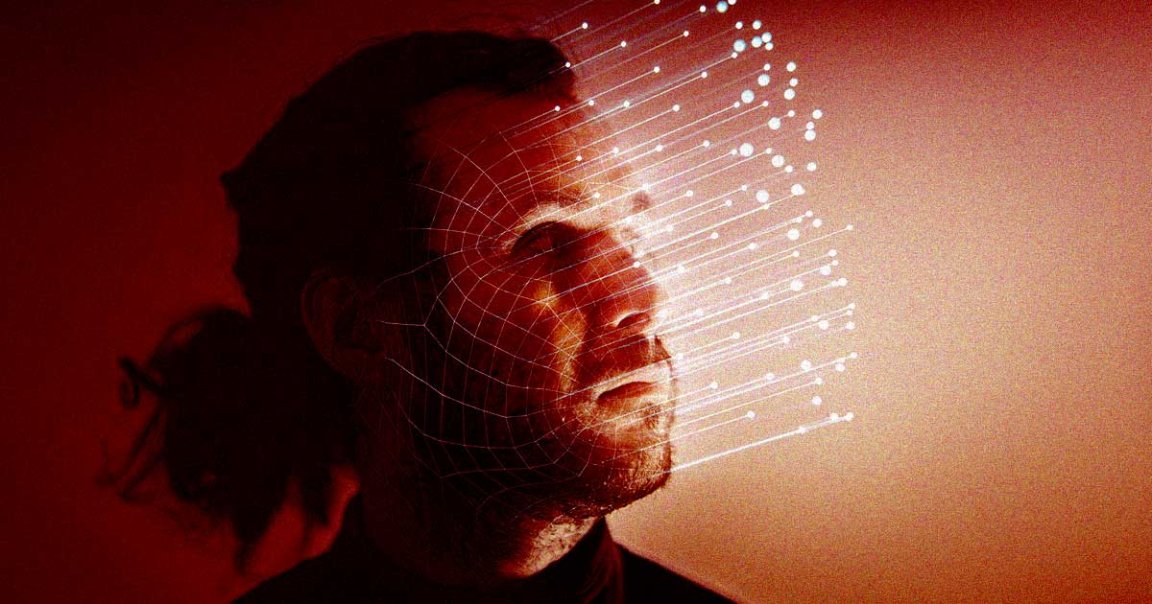

People aren’t just using impressive deepfakes to troll Robert Pattinson, create endearing videos of a lookalike Margot Robbie, or sing onstage as famed pessimist Simon Cowell. Yesterday, the FBI issued an ominous warning, spotted by Gizmodo, that deepfakes, coupled with stolen personal information, are increasingly being used to apply for a variety of work-from-home jobs.

Needless to say, this is some next-level identity theft. Thus far, the FBI says that reported incidents have mostly been in IT, computer science, and data-related fields. In some ominous cases, the positions applied for came with direct access to customers’ personally identifiable information (PII), financial information, and corporate IT databases, which doesn’t exactly feel coincidental.

Deepfake It

Of course, there are reasons beyond plain ol’ data theft that might lead someone to try to land a job by way of uber-convincing AI technology. As TechCrunch points out, it could be a way for a non-US citizen to get paid in dollars or, more nefariously, a means for a rival country to glean useful intelligence.

Luckily, if you’re in the process of interviewing potential work-from-home employees, there are some tells to watch out for. According to the FBI, the voice spoofing used by the imposters is usually a little off: the audio sometimes doesn’t sync quite right with the interviewee’s lips, and at times, inadvertent sneezes or coughs aren’t reflected in the video.

While deepfake tech has made some serious advancements in recent years, tricking someone during a live interview is still an enormously tall order. Even so, given how far deepfakes have come, and where they might be able to go, employers in affected fields should be wary.

READ MORE: FBI Says People Are Using Deepfakes to Apply to Remote Jobs [Gizmodo]
