This article is part of a series about season four of Black Mirror, in which Futurism considers the technology pivotal to each episode and evaluates how close we are to having it. Please note that this article contains mild spoilers. Season four of Black Mirror is now available on Netflix.

My Avatar, Myself

The crew aboard the U.S.S. Callister, a spaceship not unlike Star Trek’s Enterprise, detects the presence of an intergalactic nemesis. They band together and, with the winning combination of technological sophistication and the captain’s bold tactics, defeat him. The crew celebrates by cheering Captain Robert Daly, who makes out with the three female members of the crew as a reward.

This is, as you might have guessed, a fantasy of Daly’s making. In real life, he’s a sniveling, spineless software developer. The fantasy of the spaceship, we later learn, is in his immersive video game, in a version he built just for himself. The crew is populated with people from Daly’s job, synthesized into the game with physical forms and personalities that mirror their real-life personas, via their sequenced DNA (Daly swiped a bit of their genetic code from the workplace). For the crew, the fantasy is a nightmare. Daly is cruel and abusive, and they are sentient in the game, able to feel every bit of pain he inflicts.

The show presents a vision of the future in which it’s possible to recreate an individual’s personality and transplant it into a digital avatar using nothing more than a DNA sample. This isn’t yet possible. Technology, however, might soon change that. Before that happens, we would be wise to answer some ethical questions about how to properly treat these digital renderings of ourselves.

The Next Generation of Video Games

“We are able to reinvent ourselves through avatars that we create in virtual worlds, in massively multiplayer online role-playing games, and in profiles that we use on Facebook and elsewhere,” David Gunkel, a professor at Northern Illinois University, told Futurism. “Those avatars are a projection of ourselves into the virtual world, albeit a rather clumsy one, and one that is nowhere near what’s envisaged in the science fiction scenario.”

There are two aspects of these futuristic digital avatars that go well beyond what we currently have and make them seem like real people: their behavior and their appearance.

The behavior aspect can be surprisingly simple. Like anything that involves machine learning, avatars can be trained within limited parameters, and the software can iterate based on that.

More than a decade ago, Microsoft designed a learning-based system used in a number of driving-based video games. It’s no fun to play against opponents that seem superhuman, so the engineers created Drivatar, a system designed to gather data on how real humans play the game to then imbue its artificial opponents with human-like (fallible) abilities. Drivatar would capture aspects of a human player’s driving style, like the way they would take a corner, or at which point in a turn they would brake. Then the system would replay those moves as the human driver would, effectively producing a snapshot of how that individual might compete.

“It gave us quite a nice milieu for developing AI, because it’s relatively constrained – you’re driving a car round a track, it’s not open world human behavior – yet you do have a certain richness of content in the way a car drives, particularly when cars interact,” Mike Tipping, who played a key role in developing the technology, told Futurism. The ultimate goal of a system like Drivatar is to let someone play a game against a computer opponent and recognize the behaviors of a friend or rival.
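To make the capture-and-replay idea concrete, here is a minimal sketch in Python. It is not Microsoft's implementation, whose internals are not described here; it simply assumes that a driving style can be summarized per corner by average braking distance and apex speed, and that a computer opponent can replay that style with a little human-like variation. Every name in it (`CornerSample`, `DriverProfile`, the field names, the "hairpin" corner) is hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class CornerSample:
    """One observed pass through a corner (hypothetical schema)."""
    corner_id: str
    brake_distance_m: float  # how far before the apex the player braked
    apex_speed_kmh: float    # speed at the tightest point of the turn


class DriverProfile:
    """Summarizes how one human player tends to take each corner."""

    def __init__(self):
        self._samples = {}  # corner_id -> list of CornerSample

    def observe(self, sample):
        # Capture step: record what the human actually did.
        self._samples.setdefault(sample.corner_id, []).append(sample)

    def style(self, corner_id):
        # Reduce the observations to (mean braking distance,
        # mean apex speed, spread of braking distance).
        samples = self._samples[corner_id]
        brakes = [s.brake_distance_m for s in samples]
        speeds = [s.apex_speed_kmh for s in samples]
        spread = stdev(brakes) if len(brakes) > 1 else 0.0
        return mean(brakes), mean(speeds), spread

    def replay(self, corner_id, rng):
        # Replay step: drive like the player, with the same kind of
        # lap-to-lap inconsistency the player showed when braking.
        avg_brake, avg_speed, spread = self.style(corner_id)
        return CornerSample(corner_id, rng.gauss(avg_brake, spread), avg_speed)


# Example: three laps of (assumed) telemetry through one corner.
profile = DriverProfile()
for brake, speed in [(42.0, 88.0), (38.0, 92.0), (40.0, 90.0)]:
    profile.observe(CornerSample("hairpin", brake, speed))

avg_brake, avg_speed, spread = profile.style("hairpin")
ghost = profile.replay("hairpin", random.Random(0))
```

The point of the sketch is the design choice the article describes: the opponent is built from a player's recorded habits, including their imperfections, rather than from an ideal racing line. A real system would need far richer features than two numbers per corner.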

The digital avatars we see in U.S.S. Callister are a much more advanced version of the same idea. But deriving our behavior from our genetics is inefficient, if not impossible. Our best shot at making a digital recreation of an individual’s personality would be applying machine learning to a large data set that outlines a person’s thoughts, speech, and behavior.

“At the moment, it is difficult to imagine being able to clone someone’s mind/consciousness from a scientific perspective,” Kevin Warwick, the deputy vice chancellor of research at Coventry University, told Futurism. “But that’s not to say it will be always impossible to do so.”

Human beings are very good at spotting entities that aren’t quite human; many of us are all too familiar with the uneasiness of the Uncanny Valley. And DNA-based digital renderings still leave much to be desired: in one test conducted by the New York Times, zero out of 50 staff members were able to identify a DNA-generated image of another staff member, although other tests fared a bit better.

That being said, digital imaging capabilities are getting better all the time. Researchers recently came out with a tool that changes the weather effects in video footage using AI. Human faces might be more difficult to fake, but an advance like this shows it’s far from impossible to do so.

So digital renderings will look and behave a lot more like the real people on whom they’re based. And it’s increasingly likely that scientists will also figure out how to upload consciousness. Combine the three, and we start to get into some murky ethical territory.

Artificial Individual

The moment a digital clone is made, it diverges from the person on whom it’s based. They become separate entities. “At a specific instance in time they would be identical, but from the moment of cloning they would diverge (to some extent at least) through their different experiences and surroundings,” Warwick said. “By this I mean there will be physical changes in the brains involved due to external influences and life.”

Lawmakers will have to decide whether that digital rendering is considered property, or given personhood. “There’s debate about who owns the avatars that are created in a massively multiplayer online role-playing game,” said Gunkel. “Some providers of the service say the avatar is yours and what you do with it is your choice — you own it, you decide if it lives or dies, etcetera. In other circumstances, the provider of the service has more restrictions over who owns the avatars.”

Decisions like that will shape more nuanced policies, such as “human rights” that prohibit the mistreatment or torture of digital entities, or a right to “die.”

Then there’s the question of whether committing crimes against digital renderings is, in fact, a crime. “The question of whether abusing the virtual version of something, or a robotic version of something, deflects violence to the robot and taking it away from people, or whether it encourages that kind of behavior… it’s a really old question and one we’ve never been able to solve,” Gunkel said.

“[The digital sphere] makes it easier to cross the line,” said Schneider. “It can cause disrespect for humans.” She compares the situation to acting abusively toward a non-conscious android, arguing that normalizing such actions sets a harmful precedent for the way humans are to interact with one another.

Moderating Mods

As soon as we make rules about how to operate digital technology, people figure out how to violate them. We jailbreak smartphones; we use a browser’s incognito mode to illegally stream movies. As technology becomes more powerful, abusing it could have more dire consequences. Misuse of technology in the past has shown it’s very difficult to prevent this kind of activity.

“There is always a way to break the lock, there is always a way around the regulation,” said Gunkel. “As William Gibson said years ago, back in the early days of the internet, the street finds its own use for things.”

It’s safe to assume that technology will be misused. But it’s impossible to predict exactly how it will be misused before it happens.

“No matter what kind of stringent regulations are put in place by governments, or technological or regulatory controls are imposed by the industry, chances are users will hack them and make them do things they were never designed to do,” explained Gunkel.

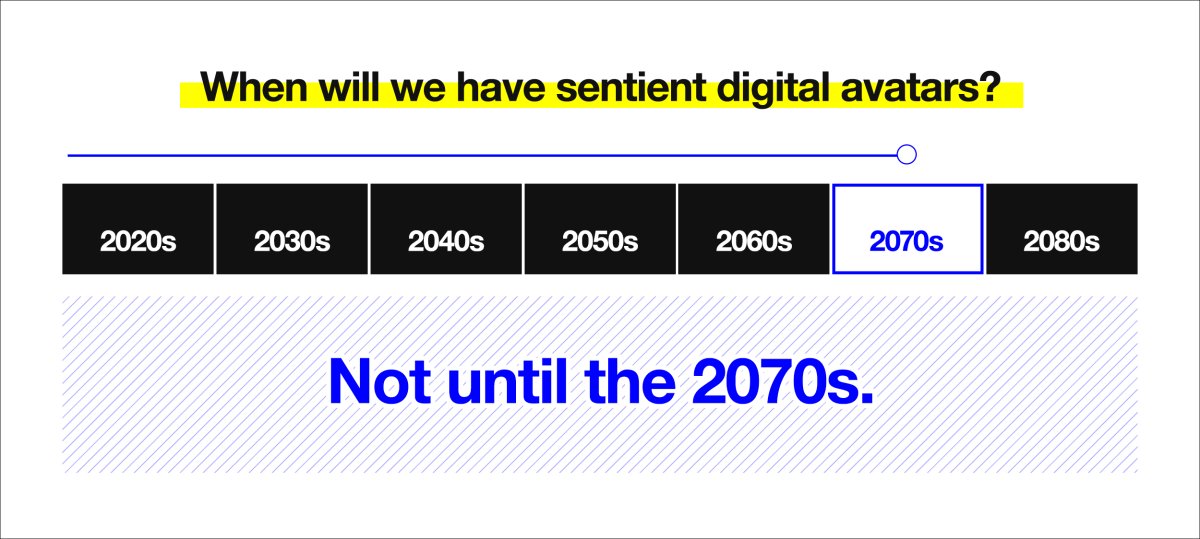

Can AI be classified as a new category of living being? Most would agree that it can’t, at least in its current form. But the field is advancing at such a rapid pace that this could change quickly. It might be decades before we can create an AI that’s conscious in any meaningful way. However, the capacity for humans to mistreat a non-sentient AI might accelerate the need for nuanced ethical debates about how these entities should be classified.

There are persistent fears that, one day, AI might rule over us. For the time being, however, it’s firmly under our control. As we occupy that position of power, we need to think carefully about how we want our relationships with artificial beings to play out.