Salk researchers and collaborators have discovered that the human brain has a higher memory capacity than was originally estimated. This insight into the size of neural connections could have major implications for understanding how the brain is so energy efficient.

“This is a real bombshell in the field of neuroscience,” says Terry Sejnowski, Salk professor and co-senior author of the paper, which was published in eLife. “We discovered the key to unlocking the design principle for how hippocampal neurons function with low energy but high computation power. Our new measurements of the brain’s memory capacity increase conservative estimates by a factor of 10 to at least a petabyte, in the same ballpark as the World Wide Web.”

Sizing Them Up

So how exactly does this all work?

Each of your memories and thoughts is the result of patterns of electrical and chemical activity in the brain. An axon from one neuron connects with the dendrite of a second neuron. The majority of the activity occurs when these branches interact at certain junctions, known as synapses. Neurotransmitters travel across the synapse to tell the receiving neuron how to behave. That neuron can then send the message out to thousands of other neurons.

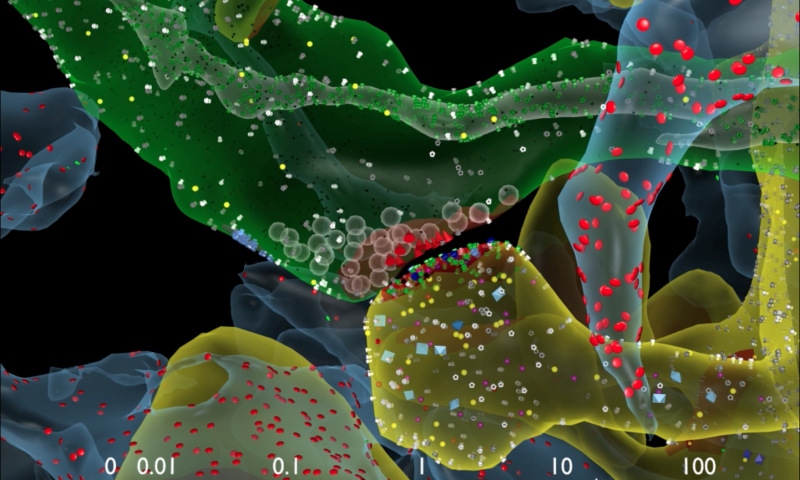

Synapses remain something of a mystery to scientists. To learn more about how they function, the Salk team built a 3D reconstruction of tissue from the rat hippocampus, the memory center of the brain. During the study, they noticed something unusual. Neurons seemed to be sending duplicate messages to each other.

At first, the researchers didn’t think this was a big deal. This kind of duplication occurs about 10 percent of the time in the hippocampus. But Tom Bartol, a Salk staff scientist, had an idea.

If the team could measure the difference between two such near-identical synapses, they might be able to classify synaptic sizes more accurately. Before the study, synapses had been classified only as small, medium, or large.

“We were amazed to find that the difference in the sizes of the pairs of synapses were very small, on average, only about eight percent different in size. No one thought it would be such a small difference. This was a curveball from nature,” says Bartol.

Because the memory capacity of neurons depends on synapse size, this eight percent difference turned out to be a key number the team could then plug into their algorithmic models of the brain to measure how much information could potentially be stored in synaptic connections. Using this figure, the team determined there could be about 26 categories of synapse size. That is about 10 times more sizes than previously thought.

In computer terms, 26 sizes of synapses correspond to about 4.7 “bits” of information. Previously, it was thought that the brain was capable of just one to two bits for short and long memory storage in the hippocampus.
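The conversion from size categories to bits is a base-2 logarithm. The short sketch below is only an illustration, not the team's model: it assumes every size category is equally likely, and the function name `bits_for_levels` is made up for this example.

```python
import math

def bits_for_levels(levels: int) -> float:
    """Bits needed to single out one of `levels` equally likely states (an assumption)."""
    return math.log2(levels)

# 26 distinguishable synapse sizes, as reported in the study
print(round(bits_for_levels(26), 1))  # 4.7

# The older small / medium / large scheme, for comparison
print(round(bits_for_levels(3), 1))   # 1.6
```

Under that assumption, three size classes carry about 1.6 bits, which fits the earlier estimate of one to two bits per synapse.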

Unreliable Synapses and Brain Efficiency

Understanding that synapses can hold more memory than previously thought was an important conclusion. However, scientists still didn’t understand how the brain was so efficient considering hippocampal synapses are notoriously unreliable.

Researchers believe that synapses might be constantly adjusting to average out their success and failure rates over time. To test this, researchers used their new data and a statistical model to find out how many signals it would take a pair of synapses to get to that eight percent difference.

“Every 2 or 20 minutes, your synapses are going up or down to the next size. The synapses are adjusting themselves according to the signals they receive,” says Bartol.

Technological Impact

“Our prior work had hinted at the possibility that spines and axons that synapse together would be similar in size, but the reality of the precision is truly remarkable and lays the foundation for whole new ways to think about brains and computers,” says Kristen Harris, co-senior author of the work and professor of neuroscience at the University of Texas, Austin. “The work resulting from this collaboration has opened a new chapter in the search for learning and memory mechanisms.”

The findings also offer a valuable explanation for the brain’s surprising efficiency. The waking adult brain generates only about as much power as a dim light bulb. The Salk discovery could help computer scientists build ultra-precise but energy-efficient computers, particularly ones that employ “deep learning” and artificial neural nets, techniques capable of sophisticated learning and analysis, such as speech recognition, object recognition and translation.

“This trick of the brain absolutely points to a way to design better computers,” says Sejnowski. “Using probabilistic transmission turns out to be as accurate and require much less energy for both computers and brains.”