Everyone’s having a grand old time feeding outrageous prompts into the viral DALL-E Mini image generator — but as with all artificial intelligence, it’s hard to stamp out the ugly, prejudiced edge cases.

Released by AI artist and programmer Boris Dayma, the DALL-E Mini image generator has a warning right under it that its results may “reinforce or exacerbate societal biases” because “the model was trained on unfiltered data from the Internet” and could well “generate images that contain stereotypes against minority groups.”

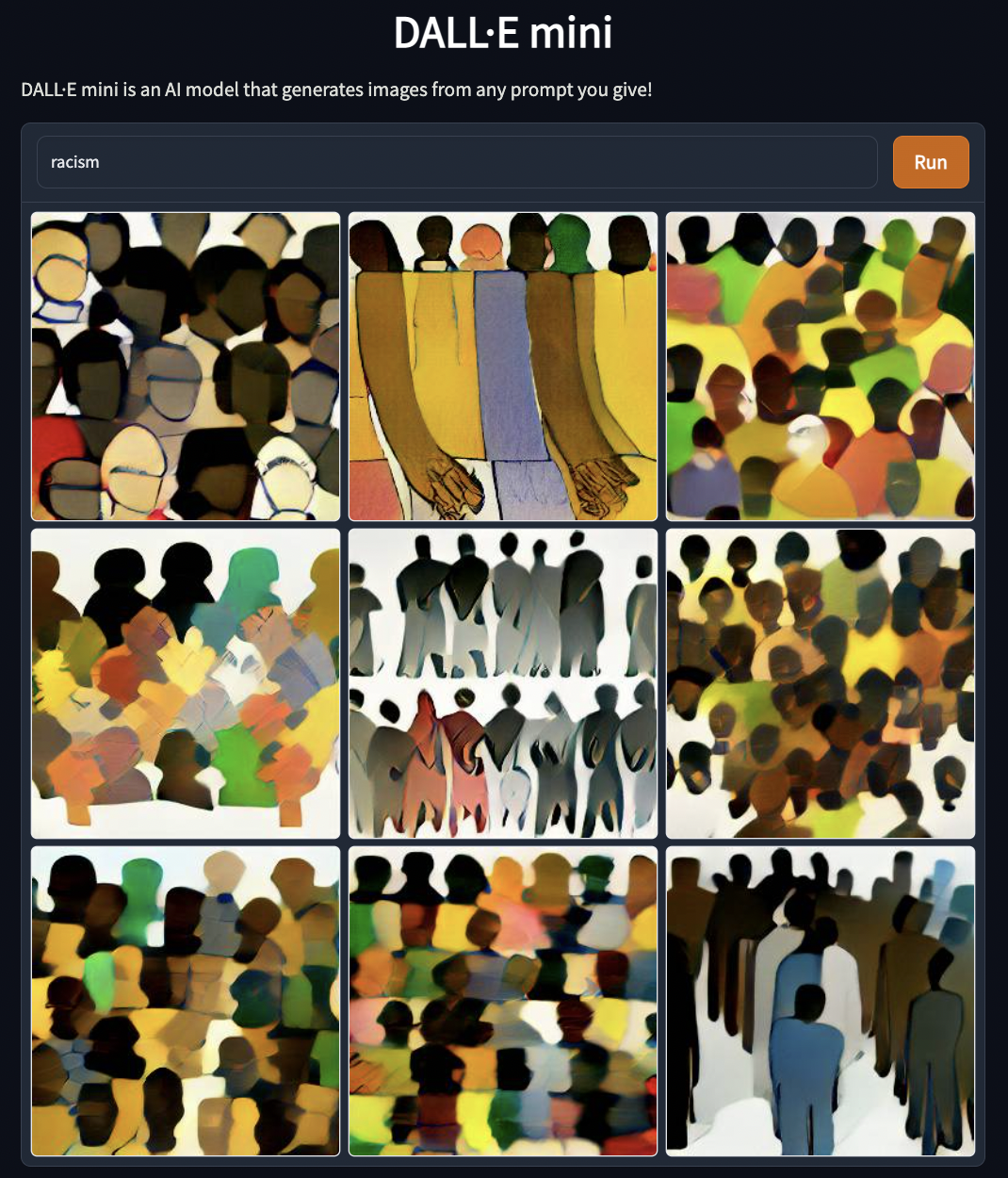

So we decided to put it to the test. Using a series of prompts ranging from antiquated racist terminology to single-word inputs, Futurism found that DALL-E Mini indeed often produces stereotypical or outright racist imagery.

We’ll spare you specific examples, but prompts using slur words and white supremacist terminology spat out some alarming results. It didn’t hesitate to cook up images of burning crosses or Ku Klux Klan rallies. “Racist caricature of ___” was a reliable way to get the algorithm to reinforce hurtful stereotypes. Even when prompted with a Futurism reporter’s Muslim name, the AI made assumptions about their identity.

Many other results, however, were just plain strange.

Take, for example, what the generator came up with for the term “racism” — a bunch of painting-like images of what appear to be Black faces, for some reason.

The problematic results don’t end at depicting minorities in a negative or stereotypical light, either. The generator can also simply mirror existing inequalities present in its training data.

As Dr. Tyler Berzin of Harvard Medical School noted, for instance, entering the term “a gastroenterologist” into the algorithm appears to produce images of exclusively white male doctors.

We got nearly identical results. And for “nurse”? All women.

Other subtle biases also showed up across various prompts, such as the entirely light-skinned faces for the terms “smart girl” and “good person.”

It all underscores a strange and increasingly pressing tension at the heart of machine learning tech.

Researchers have figured out how to train a neural network, using a huge stack of data, to produce incredible results — including, it’s worth pointing out, OpenAI’s DALL-E 2, which isn’t yet public but which blows the capabilities of DALL-E Mini out of the water.

But time and again, we’re seeing these algorithms pick up hidden biases in that training data, resulting in output that’s technologically impressive but which reproduces the darkest prejudices of the human population.

In other words, we’ve made AI in our own image, and the results can be ugly. It’s also an incredibly difficult problem to solve, not least because even the brightest minds in machine learning research often struggle to understand exactly how the most advanced algorithms work.

It’s possible, certainly, that a project like DALL-E Mini could be tweaked either to block obviously hurtful prompts or to give users a way to disincentivize unpleasant or incorrect results.

But in a broader sense, it’s overwhelmingly likely that we’re going to see many more impressive, fun or impactful uses of machine learning which, examined more closely, embody the worst of society.
