Future Society

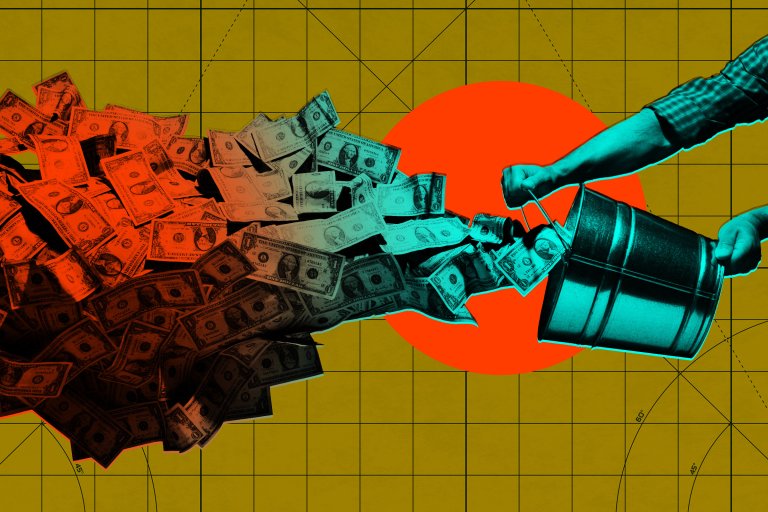

Technology allows us to live longer, work less, and know more than ever before. But what will the society of the not-so-distant future do once computers and robots replace most of our labor force? Who has the final say in how knowledge is used, and who gets access to it? These questions are just a snapshot of the issues we will have to face. We’ll track the policies, predictions, and philosophies that are steering us into the world of tomorrow.