Seen from our planet, the flash could be brighter than a multitude of suns — and might be followed by a ruined ozone layer, an upsurge in radiation, and a wave of cancers and mutations in humans and other creatures that would crest for hundreds or even thousands of years.

It’s not the premise of Hollywood’s latest apocalypse flick. These disasters could follow if a supernova ignited close to Earth — say, within 30 light years of our planet.

This month, NASA awarded $500,000 to a research team based in part at the University of Kansas to make the most painstaking assessment ever of the potential damage from a near-Earth supernova.

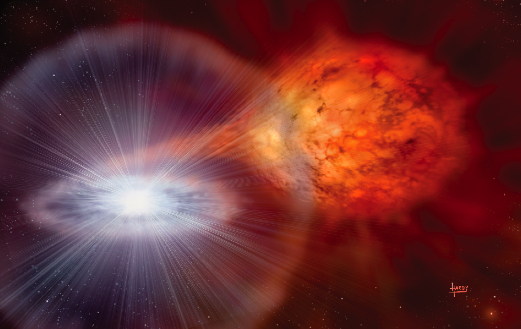

“A supernova is the explosion of a star, which comes at the end of the ‘life’ of large stars, when they collapse after running out of fuel,” said Adrian Melott, professor of physics and astronomy, who leads the KU Astrophysics Biology Working Group that earned the grant. “But, there can be other types, sometimes set off by the merger of two stars.” Melott is working with Andrew Overholt of MidAmerica Nazarene University and Brian Thomas of Washburn University — both KU alumni — to perform computer modeling and data analysis on supercomputers such as the National Science Foundation’s Teragrid.

In part, the team’s predictions will hinge on evidence of previous supernovae. According to Melott:

“This 2.5 million-year event is the only one we have concrete evidence of, and it’s near enough to affect the Earth and give us a dose of radiation and possibly climate change without being a mass killer. There has been iron-60 found in mud cores taken from the ocean bottom, about 2 to 2.5 million years old. Iron-60 is a radioactive isotope that basically can only have been dumped there by a supernova possibly 150 light years away. The nearest in recorded history was about 7,000 light years away.”

If humans lived to tell the tale, the consequences of a nearby supernova would change life for eons.

“We’d begin to get radiation effects,” said Melott. “Depletion of the ozone layer and resulting danger from ultraviolet light is common to this as well as many other astrophysical radiation events. There would be an increase of cosmic rays for hundreds or thousands of years, some of which would increase radiation on the ground — such as muons or neutrons. This might increase the cancer and mutation rates. Some have argued that it may change the cloud formation rate or the rate of lightning, leading to climate change.”

But how likely is such an occurrence?

While a supernova isn’t expected to explode in our galactic neighborhood anytime soon, the KU researcher said that supernovae occur in the Milky Way on a surprisingly regular basis. “There are 2 or 3 per century in our galaxy,” he said. “On average, you’d get one within 200 light years every million years or so, and less often for closer ones. The killer events within 30 light years are likely every few hundred million years. Most of them would be easily spotted — but there is a type from merger events that could happen with no warning, as they are due to the merger of dead stars that we don’t see.”
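Melott’s two figures are roughly consistent with simple volume scaling. The sketch below is only an illustration, not the team’s model: it assumes supernovae are spread uniformly through nearby space, so the chance of one occurring within a given distance grows with the cube of that distance. The function name and the uniform-density assumption are ours; the reference point (one event within 200 light years per million years) is taken from the quote.

```python
def recurrence_interval_myr(distance_ly, ref_distance_ly=200.0, ref_interval_myr=1.0):
    """Estimate the average wait, in millions of years, between supernovae
    occurring within `distance_ly` light years.

    Assumption (ours, not the researchers'): supernovae are uniformly
    distributed in space, so the rate scales with enclosed volume (d**3).
    The default reference point is the quoted "one within 200 light years
    every million years or so".
    """
    if distance_ly <= 0:
        raise ValueError("distance_ly must be positive")
    # A smaller sphere encloses fewer potential events, so the wait is longer.
    return ref_interval_myr * (ref_distance_ly / distance_ly) ** 3


if __name__ == "__main__":
    for d in (200, 100, 30):
        print(f"within {d} light years: about one every "
              f"{recurrence_interval_myr(d):.0f} million years")
```

Under that assumption, an event within 30 light years comes out at roughly one every 300 million years, in line with the quoted “every few hundred million years.” The real distribution of stars in the galactic disk is not uniform, so this is an order-of-magnitude check only.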

In the three-year study, the researchers will draw on supernova data from NASA space missions such as Swift, Chandra, GALEX and Fermi to estimate photon and cosmic ray intensity, solar and terrestrial magnetic field effects and atmospheric ionization. The KU team aims to improve on past estimates and produce the first detailed models linking all of these effects together. Melott said that in a worst-case scenario, a close supernova could bring on a large-scale die-off of life on Earth.

“It can happen,” he said. “It probably has. However, there is as yet no concrete evidence that a specific extinction is connected to a supernova.”

This article comes from the University of Kansas.