Many of today’s self-driving cars use automated systems that work in tandem with a collection of sensors and cameras. For example, Tesla’s Autopilot relies on radar and other sensors as well as a suite of eight cameras. However, none of these cameras can tell the driverless car what’s around a corner — an ability that researchers from the Massachusetts Institute of Technology (MIT) have developed with a new camera system they call CornerCameras.

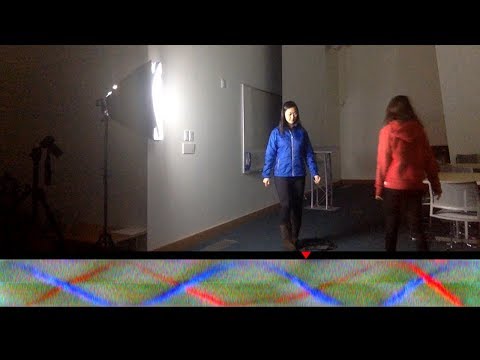

In a study published online, the researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) described the algorithm behind CornerCameras. Where regular vision, whether biological or mechanical, relies on light that reaches it directly from an object, CornerCameras captures subtle changes in lighting. Specifically, it spots what the researchers call the “penumbra”: a faint shadow created when a small amount of light from objects hidden around a corner is reflected onto the ground in the camera’s line of sight.

CornerCameras pieces together the subtle changes in these shadows into a rough image, which it uses to work out where the object is. “Even though those objects aren’t actually visible to the camera, we can look at how their movements affect the penumbra to determine where they are and where they’re going,” lead author Katherine Bouman said in a press release.
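To make the idea concrete, here is a toy sketch in Python. It is not the researchers’ algorithm, only an illustration under simplified assumptions: the ground near the corner is split into a handful of angular bins, a hidden object dims every bin past its own angle by a tiny amount, and the object’s position is recovered by subtracting a background estimate and looking for the sharpest drop in brightness. All names and numbers (`N_BINS`, `DROP`, `simulate_frame`, and so on) are hypothetical.

```python
import random

N_BINS = 32      # angular bins on the ground around the corner (assumed)
AMBIENT = 100.0  # baseline brightness of the ground (arbitrary units)
DROP = 0.5       # tiny dimming caused by the hidden object (assumed)
NOISE = 0.02     # per-pixel sensor noise (assumed)


def simulate_frame(rng, obj_bin=None):
    """Return one frame of ground brightness, one value per angular bin.

    If obj_bin is given, every bin at or beyond it is slightly darker,
    a toy stand-in for the penumbra cast by a hidden object.
    """
    frame = []
    for i in range(N_BINS):
        dim = DROP if obj_bin is not None and i >= obj_bin else 0.0
        frame.append(AMBIENT - dim + rng.gauss(0.0, NOISE))
    return frame


def estimate_background(frames):
    """Average several object-free frames, bin by bin."""
    return [sum(column) / len(frames) for column in zip(*frames)]


def locate_edge(frame, background):
    """Return the bin where brightness drops most sharply.

    Subtracting the background isolates the subtle change; the largest
    negative step between neighbouring bins marks the object's angle.
    """
    diff = [f - b for f, b in zip(frame, background)]
    steps = [diff[i] - diff[i - 1] for i in range(1, len(diff))]
    return min(range(len(steps)), key=lambda i: steps[i]) + 1


rng = random.Random(0)
background = estimate_background([simulate_frame(rng) for _ in range(50)])

# The hidden object moves across four positions; track it frame by frame.
true_bins = [5, 10, 15, 20]
track = [locate_edge(simulate_frame(rng, b), background) for b in true_bins]
print(track)
```

With noise this small relative to the dimming, the recovered track should match the simulated positions. A real scene is far messier, which is where the limitations Bouman describes come in.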

It’s fairly obvious how such a system could improve the ability of autonomous vehicles to see on the road. “If a little kid darts into the street, a driver might not be able to react in time,” Bouman added. For now, though, CornerCameras still needs some improvements: the technology doesn’t work in extremely low-light conditions, and the algorithm gets confused by changes in lighting. “While we’re not there yet, a technology like this could one day be used to give drivers a few seconds of warning time and help in a lot of life-or-death situations,” Bouman said.