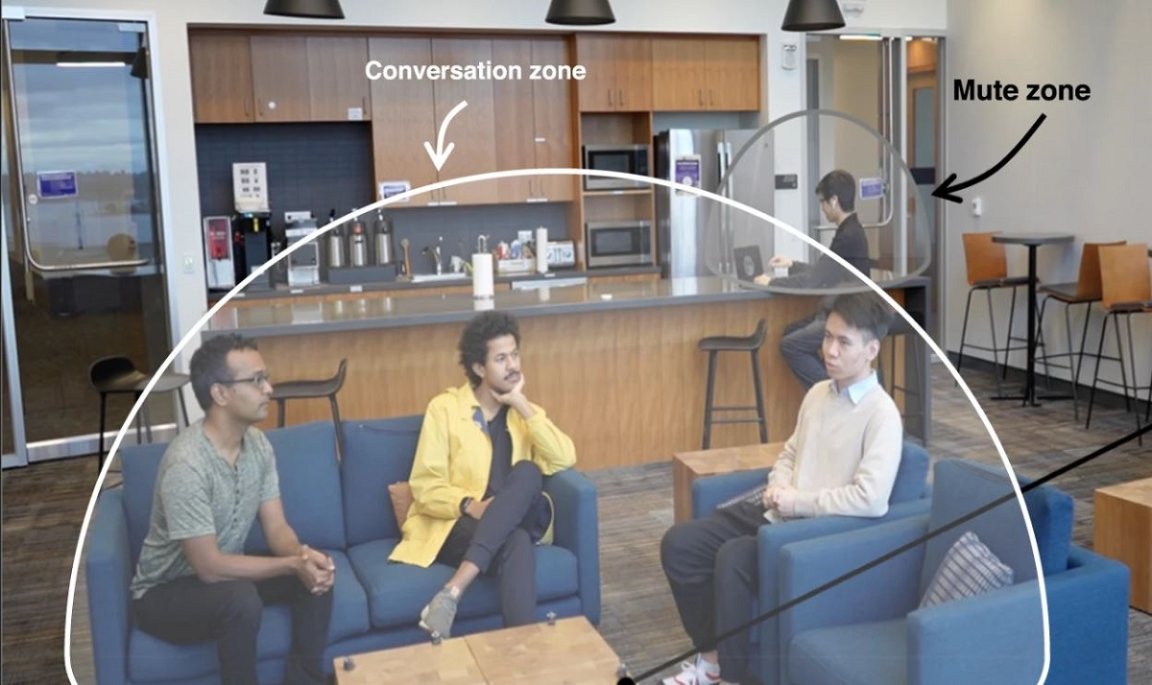

Cone of Silence

A new speaker can rearrange its seven “self-deploying” microphones to partition a room into so-called “speech zones,” allowing it to track and identify different voices, even as they move.

Even better, the researchers behind the invention say this pinpoint localization allows them not only to separate simultaneous conversations but also to mute noisy zones (or annoying guys) on command, which could come in handy for video calls during meetings.

As detailed in a recent study on the work, published in the journal Nature Communications, the unorthodox speaker comprises what’s known as a robot swarm. The self-deploying microphones come as thimble-sized robots that communicate with each other, moving on their tiny wheels to different points on their own like diminutive Roombas, and returning to a charging station when needed.

“For the first time, using what we’re calling a robotic ‘acoustic swarm,’ we’re able to track the positions of multiple people talking in a room and separate their speech,” said Malek Itani, a co-lead author of the study at the University of Washington’s Paul G. Allen School of Computer Science & Engineering, in a statement.

Sound Seeking

To navigate their environment, the prototype bots use a technique akin to high-frequency echolocation, the researchers say.
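Echolocation-style ranging comes down to timing sound. The article doesn't spell out the robots' exact ranging scheme, so the relation below is only the generic one. A pulse that bounces off an object and returns after a round trip of $\Delta t$ puts that object at distance $d$, with $c \approx 343$ m/s as the speed of sound in room-temperature air:

$$
d = \frac{c\,\Delta t}{2}, \qquad \text{e.g.}\quad \Delta t = 2\ \text{ms} \;\Rightarrow\; d = \frac{343 \times 0.002}{2} \approx 0.34\ \text{m}.
$$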

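The mobility this affords is crucial. Each microphone sits at a different distance from a given talker, so the same voice reaches each one at a slightly different time. Spreading the microphones out as far as possible widens those delays, which lets the neural network that processes the data make more precise calculations. For now, though, the robots are limited to roaming tabletops, as they can only localize in 2D space.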

“We developed neural networks that use these time-delayed signals to separate what each person is saying and track their positions in a space,” explained co-lead author Tuochao Chen at the Allen School, in the statement. “So you can have four people having two conversations and isolate any of the four voices and locate each of the voices in a room.”
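To make the idea of time-delayed signals concrete, here is a minimal Python sketch of classical time-difference-of-arrival (TDOA) localization. It is not the researchers' method. They use neural networks, and this sketch swaps in a brute-force least-squares grid search. It assumes the microphone positions are already known, everything lies in a 2D plane, and sound travels at 343 m/s. The table layout, talker position, and helper names (`arrival_times`, `relative_delays`, `locate`) are hypothetical, and the delays are simulated rather than estimated from real recordings.

```python
import math

# Approximate speed of sound in room-temperature air, in metres per second (assumption).
SPEED_OF_SOUND = 343.0


def arrival_times(source, mics, c=SPEED_OF_SOUND):
    """Time (s) for sound from a 2D `source` point to reach each microphone."""
    return [math.dist(source, mic) / c for mic in mics]


def relative_delays(times):
    """Time differences of arrival, measured against the first microphone."""
    return [t - times[0] for t in times]


def locate(measured, mics, bounds=(-1.0, 3.0, -1.0, 2.0), step=0.02, c=SPEED_OF_SOUND):
    """Return the grid point whose predicted delays best match `measured`.

    Brute-force least-squares search over the rectangle
    (x_min, x_max, y_min, y_max). A hypothetical stand-in that is far
    cruder than the neural networks described in the study.
    """
    x_min, x_max, y_min, y_max = bounds
    best, best_err = None, float("inf")
    for i in range(round((x_max - x_min) / step) + 1):
        for j in range(round((y_max - y_min) / step) + 1):
            point = (x_min + i * step, y_min + j * step)
            predicted = relative_delays(arrival_times(point, mics, c))
            # Sum of squared mismatches between predicted and measured delays.
            err = sum((p - m) ** 2 for p, m in zip(predicted, measured))
            if err < best_err:
                best, best_err = point, err
    return best


# Hypothetical layout: seven robots spread over a 2 m x 1 m tabletop.
mics = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (0.0, 1.0),
        (1.0, 1.0), (2.0, 1.0), (0.5, 0.5)]
talker = (2.6, 0.5)  # someone seated just past the table's right edge

# Simulated measurement; a real system would estimate these delays from audio.
measured = relative_delays(arrival_times(talker, mics))
estimate = locate(measured, mics)
print(f"estimated position: ({estimate[0]:.2f}, {estimate[1]:.2f}) m")
```

The search is deliberately naive so the geometry stays visible. Every candidate point predicts a set of delays, and the point whose predictions best match the measurements wins. Spreading the microphones farther apart makes those predicted delays differ more from one point to the next, which is the intuition behind the swarm spreading out.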

In the Zone

Chen’s claims are borne out by the results of real-world experiments.

The researchers tested the robot swarm in places like offices and kitchens while three to five people were talking, with the system having no prior knowledge of the locations or the voices.

In spite of those obstacles, the device was still able to localize voices that were within 1.6 feet of each other 90 percent of the time. The median error, meanwhile, was just under six inches across all scenarios. Pretty tight.

Its speed lets it down slightly, however. On average, the system takes 1.82 seconds to process three seconds' worth of sound, which might make video conferences a little clunky.
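One way to read those figures is as a real-time factor, which is processing time divided by audio duration. This framing is ours, not the study's:

$$
\text{RTF} = \frac{t_\text{process}}{t_\text{audio}} = \frac{1.82\ \text{s}}{3\ \text{s}} \approx 0.61
$$

A value below 1 means the system keeps pace with incoming speech. Still, if audio were handled in back-to-back three-second chunks (an assumption), a word would come out anywhere from about 1.8 seconds after it was spoken, at the end of a chunk, to about 3 + 1.82 ≈ 4.8 seconds, at the start of one. That lag is where the clunkiness would come from.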

Next up, the researchers want to apply these muting and separation techniques in physical space and in real time, using the swarm's localizing microphones to do for an entire room what noise-canceling headphones do for your ears.
