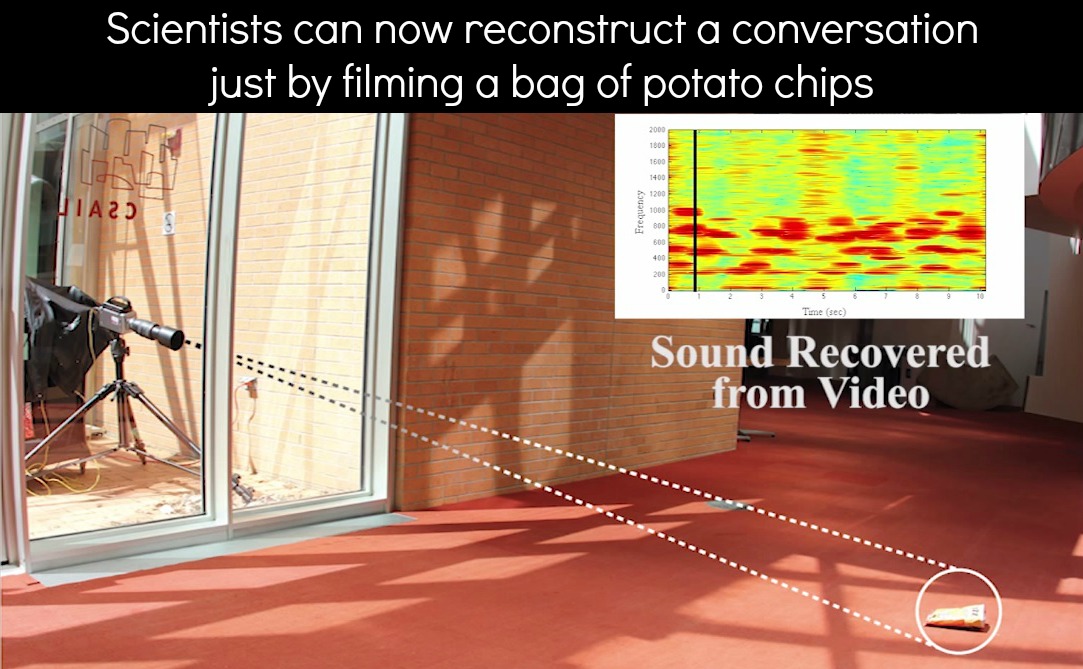

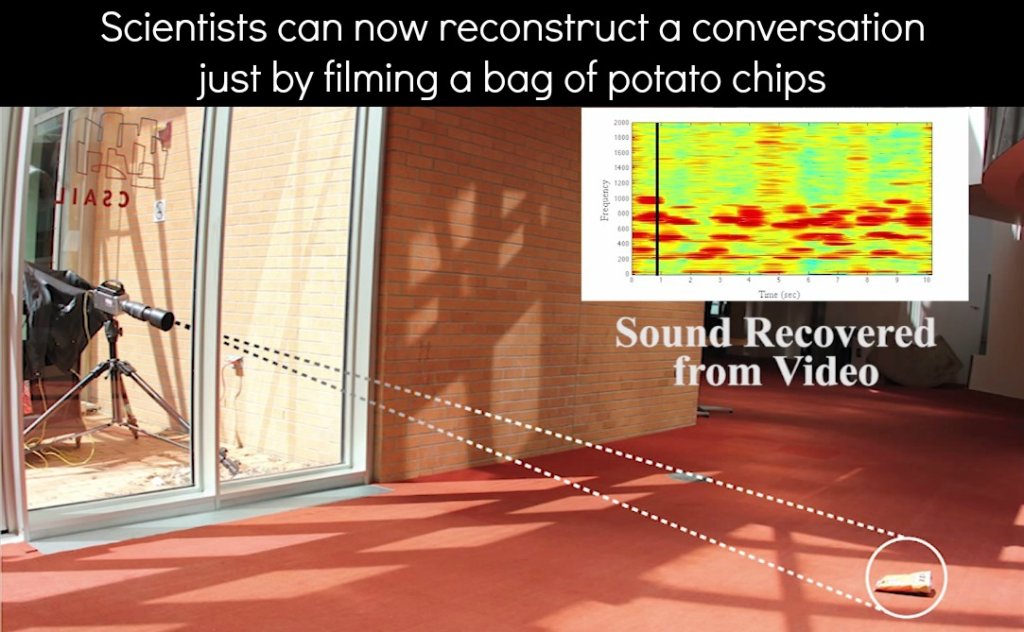

This sounds like something out of a science fiction movie. Researchers from MIT, Microsoft, and Adobe have joined forces to create a new way to eavesdrop on conversations. Using a high-speed camera and an algorithm that analyzes tiny vibrations in everyday objects (like a plant or a bag of potato chips), the team has developed a program that can reconstruct speech and songs with startling accuracy. The reconstructions were produced with the camera up to 15 feet away, and even through soundproof glass.

So, How Does It Work?

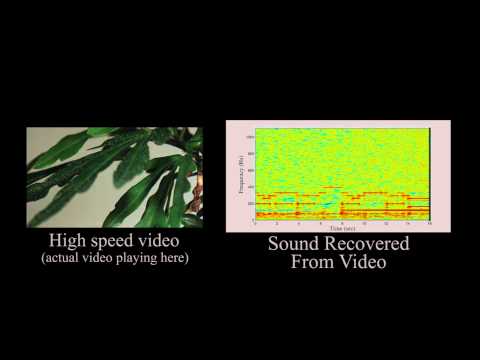

When a sound wave hits an object, the object vibrates. These vibrations are far too small for humans to see, even when high-speed footage is slowed down. Read correctly, however, they contain information about all of the sound hitting the object being filmed (both the background noise and the sound you're actually interested in). If you want to listen in on a conversation, accurately reading these vibrations would be a perfect way to do it.

In the experiment, the quality of the sound you're able to capture depends largely on how many frames per second you can film, because each frame effectively acts as one audio sample. Smartphones film at about 60 frames per second, which is far too slow to capture these tiny vibrations in practice. The experiment used cameras filming at between 2,000 and 6,000 frames per second to reconstruct the audio you can hear in the video. In case you were wondering, the best commercial high-speed cameras can reach 100,000 frames per second, well above what the experiment called for.
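Why does frame rate matter so much? If each video frame is treated as one audio sample, the Nyquist-Shannon sampling theorem says the highest frequency you can recover is half the sampling rate. The short Python sketch below is a back-of-the-envelope calculation under that assumption, not part of the research itself; the function name `max_recoverable_frequency_hz` is hypothetical.

```python
def max_recoverable_frequency_hz(frames_per_second: float) -> float:
    """Highest audio frequency a camera can capture without aliasing.

    Assumes each video frame acts as one audio sample, so by the
    Nyquist-Shannon sampling theorem the usable bandwidth is half the
    frame rate.
    """
    return frames_per_second / 2.0


# Frame rates mentioned in the article: a smartphone, the experiment's
# range, and a top-end commercial high-speed camera.
for fps in (60, 2_000, 6_000, 100_000):
    print(f"{fps:>7,} fps -> up to {max_recoverable_frequency_hz(fps):>8,.0f} Hz")
```

By this estimate, 60 fps tops out at 30 Hz, below the pitch of a typical speaking voice, while 2,000 to 6,000 fps reaches 1,000 to 3,000 Hz.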

This entire process is made possible by the way cameras see color and assign pixel values. The system isn't actually tracking the object's motion directly; instead, it measures how each pixel's color changes over time. Because the filmed object is vibrating, and therefore not perfectly stationary, its edges shift very slightly from frame to frame, and those tiny shifts show up most clearly as slight changes in pixel color.
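To make the idea of "motion hidden in pixel changes" concrete, here is a minimal Python sketch. It is not the research team's algorithm; it swaps in a simple, textbook gradient-based (optical-flow-style) estimate. It assumes a hypothetical one-dimensional scene: a single row of pixels crossing a soft dark-to-bright edge. For a small motion d, each pixel's brightness changes by roughly -d times the local brightness slope, so a least-squares fit over all pixels recovers d even when it is a small fraction of a pixel. The scene parameters, the 220 Hz tone, and the 2,000 fps frame rate are all illustrative assumptions.

```python
import math

# Hypothetical scene parameters, chosen only for illustration.
SCANLINE_PIXELS = 64
EDGE_CENTER = 32.0   # pixel position of the edge at rest
EDGE_WIDTH = 4.0     # softness of the edge, in pixels


def render_scanline(shift: float) -> list[float]:
    """One row of brightness values across a soft dark-to-bright edge,
    with the object displaced by `shift` pixels (fractions allowed)."""
    return [
        0.5 * (1.0 + math.tanh((x - shift - EDGE_CENTER) / EDGE_WIDTH))
        for x in range(SCANLINE_PIXELS)
    ]


def estimate_shift(reference: list[float], frame: list[float]) -> float:
    """Least-squares estimate of how far `frame` moved relative to `reference`.

    For a small motion d, brightness obeys frame[x] ~ reference[x] - d * grad[x],
    so the best-fit d is -sum(change * grad) / sum(grad ** 2).
    """
    numerator = 0.0
    denominator = 0.0
    for x in range(1, len(reference) - 1):
        grad = (reference[x + 1] - reference[x - 1]) / 2.0  # spatial slope
        change = frame[x] - reference[x]                    # change over time
        numerator += change * grad
        denominator += grad * grad
    return -numerator / denominator


# Simulate 10 ms of a 220 Hz vibration filmed at 2,000 fps (all assumed values).
FPS = 2_000
TONE_HZ = 220.0
AMPLITUDE_PIXELS = 0.05  # peak motion: one twentieth of a pixel

reference_frame = render_scanline(0.0)
recovered_signal = []
for t in range(FPS // 100):
    true_shift = AMPLITUDE_PIXELS * math.sin(2 * math.pi * TONE_HZ * t / FPS)
    recovered_signal.append(estimate_shift(reference_frame, render_scanline(true_shift)))
```

Each entry of `recovered_signal` is one audio sample, and played back at the frame rate the sequence traces the simulated tone. Real footage adds sensor noise, texture, and two-dimensional motion, so a practical system needs considerably more than this single-edge toy.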

WATCH: Passive Recovery of Sound from Video

As Larry Hardesty from the MIT News Office explains, “Suppose, for instance, that an image has a clear boundary between two regions: Everything on one side of the boundary is blue; everything on the other is red. But at the boundary itself, the camera’s sensor receives both red and blue light, so it averages them out to produce purple. If, over successive frames of video, the blue region encroaches into the red region — even less than the width of a pixel — the purple will grow slightly bluer. That color shift contains information about the degree of encroachment.” From there, it's a matter of interpreting those vibrations, which this research team has done remarkably well.
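Hardesty's blue/red example can be reproduced in a few lines of Python. This sketch assumes an idealized sensor pixel that simply averages the light landing on it, weighted by area, and uses pure blue and pure red as in the quote; the function names are hypothetical. It shows that a sub-pixel encroachment produces a proportional, measurable color shift, and that the shift can be inverted to recover how far the boundary moved.

```python
# Pure colors from the quoted example, as (red, green, blue) in [0, 1].
BLUE = (0.0, 0.0, 1.0)
RED = (1.0, 0.0, 0.0)


def boundary_pixel_color(blue_fraction: float) -> tuple[float, float, float]:
    """Color a sensor pixel reports when a blue/red boundary crosses it.

    Assumes the pixel averages all the light landing on it, so the result
    is the area-weighted mix of blue and red. `blue_fraction` is the share
    of the pixel covered by the blue region.
    """
    if not 0.0 <= blue_fraction <= 1.0:
        raise ValueError("blue_fraction must be between 0 and 1")
    red_fraction = 1.0 - blue_fraction
    return tuple(
        blue_fraction * b + red_fraction * r for b, r in zip(BLUE, RED)
    )


def blue_coverage(color: tuple[float, float, float]) -> float:
    """Invert the mix: with pure blue and red, the blue channel equals the
    fraction of the pixel the blue region covers."""
    return color[2]


# The boundary starts halfway across the pixel, then the blue region
# encroaches by a hundredth of a pixel (an assumed, illustrative amount).
before = boundary_pixel_color(0.50)
after = boundary_pixel_color(0.51)
print("before:", [round(c, 3) for c in before])  # an even purple
print("after: ", [round(c, 3) for c in after])   # a slightly bluer purple
print("encroachment:", round(blue_coverage(after) - blue_coverage(before), 3))
```

Real scenes rarely have perfectly pure colors or perfectly sharp edges, which is why a practical system has to combine many such small changes across the image rather than read a single pixel.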

Obviously, this type of listening technology could greatly benefit law enforcement, the military, and, don't forget, "Big Brother." (Sorry, I couldn't let a Big Brother joke pass me by.) Cameras capable of capturing these vibrations are already commercially available; it's just a matter of using them to listen to their surroundings instead of simply filming them.