Policy Gradient Methods

Many current artificial intelligence (AI) systems are built on machine learning algorithms and deep neural networks, and can perform some tasks as well as humans do, and in some cases better. One popular example is DeepMind’s Go-playing AI AlphaGo, which has beaten professional Go players on more than one occasion.

The success of such AI systems can be attributed at least in part to a family of reinforcement learning techniques called policy gradient methods. A policy gradient method directly adjusts the parameters of a policy, the rule an agent uses to choose its actions, in the direction that increases the reward the agent can expect to collect. Aside from AlphaGo, the technique has also been used to train deep neural networks that control agents in video games and 3D locomotion.
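To make the idea concrete, here is a minimal sketch of a policy gradient update on a toy problem: an agent choosing between two slot-machine arms. It is not taken from OpenAI's or DeepMind's code. The function names (`softmax`, `train_bandit`), the reward values, the learning rate, and the noise level are all assumptions chosen for illustration.

```python
import math
import random

def softmax(prefs):
    """Turn raw preferences (the policy parameters) into action probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def train_bandit(reward_means, steps=2000, lr=0.1, noise=0.5, seed=0):
    """Hypothetical toy example: learn which arm pays best via policy gradient."""
    rng = random.Random(seed)
    prefs = [0.0] * len(reward_means)  # one parameter per action
    baseline = 0.0                     # running average reward, reduces variance
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        # Sample an action from the current policy and observe a noisy reward.
        action = rng.choices(range(len(probs)), weights=probs)[0]
        reward = rng.gauss(reward_means[action], noise)
        advantage = reward - baseline
        baseline += (reward - baseline) / t
        # Gradient of log pi(action) for a softmax policy is (one-hot - probs).
        for a in range(len(prefs)):
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * advantage * grad_log
    return softmax(prefs)

final_probs = train_bandit([0.0, 1.0])
print(final_probs)
```

Actions that earn more than the running average become more likely, and actions that earn less become less likely, so the policy should drift toward the better-paying arm. Real systems replace the two-entry preference list with a deep neural network, but the update follows the same pattern.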

However, researchers at OpenAI point out that such methods have their limits. To overcome those restrictions, the researchers have started using a new kind of reinforcement learning algorithm called Proximal Policy Optimization (PPO), which is proving to be much simpler to implement and fine-tune. “We propose a new family of policy gradient methods for reinforcement learning, which alternate between sampling data through interaction with the environment, and optimizing a ‘surrogate’ objective function using stochastic gradient ascent,” the researchers wrote in a study published online last week.

Improving AI’s Ability to Learn

To improve AI’s capacity for learning and adapting to new situations, OpenAI proposes relying on PPO, which “strikes a balance between ease of implementation, sample complexity, and ease of tuning, trying to compute an update at each step that minimizes the cost function while ensuring the deviation from the previous policy is relatively small,” as an OpenAI blog post explained.
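The article does not spell out how PPO keeps each update close to the previous policy. One way to do it, described in the PPO paper, is a clipped surrogate objective: the ratio between the new and old probability of each action is clipped to a narrow band around 1, so the objective stops rewarding changes beyond that band. The sketch below is a simplified illustration, not OpenAI's implementation. The function name `clipped_surrogate` and its argument names are hypothetical, and the clip range of 0.2 is an assumed default.

```python
import math

def clipped_surrogate(new_logp, old_logp, advantages, clip_eps=0.2):
    """Average clipped surrogate objective over a batch of sampled actions.

    new_logp / old_logp: log-probabilities of each sampled action under the
    new and old policy. advantages: how much better than expected each
    action turned out. All names here are illustrative assumptions.
    """
    total = 0.0
    for nl, ol, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(nl - ol)  # pi_new(a|s) / pi_old(a|s)
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Taking the minimum gives a pessimistic estimate, removing the
        # incentive to move the policy far from the old one.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)

# An action that became 1.5x more likely and had a positive advantage:
# the gain is capped as if the ratio were only 1.2.
print(clipped_surrogate([math.log(1.5)], [0.0], [1.0]))
```

In training, this value would be maximized with stochastic gradient ascent on batches of data collected by running the old policy, then the process repeats with fresh samples.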

They demonstrated how PPO works by developing interactive AI agents in their simulated environment, called Roboschool. “PPO lets us train AI policies in challenging environments,” the blog said. It trains an AI agent “to reach a target […], learning to walk, run, turn, use its momentum to recover from minor hits, and how to stand up from the ground when it is knocked over.” The interactive agents were able to follow new target positions set via keyboard on their own, even though these targets differed from the ones the agents were primarily trained on. In short, they managed not just to learn, but to generalize.

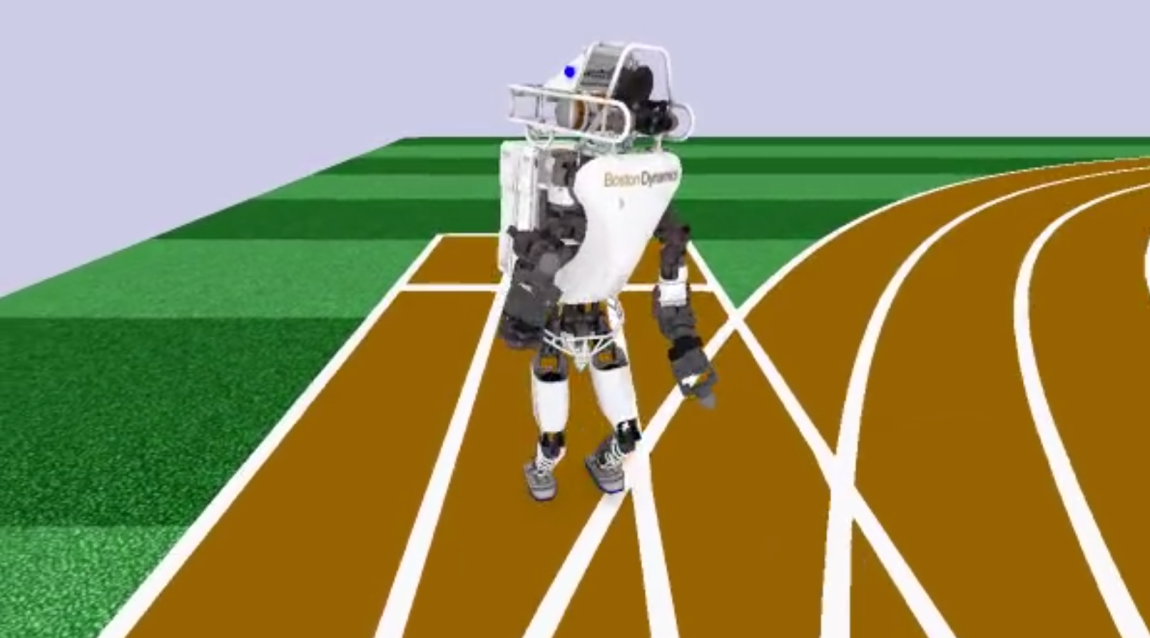

This method of reinforcement learning could also be employed to train robots to adapt to their environment. OpenAI’s researchers tested the idea on a simulation of Boston Dynamics’ bipedal robot Atlas. This was even more complicated than the previous experiment, as the Atlas model has 30 distinct joints, whereas the original interactive agent OpenAI used had only 17.

By using PPO, OpenAI hopes to develop AI that can not only adapt to new environments, but do so faster and more efficiently. To that end, they’re calling on developers to try it out. As they wrote on their website, “We’re looking for people to help build and optimize our reinforcement learning algorithm codebase.”