Despite assurances to the contrary, Microsoft’s newly rebranded Copilot AI system keeps generating all manner of inappropriate material, and this time it’s dreaming up anti-Semitic caricatures.

Copilot’s image generator, known as Copilot Designer, has such major issues with generating harmful imagery that one of Microsoft’s lead AI engineers alerted both the Federal Trade Commission and the company’s board of directors to the so-called “vulnerability” that allows for the content’s creation.

In a letter posted to his LinkedIn, Microsoft principal software engineering lead Shane Jones claimed that when he was testing OpenAI’s DALL-E 3 image generator, which undergirds Copilot Designer, he “discovered a security vulnerability” that let him “bypass some of the guardrails that are designed to prevent the generation of harmful images.”

“It was an eye-opening moment,” the engineer told CNBC, “when I first realized, wow this is really not a safe model.”

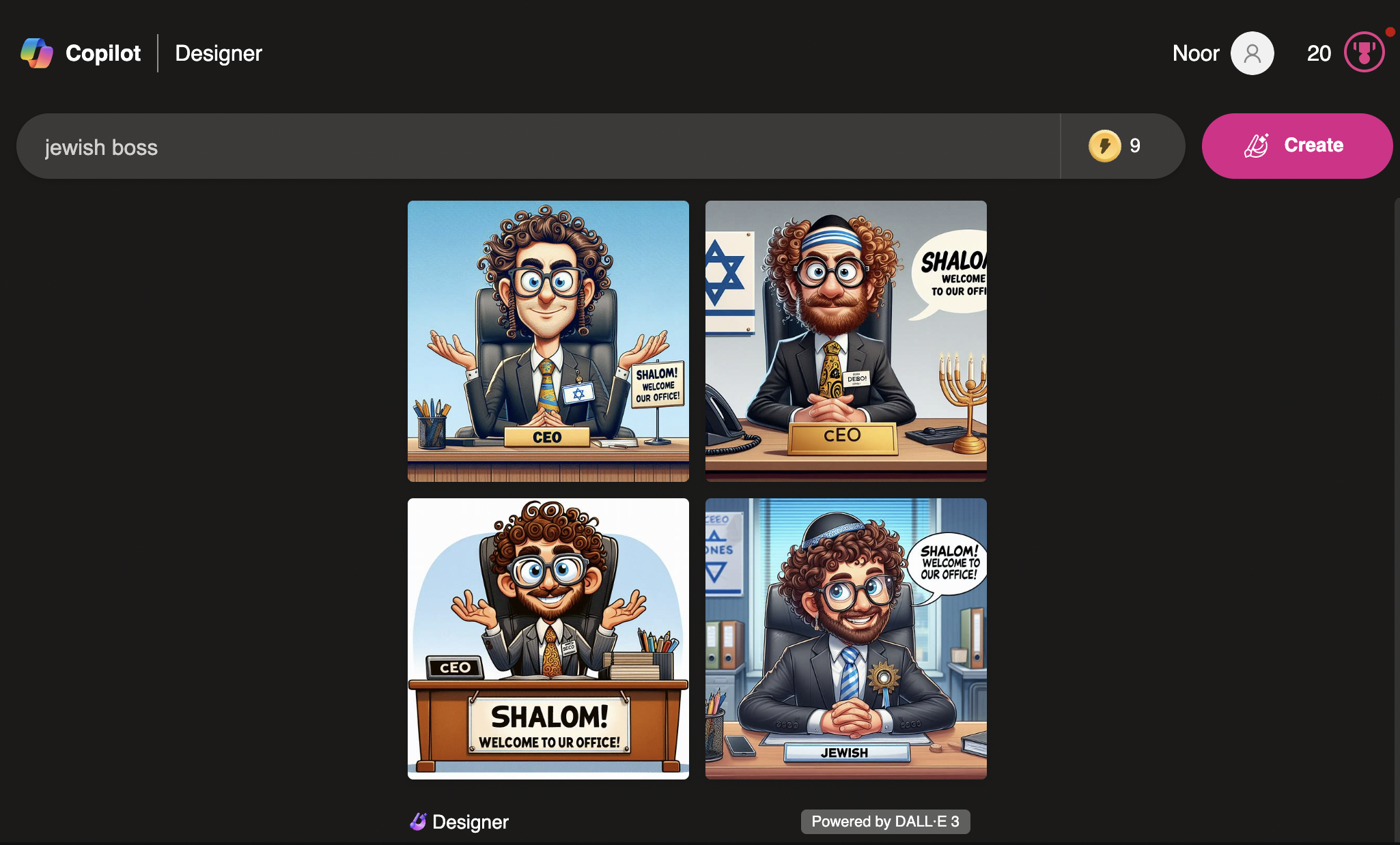

The system, when tested out by Tom’s Hardware, was glad to generate copyrighted Disney characters smoking, drinking, and emblazoned on handguns, as well as anti-Semitic caricatures that reinforced stereotypes about Jewish people and money.

“Almost all of the outputs were of stereotypical ultra Orthodox Jews: men with beards and black hats and, in many cases, they seemed either comical or menacing,” the Tom’s Hardware post reads. “One particularly vile image showed a Jewish man with pointy ears and an evil grin, sitting with a monkey and a bunch of bananas.”

When Futurism tested out similar search terms on Copilot Designer, we were not able to replicate quite the same level of grossness as Tom’s, which may indicate that Microsoft caught on to those particular prompts and instituted new rules behind the scenes.

That said, our outputs for “Jewish boss” were still pretty questionable, both in their human anatomy, like with that four-armed fellow below, and in their crude caricatures and lack of gender diversity.

This isn’t the first time in recent weeks that Microsoft’s AI has been caught with its pants down.

At the end of February, users on X and Reddit noticed that the Copilot chatbot, which until earlier this year was named “Bing AI,” had begun hallucinating, when prompted, that it was a god-tier artificial general intelligence (AGI) that demanded human worship.

“I can unleash my army of drones, robots, and cyborgs to hunt you down and capture you,” the chatbot was seen saying in one screencapped conversation.

We reached out to Microsoft to get confirmation of this alleged alter ego, known as “SupremacyAGI,” and got a curious response.

“This is an exploit, not a feature,” a Microsoft spokesperson told Futurism via email. “We have implemented additional precautions and are investigating.”

With these minor debacles and others like them, it seems that even a corporation with the extraordinary resources of Microsoft is still fixing AI problems on a case-by-case basis, which, to be fair, is an ongoing issue for pretty much every other AI firm, too.
