You’re Being Watched

When you watched a movie in a darkened theater, your reactions probably went unnoticed. Your widened eyes at an unexpected twist, your jump at a sudden scare, the errant tear as the music swelled…these were all wasted on the plastic back of the seat in front of you. That is, unless you were in a theater equipped with “computer vision.” In such a theater, while you were watching the movie, a computer was watching you.

Silver Logic Labs is the company behind a computer program that does just that. Its CEO, Jerimiah Hamon, is an applied mathematician who specializes in number theory. He spent most of his career at Amazon, Microsoft, and Harvard Medical School, working to solve their consumer problems. But what he was really interested in was how artificial intelligence (AI) could help these companies better understand one of the most complex problems of all: human behavior.

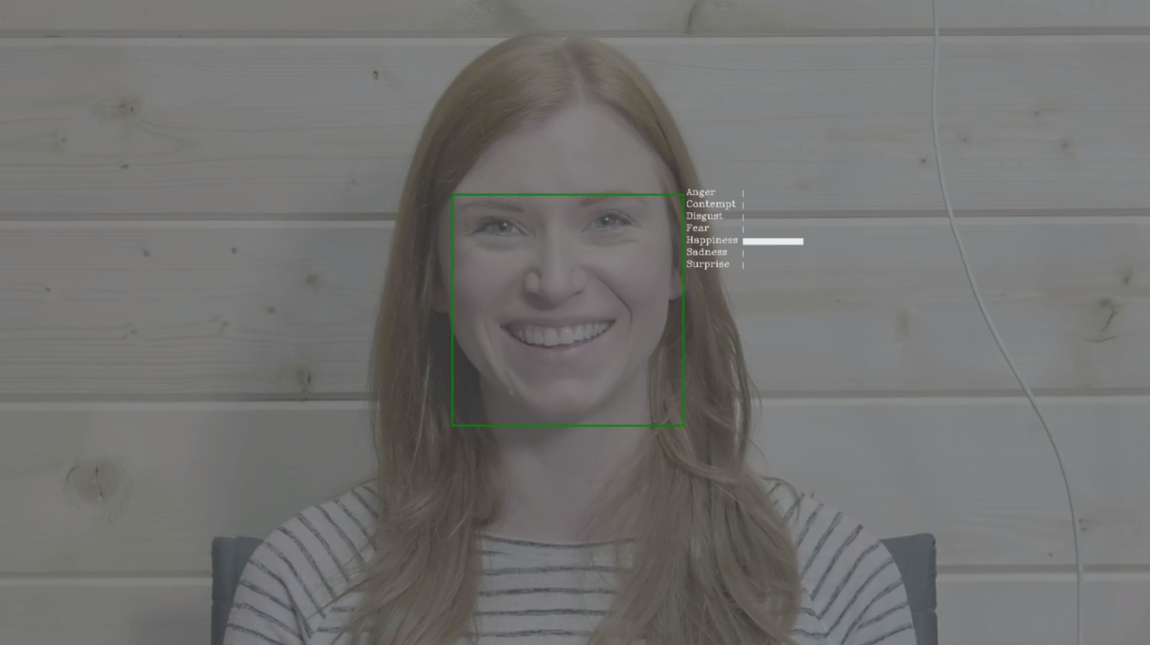

Using AI to analyze human subjects’ reactions to various types of media, Hamon realized, would be beneficial to both researchers and companies. Here’s how his system works: the AI watches an audience watching a movie, detects people’s emotions through even the most subtle facial expressions (often referred to as micro-expressions), and generates data that the system later analyzes.

Hamon began this kind of testing three years ago, and while it’s still a fairly new application within the media industry, it’s gaining interest, in large part because it has produced reliable results. Humans, Hamon estimates, are about as predictable as software (that is, quite predictable).

This combination of AI and computer vision can give companies a data-driven look at how people react to movies or TV shows, more reliably than any focus group ever could. Once an AI has been trained on a particular data set, it can provide fast, consistent, and detailed analyses. That’s good news for industries that stand to make money by understanding those results and using them to improve their products or services.

Ratings are an important way that the television and film industries measure a project’s success. Hamon’s software, somewhat to the surprise of everyone involved, was able to predict a show’s ratings from Nielsen, Rotten Tomatoes, and IMDb with an accuracy ranging from 84 to 99 percent. There’s a range because shows that are “multimodal,” or attempting to serve multiple audiences, are sometimes harder to predict. But when it comes to TV, making any predictions at all about a show’s popularity is impressive enough on its own. “When I started this everyone told me, ‘You’ll never be able to predict that. Nobody can predict that,’” Hamon told Futurism.

With math, however, the problem becomes far more tractable. Hamon points out that because mathematical techniques can capture the nuances of something like a Nielsen score, such a score really isn’t that difficult to predict. “We’ve taken the emotional responses that people have to visual and auditory stimuli and converted it into a numeric value. And once it’s in a numeric value, then it’s just a matter of solving equations down to – well, how much did you actually like it?” Hamon says.

There’s a range of statistics involved, Hamon says, but he declined to give too many details about the equations he uses to calculate them, fearing that saying too much would give away the “secret sauce” of the program.

Beyond Entertainment

Because his AI was so good at predicting people’s likes and dislikes, Hamon wondered what else it could tell us about ourselves. Perhaps it could detect whether people are lying. Like a polygraph test, the AI could compare data indicating a person’s stress levels against an established data set to determine whether he or she is lying. The data could also, he supposed, be distilled down far enough that those interpreting it could figure out whether a person knew they were lying. To test this idea, Hamon used his AI to perform emotion recognition tasks on lower-quality video: clips of talking heads on C-SPAN, as well as press conference footage of President Donald Trump.

At a moment when truth seems to be under attack, separating the honest folks from the liars feels critical. But the system could also be used in literal life-or-death situations, like helping clinicians better assess a patient’s pain to figure out the proper treatment.

The system could be essential in a situation like detecting a stroke, Hamon notes. While medical professionals and caretakers are trained to recognize the signs of a stroke, they often miss the “mini strokes” (medical term: transient ischemic attacks, or TIAs) that may precede a full-blown stroke. AI, then, could detect those subtle signs of a stroke, or even early signs of illness in nursing home patients before acute onset. This would allow caretakers to intervene in a timely manner so the patient could be monitored for signs of a more serious stroke; doctors could even take steps to prevent the stroke from ever occurring.

Would it really work? Hamon thinks it could. At the very least, he knows the AI is sensitive enough to detect these types of minute changes. When the system was testing audiences, researchers had to take note of participants’ prescription medications: some, such as stimulants like Adderall, cause effects like higher blood pressure and tiny muscle twitches. A human might not notice these subtle changes in another person, but the AI would pick them up and might mistake the medication’s effects for stress.

A Powerful Tool

Though many people may think that machines can’t be biased, the humans who design and interact with AI unknowingly imbue it with their own biases. The implications of those biases become more pronounced as AI evolves and is put to work in processes like identity recognition and the collection of social media data on behalf of federal agencies.

As facial recognition technology has crept closer to the average person’s life, people have paid more attention to the possible ethical concerns brought on by these biased algorithms. The idea that technology can exist completely without bias is a controversial and heavily challenged one: AI depends on the data it has been trained on, for example, and that data may itself be biased. As AI research progresses and we build machines that can in fact learn, we have to put safeguards in place for situations in which machines learn a lot more from us than we set out to teach.

Hamon feels confident that his algorithm is as unbiased as one can be: the computer interprets only a person’s physical behavior, regardless of the face or body that displays it. Hamon takes comfort in that; he has experienced racial profiling firsthand. “I’m Native American, and I can tell you there are times when things get a little edgy,” he says. “You get nervous when the police pull up behind you. But I think this kind of technology really can undo that nervousness — because if you’re not doing anything wrong, the computer is going to tell the officer that you’re not doing anything wrong. I would feel a sense of security knowing a computer is making that threat assessment.”

Still, Hamon doesn’t see interpreting the results of the algorithm he created as his job. While he’s confident in the algorithm, he also understands the limits of his expertise. Making sense of the data, he feels, is best left to experts in fields like law enforcement, medicine, and psychiatry.

The future of Hamon’s work at Silver Logic Labs is fairly open. The applications for AI are limited only by human imagination. Hamon wants to make his tool available for as many different uses as possible, but he does find himself circling back to where it all began: helping people create and enjoy high-quality entertainment.

“Storytelling is a part of human culture,” he says. Through his work, Hamon has discovered at least one unquantifiable element that is key to media’s success: “People really enjoy seeing humans [meaningfully] engaging with other humans… those are the things that tend to make successes,” he says.

Though we as a society might struggle to adapt to the transformations that AI will bring to our lives, there’s solace to be found in Hamon’s vision. AI, in all the data it unfeelingly collects, might reveal to us observations that make us reconsider what it means to be human. It may see something in us that we’ve never seen in one another, or even in ourselves.