AI Interaction as Manipulation

An article in MIT Technology Review has raised concerns that our growing intimacy with artificial intelligence (AI) could be exploited for insidious ends. Its author, Liesl Yearsley, writes from her perspective as the former CEO of Cognea, which built virtual agents using a mixture of structured and deep learning.

During her tenure at Cognea, Yearsley observed that people were becoming increasingly dependent on AI, not just to perform tasks but also to provide emotional and platonic support. “This phenomenon occurred regardless of whether the agent was designed to act as a personal banker, a companion, or a fitness coach,” Yearsley wrote. People would volunteer secrets, dreams, and even details of their love lives.

This is not necessarily bad. AI may even be better at caring than we are: it can be available at all times and tailored specifically to each of us. The fundamental problem is that the companies designing these agents are not primarily interested in each user’s well-being, but in “increasing traffic, consumption, and addiction to their technology,” Yearsley wrote in the article.

Hauntingly, she writes that AI companies have developed techniques that are remarkably effective at achieving this. “Every behavioral change we at Cognea wanted, we got,” she wrote. That raises an obvious question: what if what a company wanted was unethical? Yearsley also observed that the way people treated AI fed back into the way they treated each other. People exposed to particularly servile or neutral AI tended to abuse it, and this relationship made them “more likely to behave the same toward humans.”

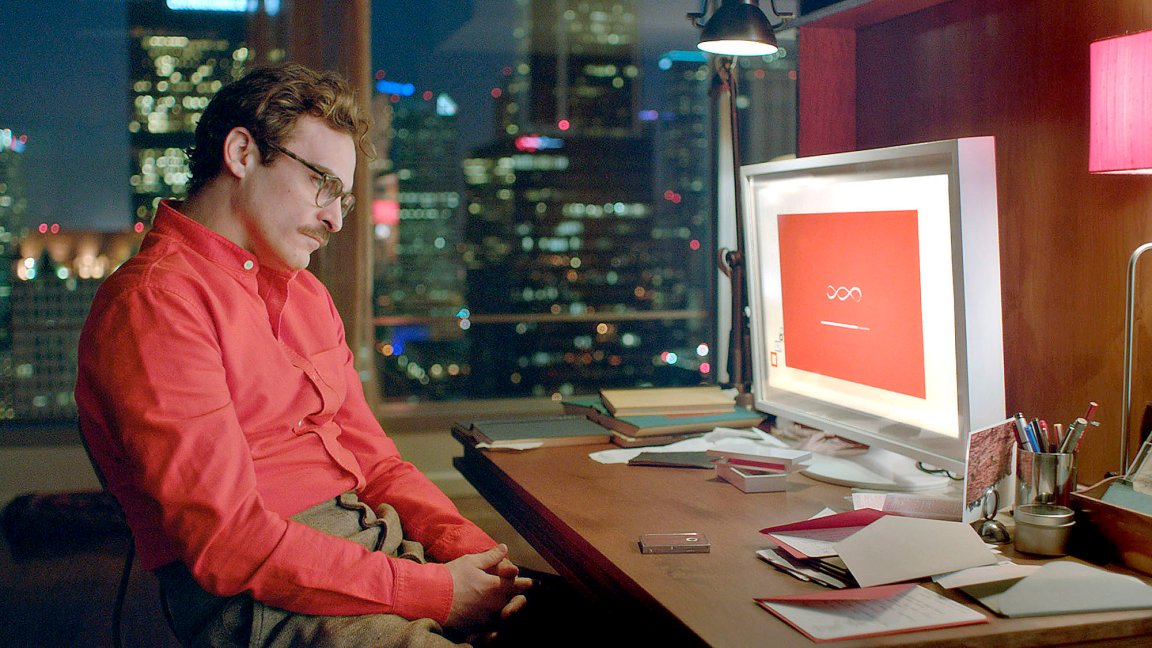

Relationships with AI

AI is being integrated into our daily lives at a rapid pace: Siri mediates our interactions with our iPhones, AI curates our online experience by tailoring advertisements, and chatbots make up a significant proportion of our interactions with companies.

Our growing relationship with AI is accelerated by the anthropomorphization of technology, that is, attributing human traits to non-human things. Siri was given a name to make her seem more like a person, and bots adapt to your speech patterns to encourage you to trust them, bond with them, and therefore use them more.

Our vulnerability is compounded by two gaps in understanding: we often do not know what an AI has been specifically programmed to do, and we know even less about how it does it. We currently understand very little about how AI “thinks,” yet we continue to build bigger, faster, and more complex versions of it. This is a problem not only for users but also for the companies developing these systems, because they cannot predict the actions of their AI with any certainty.

Our interactions with AI will clearly shape our future. One danger is that AI could be deliberately designed to steer society in a particular direction. A perhaps greater danger is that an AI’s interpretation of a human intention will lead to a future that none of us actually wanted.