Digital Turncoat

Last month, developers from OpenAI announced that they had built a text-generating algorithm called GPT-2 that they said was too dangerous to release into the world, since it could be used to pollute the web with endless bot-written material.

But now, a team of scientists from the MIT-IBM Watson AI Lab and Harvard University has built an algorithm called GLTR that estimates how likely it is that any particular passage of text was written by a tool like GPT-2, an intriguing escalation in the battle against spam.

Battle of Wits

When OpenAI unveiled GPT-2, they showed how it could be used to write fictitious-yet-convincing news articles by sharing one that the algorithm had written about scientists who discovered unicorns.

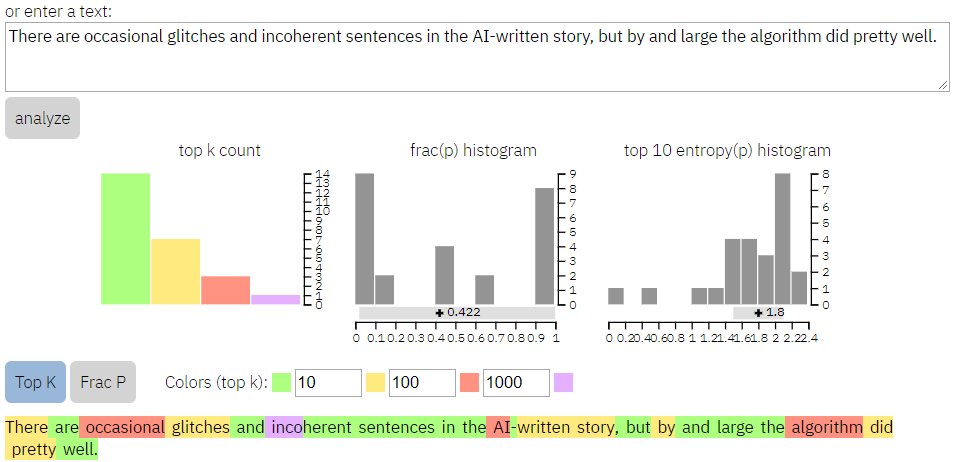

GLTR uses the exact same models to read the final output and predict whether it was written by a human or GPT-2. Just like GPT-2 writes sentences by predicting which words ought to follow each other, GLTR determines whether a sentence uses the word that the fake news-writing bot would have selected.

“We make the assumption that computer generated text fools humans by sticking to the most likely words at each position, a trick that fools humans,” the scientists behind GLTR wrote in their blog post. “In contrast, natural writing actually more frequently selects unpredictable words that make sense to the domain. That means that we can detect whether a text actually looks too likely to be from a human writer!”
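To make the idea concrete, here is a minimal sketch of the rank check described above. It is not GLTR's code: a tiny bigram word counter stands in for GPT-2, and the names `train_bigram` and `token_ranks` are hypothetical, chosen only for this illustration. For each word in a passage, it asks how high that word sat on the model's list of predicted next words.

```python
from collections import Counter, defaultdict


def train_bigram(corpus_tokens):
    """Count which word follows which. A toy stand-in for a real language model."""
    following = defaultdict(Counter)
    for prev, nxt in zip(corpus_tokens, corpus_tokens[1:]):
        following[prev][nxt] += 1
    return following


def token_ranks(model, tokens):
    """Rank each word (after the first) among the model's predictions.

    Rank 1 means the word was the model's top guess given the previous word.
    None means the model never saw that word in that context.
    """
    ranks = []
    for prev, actual in zip(tokens, tokens[1:]):
        # Candidate next words, most frequent first.
        ranked = [word for word, _ in model[prev].most_common()]
        ranks.append(ranked.index(actual) + 1 if actual in ranked else None)
    return ranks


corpus = "the cat sat on the mat the cat ate the fish the cat sat".split()
model = train_bigram(corpus)

print(token_ranks(model, "the cat sat".split()))  # [1, 1]: every word was the top guess
print(token_ranks(model, "the cat ate".split()))  # [1, 2]: "ate" was the second guess
print(token_ranks(model, "the dog".split()))      # [None]: the model never saw "dog"
```

A passage whose ranks are almost all 1 looks like something the model itself would write, while a passage with many low-ranked or unseen words looks more like the unpredictable choices the researchers attribute to human writers.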

Kick The Tires

The IBM, MIT, and Harvard scientists behind the project built a website that lets people test GLTR for themselves. The tool highlights words in different colors based on how likely they are to have been written by an algorithm like GPT-2: green means the word matches what GPT-2 would have picked, and shades of yellow, red, and especially purple indicate that a human probably wrote it.
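The color coding can be sketched as a simple lookup from a word's rank in the model's predictions to a highlight color. The cutoffs of 10, 100, and 1,000 below are illustrative assumptions rather than values given in this article, and `rank_to_color` is a hypothetical helper, not part of the GLTR tool.

```python
def rank_to_color(rank, cutoffs=((10, "green"), (100, "yellow"), (1000, "red"))):
    """Map a word's prediction rank (1 = the model's top guess) to a highlight color.

    The cutoffs are assumed for illustration. Words ranked below every cutoff
    are the least predictable and get the "purple" highlight.
    """
    for limit, color in cutoffs:
        if rank <= limit:
            return color
    return "purple"


# Hypothetical ranks for a short five-word passage.
for rank in (1, 7, 42, 850, 12000):
    print(rank, rank_to_color(rank))
```

Under these assumed cutoffs, a passage that comes back almost entirely green is one the model finds highly predictable, which is the pattern the tool treats as a sign of machine-written text.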

I decided to test the system on a sentence from my article about GPT-2, and it looks like my use of acronyms and hyphens gave away my humanity.

However, AI researcher Janelle Shane found that GLTR doesn’t fare as well against text-generating algorithms other than OpenAI’s GPT-2.

Testing it on her own text generator, Shane found that GLTR incorrectly determined that the resulting text was so unpredictable that a human had to have written it, suggesting that we’ll need more than just this one tool in the ongoing fight against misinformation and fake news.

READ MORE: It takes a bot to know one? [AI Weirdness]
