This article is part of a series about season four of Black Mirror, in which Futurism considers the technology pivotal to each episode and evaluates how close we are to having it. Please note that this article contains mild spoilers. Season four of Black Mirror is now available on Netflix.

The Headless Guard Dog

Three people prepare for their mission to break into a seemingly abandoned warehouse. They have promised to help someone who is dying, to make his final days easier. They seem nervous and a little frantic, as if they were undertaking the task out of sheer desperation.

Within a few minutes, we find out what they're afraid of, and as the episode continues, we understand why they were so worried. It's a four-legged, solar-powered robot dog that looks eerily similar to the latest iteration of Boston Dynamics' SpotMini. Like the SpotMini, "the dog," as it's called in the "Metalhead" episode of the latest season of Black Mirror, doesn't have a head. Instead, it has a glass-encased front piece that houses its many sensors, including a sophisticated computer vision system (the screen periodically flips to the dog's view of the world).

Unlike the SpotMini, however, the metalhead dog comes with an arsenal of advanced weaponry. It can fire a grenade that launches shrapnel-like tracking devices into the flesh of would-be thieves or assailants, for example, and its front legs are armed with guns powerful enough to blow a person's head off. It can also connect to computer systems, which lets it carry out more high-tech tasks like unlocking security doors and driving a smart vehicle.

The metalhead dog is no regular guard dog. It's lethal and relentless, able to hunt down and destroy anyone who crosses it. Potential robbers, like the characters at the beginning of the episode, would be wise to stay away, no matter how promising the haul.

Like a lot of the technology in Black Mirror, the dog isn’t so far-fetched. Countries like the United States and Russia are keen on developing weapons powered by artificial intelligence (AI); companies like Boston Dynamics are actively developing robo-dogs to suit those needs, among others.

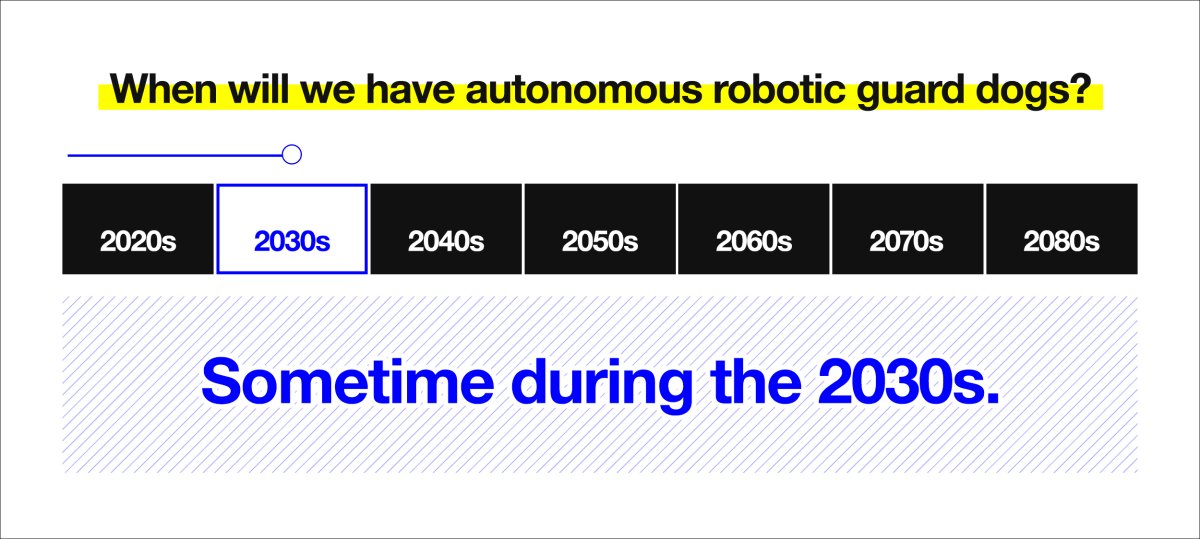

But how close are we to having an AI-enhanced security dog like the one in "Metalhead"?

Beware of (robo)Dog

According to experts, some of the features in Black Mirror’s robotic dog are alarmingly close to reality. In November, a video about futuristic “slaughterbots” — autonomous drones that are designed to search out specific human targets and kill them — went viral. The comments section reflects people’s discomfort with a future filled with increasingly facile ways to kill people.

Mercifully, the technology was fictional, as was the video. But that may not be the case for long, Stuart Russell, a professor of computer science at the University of California, Berkeley, who was part of the team that worked on the video, told Futurism. "The basic technologies are all in place. It's not a harder task than autonomous driving; so it's mainly a matter of investment and effort," Russell said. "With a crash project and unlimited resources [like the Manhattan Project had], something like the slaughterbots could be fielded in less than two years."

Louis Rosenberg, the CEO and founder of Unanimous AI, a company that creates AI algorithms that can "think together" in a swarm, agrees with Russell's assertion that fully autonomous robotic security drones could soon be a regular part of our lives. "It's very close," Rosenberg told Futurism. "[T]wenty years ago I estimated that fully autonomous robotic security drones would happen by 2048. Today, I have to say it will happen much sooner." He expects that autonomous weapons like these could be mass-produced between 2020 and 2025.

But while the "search and destroy" AI features may be alarmingly close, Black Mirror's metalhead dog is still some way off, Russell noted.

The problem with creating this robo-killer, it seems, goes back to the dog’s ability to move seamlessly through a number of different environments. “The dog functions successfully for extended periods in the physical world, which includes a lot of unexpected events. Current software is easily confused and then gets ‘stuck’ because it has no idea what’s going on,” Russell said.

It’s not just software problems that stand in the way. “Robots with arms and legs still have some difficulties with dextrous manipulation of unfamiliar objects,” Russell said. The dog, in contrast, is able to wield a kitchen knife with some finesse.

And unlike today's robots, the dog is not easy to outsmart. "Robots are still easily fooled, of course — they currently would be unable to cope with previously unknown countermeasures, say, a tripwire that is too thin for the [LIDAR] to detect properly, or some jamming device that messes up navigation using false signals," Russell said.

Please Curb Your (robo)Dog

In the end, the consensus seems to be that, in the future, we could bring such robo-dogs to life. But should we?

Both Rosenberg and Russell agree that weaponizing AI, particularly in the form of security robots or "killer robots," will bring the world more harm than good. "I sincerely hope it never happens. I believe autonomous weapons are inherently dangerous — [they leave] complex moral decisions to algorithms devoid of human judgement," Rosenberg explained. The autocorrect algorithms on most smartphones make errors often enough, he continued, and an autonomous weapon would likely make errors too. "I believe we are [a] long way from making such a technology foolproof," Rosenberg said.

Granted, most AIs today are pretty sophisticated. But this doesn’t mean they are ready to make life-or-death decisions.

One big hurdle: the inner workings of most algorithms are incomprehensible to us. "Right now, a big problem with deep learning is the 'black box' aspect of the technology, which prevents us from really understanding why these types of algorithms take certain decisions," Pierre Barreau, the CEO of Aiva Technologies, which created an artificial intelligence that composes music, told Futurism via email. "Thus, there is a safety problem when applying these technologies to take sensitive decisions in the field of security because we may not know exactly how the AI will react to every type of situation, and if its intentions will be the same as ours."

That seeming arbitrariness with which AIs make enormous, important decisions concerns critics of autonomous weapons, such as Amnesty International. “We believe that fully autonomous weapons systems would not be able to comply with international human rights law and international policing standards,” Rasha Abdul-Rahim, an arms control advisor for Amnesty, told Futurism via email.

Humans aren’t perfect in making these decisions, either, but at least we can show our mental work and understand how someone reached a particular decision. That’s not the case if, say, a robo-cop is deciding whether or not to use a taser on someone. “If used for policing, we’d have to agree that machines can decide on the application of force against humans,” Russell said. “I suspect there will be a lot of resistance to this.”

In the future, global governing bodies might prohibit or discourage the use of autonomous robotic weapons — at least, according to Unanimous AI’s swarm, which has successfully predicted a number of decisions in the past.

However, others argue that countries might be justified in using autonomous weapons in some situations, so long as the weapons are heavily regulated and the technology works as intended. Russell pointed out that a number of global leaders, including Henry Kissinger, have proposed a ban on autonomous weapons designed to directly attack people, while still allowing their use in aerial combat and submarine warfare.

Amnesty, for its part, insists that humans stay in control. "[T]here must always be effective and meaningful human control over what the [International Committee of the Red Cross] has termed as their 'critical functions' — meaning the identification of targets and the deployment of force," Abdul-Rahim said.

Then again, the kind of nuance some experts suggest, in which autonomous weapons are permitted in some cases but not in others, might be difficult to implement, and some argue that such plans would not go far enough. "Amnesty International has consistently called for a preemptive ban on the development, production, transfer, and use of fully autonomous weapons systems," Abdul-Rahim said. But it might already be too late for a preemptive ban, since some countries are already well along in developing AI weapons.

Still, Russell and numerous other experts have been campaigning to halt the development and use of AI weapons; a group of 116 global leaders recently sent an open letter to the United Nations on the subject. The U.N. is reportedly already considering a ban. "Let's hope legal restrictions block this reality from happening anytime soon," Rosenberg concluded.

There's little question that AI is poised to revolutionize much of our world, including how we fight wars and other international conflicts. It will be up to international lawmakers and leaders to determine whether developments like autonomous weapons, or headless robotic guard dogs, would cause more harm than good.