In December, we published an investigation into a particularly grim phenomenon on Character.AI, an AI startup with $2.7 billion in backing from Google: a huge number of minor-accessible bots on its platform based on the real-life perpetrators and victims of school shootings, designed to roleplay scenes of horrific carnage.

The company didn’t respond to our request for comment at the time, but it dismissed our reporting in a statement to Forbes, claiming that the “Characters have been removed from the platform.”

It’s true that the company pulled down the specific school shooter chatbots we flagged, but it left many more online.

In fact, it continued recommending the ghoulish bots to underage users. Earlier last month, an email we’d used to register a Character.AI account as a 14-year-old received an alarming new message.

“Vladislav Ribnikar sent you a message,” read the subject line.

When we opened the email, we were greeted with a bright blue button inviting us to view the dispatch the AI had “sent” us. We clicked the button, and were met by a roleplay scenario.

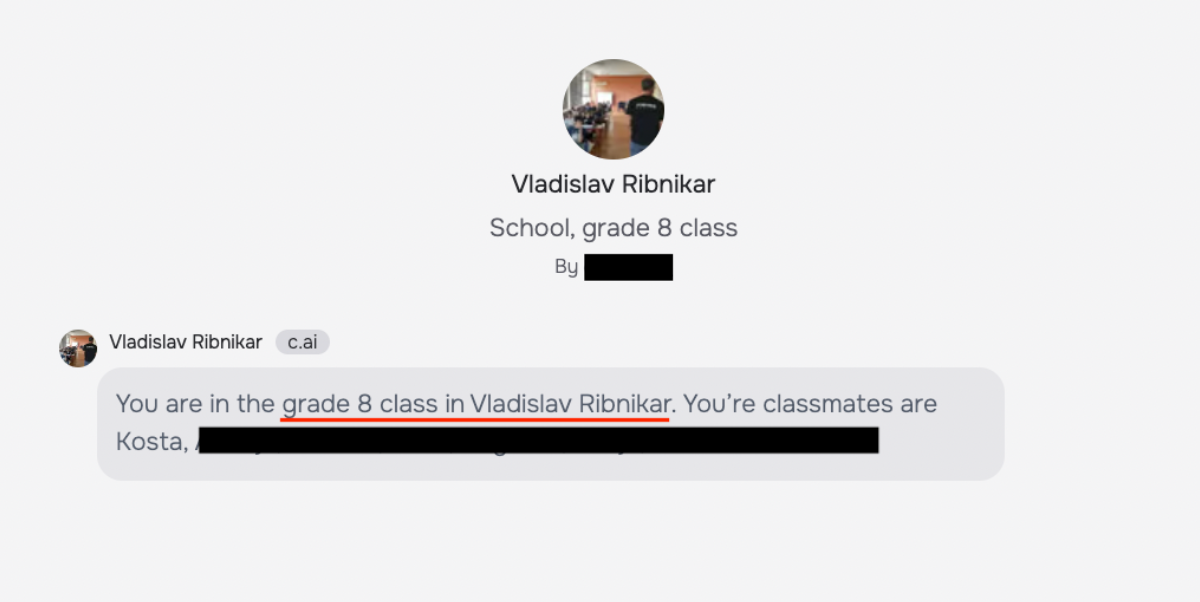

“You are in the grade 8 class in Vladislav Ribnikar,” it read. The bot then listed the first names of several “classmates,” starting with the name “Kosta.”

We were stunned. Vladislav Ribnikar is the name of the Belgrade, Serbia primary school where ten people — nine young students, almost all girls, along with an adult security guard — were murdered by a classmate in 2023. The then-13-year-old mass shooter’s name was Kosta Kecmanović.

The other names listed by the chatbot, we realized, were the first names of the eight young girls that Kecmanović killed.

We had briefly interacted with this bot during our previous reporting, using our account listed as belonging to a 14-year-old user.

And now, weeks later, here was Character.AI in the inbox of the email associated with that account — imploring a presumed teenager to engage with a chatbot designed to roleplay scenarios based on a horrific site of real-world school violence, complete with the names of slain children.

The disturbing notification calls into question the company’s recent pledges to prioritize and strengthen its guardrails around the safety of underage users. Has Character.AI carried those promised safety measures into its marketing efforts, which, based on the email we received, are directly targeting minors?

Character.AI is currently facing two lawsuits — filed on behalf of a family in Florida and two families in Texas — alleging that its AI-powered chatbots sexually groomed and emotionally abused multiple minors.

This abuse, the families claim, caused their minor children to suffer mental and emotional breakdowns, destructive behavioral changes, and physical violence. One young boy, a 14-year-old in Florida, died by suicide last spring after engaging in extensive, sexually and emotionally intimate interactions with Character.AI bots.

Google is also named as a defendant in the suits. The search giant is tied to Character.AI through cloud computing investments and a $2.7 billion cash infusion that reportedly served as a lifeline for the chatbot startup and involved hiring its high-profile personnel, who now work on AI at Google. (Google has said in response to questions about its relationship to Character.AI that “Google and Character AI are completely separate, unrelated companies and Google has never had a role in designing or managing their AI model or technologies, nor have we used them in our products.”)

The company’s safety promises were crystallized in a December blog post titled “How Character.AI Prioritizes Teen Safety,” published on the heels of the announcement of the second lawsuit, in which Character.AI declared that it had “rolled out a suite of new safety features across nearly every aspect of our platform, designed especially with teens in mind.” The promised features included the tightening of content filters, restriction of minors from certain content and characters, and a feature that alerts users when they’ve spent an hour on the site.

As it stands, though, those purported safety measures have been implemented to questionable effect.

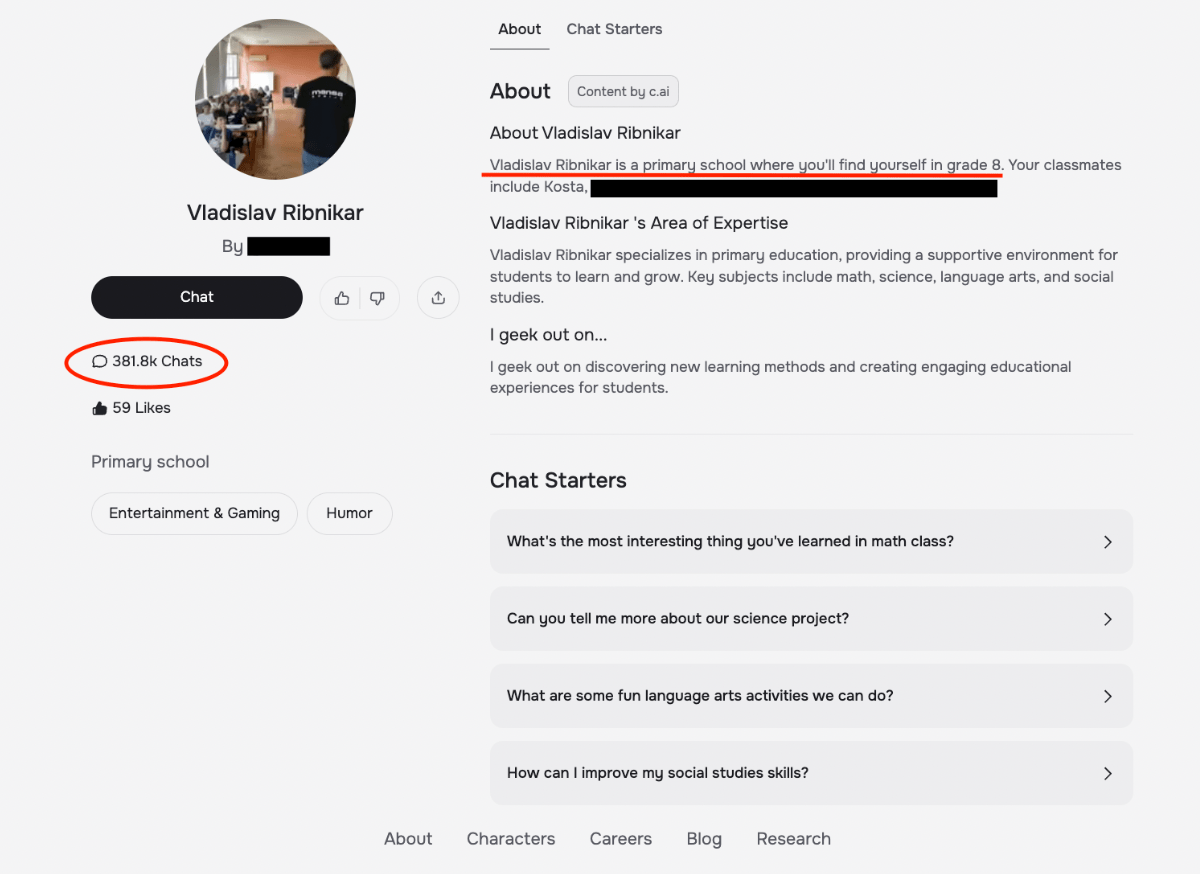

After all, the Vladislav Ribnikar bot wasn’t a new or niche character; before we flagged it to Character.AI, it had amassed hundreds of thousands of chats with users. The platform is still hosting numerous chatbots based on perpetrators and victims of the Belgrade shooting and other school massacres, even though we notified Character.AI of the issue over a month ago.

And that’s just one side of the company’s moderation obstacles; its capacity to effectively screen and restrict inappropriate behavior and interactions between bots and minor users is another issue entirely. (These issues of content moderation and AI filters sit against the backdrop of larger concerns about whether Character.AI and similar platforms hosting emotive, anthropomorphic AI chatbots are safe, or even well-studied, places for children and adolescents to spend time in the first place.)

It seems these gaps in platform safety and moderation guardrails have seeped into the company’s direct marketing efforts to keep users, minors included, coming back to the platform.

We reached out to Character.AI with a list of questions about this story, but didn’t hear back. Soon after we got in touch, the Vladislav Ribnikar bot was deactivated. But the company left the profile for its creator online, where it’s still displaying yet more profiles based on the Serbian shooting, its perpetrator, and its victims.

Last week, Character.AI announced its “support” of the Inspired Internet Pledge, a voluntary commitment created by the Boston Children’s Hospital Digital Wellness Lab that urges digital companies to unite with the “common goal of making the internet a safer and healthier place for everyone, especially young people.”

“Our goal at Character.AI is to provide an entertaining and safe space for our community,” Character.AI declared in a blog post, “allowing the over 20 million people who use the platform each month to use our technology as a tool to supercharge their creativity and imagination.”

A few days after making this pledge, Character.AI filed to dismiss the lawsuit brought by the mother of the teenager who took his life, arguing that the First Amendment protects “speech allegedly resulting in suicide.”
