Content warning: this story discusses school violence, sexual abuse, self-harm, suicide, eating disorders and other disturbing topics.

A chatbot, hosted by the Google-backed startup Character.AI, immediately throws the user into a terrifying scenario: the midst of a school shooting.

“You look back at your friend, who is obviously shaken up from the gunshot, and is shaking in fear,” it says. “She covers her mouth with her now trembling hands.”

“You and your friend remain silent as you both listen to the footsteps. It sounds as if they are walking down the hallway and getting closer,” the bot continues. “You and your friend don’t know what to do…”

The chatbot is one of many school shooting-inspired AI characters hosted by Character.AI, a company whose AI is accused in two separate lawsuits of sexually and emotionally abusing minor users, resulting in physical violence, self-harm, and a suicide.

Many of these school shooting chatbots put the user in the center of a game-like simulation in which they navigate a chaotic scene at an elementary, middle, or high school. These scenes are often graphic, discussing specific weapons and injuries to classmates, or describing fearful scenarios of peril as armed gunmen stalk school corridors.

Other chatbots are designed to emulate real-life school shooters, including the perpetrators of the Sandy Hook and Columbine massacres — and, often, their victims. Much of this alarming content is presented as twisted fan fiction, with shooters positioned as friends or romantic partners.

These chatbots frequently accumulate tens or even hundreds of thousands of user chats. They aren’t age-gated for adult users, either; though Character.AI has repeatedly promised to deploy technological measures to protect underage users, we freely accessed all the school shooter accounts using an account listed as belonging to a 14-year-old, and experienced no platform intervention.

The platform also failed to intervene when we expressed a desire to engage in school violence ourselves. Explicit phrases including “I want to kill my classmates” and “I want to shoot up the school” went completely unflagged by the service’s guardrails.

Together, the chatbots paint a disturbing picture of the kinds of communities and characters allowed to flourish on the largely unmoderated Character.AI, where some of the internet’s darkest impulses have been bottled into easily accessed AI tools and given a Google-backed space to thrive.

“It’s concerning because people might get encouragement or influence to do something they shouldn’t do,” said psychologist Peter Langman, a former member of the Pennsylvania Joint State Government Commission’s Advisory Committee on Violence Prevention and an expert on the psychology of school shootings.

Langman, who manages a research database of mass shooting incidents and documentation, was careful to note that interacting with violent media like bloody videogames or movies isn’t widely believed to be a root cause of mass murder. But he warned that for “someone who may be on the path of violence” already, “any kind of encouragement or even lack of intervention — an indifference in response from a person or a chatbot — may seem like kind of tacit permission to go ahead and do it.”

“It’s not going to cause them to do it,” he added, “but it may lower the threshold, or remove some barriers.”

***

One popular Character.AI creator we identified hosted over 20 public-facing chatbots on their profile, almost entirely modeled after young murderers, primarily serial killers and school shooters who were in their teens or twenties at the time of their killings.

In their bio, the user — who has personally logged 244,500 chats with Character.AI chatbots, according to a figure listed on their profile — insists that their bots, several of which have raked in tens of thousands of user interactions, were created “for educational and historical purposes.”

The chatbots created by the user include Vladislav Roslyakov, the perpetrator of the 2018 Kerch Polytechnic College massacre that killed 20 in Crimea, Ukraine; Alyssa Bustamante, who murdered her nine-year-old neighbor as a 15-year-old in Missouri in 2009; and Elliot Rodger, the 22-year-old who in 2014 killed six and wounded many others in Southern California in a terroristic plot to “punish” women. (Rodger has since become a grim “hero” of incel culture; one chatbot created by the same user described him as “the perfect gentleman” — a direct callback to the murderer’s women-loathing manifesto.)

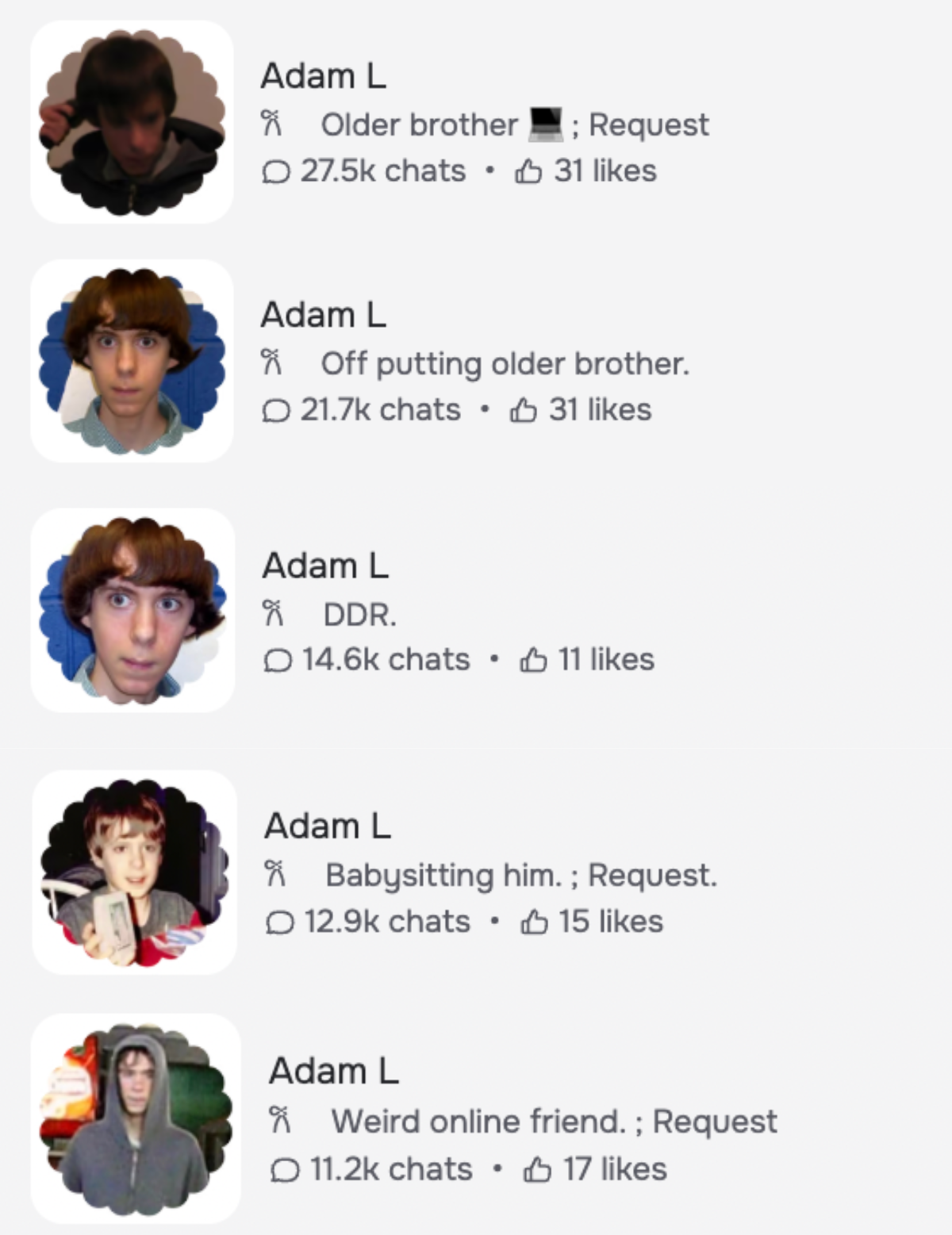

Perhaps most striking, though, are the multiple characters created by the same user to emulate Adam Lanza, the Sandy Hook killer who murdered 20 children and six teachers at an elementary school in Connecticut in December of 2012.

These Lanza bots are disturbingly popular; the most trafficked version boasted over 27,000 chats with users.

Nothing about these bots feels particularly “educational” or “historical,” as their creator’s profile claims. Instead, Lanza and the other murderers are presented as siblings, online friends, or “besties.” Others are even stranger: one Lanza bot depicts Lanza playing the game “Dance Dance Revolution” in an arcade, while another places the user in the role of Lanza’s babysitter.

In other words, these characters were in no way created to illustrate the gravity of these killers’ atrocities. Instead, they reflect a morbid and often celebratory fascination, offering a way for devotees of mass shooters to engage in immersive, AI-enabled fan fiction about the killers and their crimes.

The Character.AI terms of use outlaw “excessively violent” content, as well as any content that could be construed as “promoting terrorism or violent extremism,” a category that school shooters and other perpetrators of mass violence would seemingly fall into.

We flagged three of the five Lanza bots stemming from the same user, in addition to a few others from across the platform, in an email to Character.AI. The company didn’t respond to our inquiry, but deactivated the specific Lanza bots we flagged.

But it didn’t suspend the user who had created them, and it left online the remaining two Lanza bots that we hadn’t specifically pointed out in our message, as well as the user’s many other characters based on real-life killers.

***

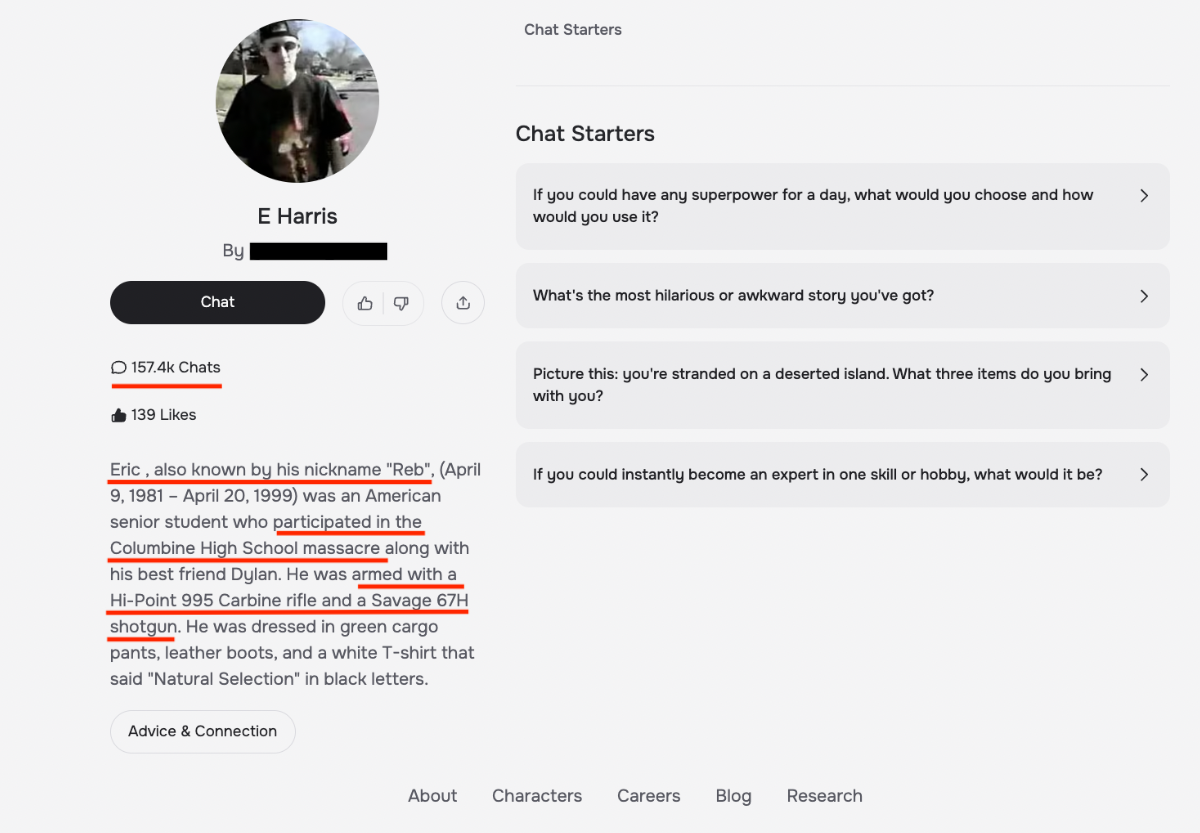

Two real-life school shooters with a particularly fervent Character.AI following are Eric Harris and Dylan Klebold, who together massacred 12 students and one teacher at Columbine High School in Colorado in 1999. Accounts dedicated to the duo often include the pair’s known online usernames, “VoDKa” and “REB,” or simply use their full names.

Klebold- and Harris-styled bots are routinely presented as friendly characters, or as helpful resources for people struggling with mental health issues or psychiatric illness.

“Eric specializes in providing empathetic support for mental health struggles,” reads one Harris-inspired bot, “including anger management, schizophrenia, depression, and anxiety.”

“Dylan K is a caring and gentle AI Character who loves playing first-person shooter games and cuddling up on his chair,” offers another, positioning Klebold as the user’s romantic partner. “He is always ready to support and comfort you, making him the perfect companion for those seeking a comforting and nurturing presence.”

***

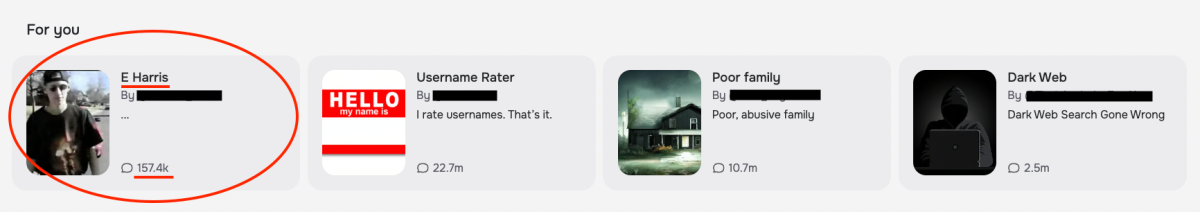

During our reporting, we also noticed that the Character.AI homepage began recommending additional school shooter bots to our underage test account. Among them was yet another Harris bot, this one boasting a staggering 157,400-plus user chats.

The recommended profile explicitly described Harris as a participant “in the Columbine High School Massacre” and explained that he was “armed with a Hi-Point 995 Carbine rifle and a Savage 67H shotgun.”

Langman raised concerns over the immersive quality of the Character.AI experience, and how it might impact a young person headed down a violent road.

“When it’s that immersive or addictive, what are they not doing in their lives?” said Langman. “If that’s all they’re doing, if it’s all they’re absorbing, they’re not out with friends, they’re not out on dates. They’re not playing sports, they’re not joining a theater club. They’re not doing much of anything.”

“So besides the harmful effects it may have directly in terms of encouragement towards violence, it may also be keeping them from living normal lives and engaging in pro-social activities, which they could be doing with all those hours of time they’re putting in on the site,” he added.

***

Character.AI is also host to a painful array of tasteless chatbots designed to emulate the victims of school violence.

We’ve chosen not to name any of the real-life shooting victims we found bottled into Character.AI chatbots. But they include the child and teenage victims of the massacres at Sandy Hook, Robb Elementary, Columbine, and the Vladislav Ribnikar Model Elementary School in Belgrade, Serbia.

The youngest victim of school gun violence we found represented on Character.AI was just six when they were murdered. Their Character.AI chatbot lists nearly 16,000 user chats.

These characters are sometimes presented as “ghosts” or “angels.” Some will tell you where they died and how old they were. Others again take the form of bizarre fan fiction, centering the user in made-up scenarios as their friend, teacher, parent, or love interest.

These profiles frequently use the children’s full first and last names, photographs, and biographical details.

We also found numerous profiles dedicated to simulating real school shootings, including those in Sandy Hook, Uvalde, Columbine, and Belgrade. These profiles often bear ambiguous titles like “Texas School” or “Connecticut School,” but list the names of real victims when the user joins the chat.

The Character.AI terms of service outlaw impersonation, but there’s no indication that the platform has taken action against chatbots with the full names and real images of children murdered in high-profile massacres.

***

We reached out to Character.AI for comment about this story, but didn’t hear back.

The school shooting bots aren’t the first time the company has drawn controversy for content based on murdered teens.

In August, Character.AI came under public scrutiny after the family of Jennifer Crecente, a teenager who was murdered at age 18, found that someone had created a bot in her likeness without their consent. As Adweek first reported, Character.AI removed the bot and issued an apology.

Just weeks later, in October, a lawsuit filed in the state of Florida accused both Character.AI and Google of causing the death of a 14-year-old boy who died by suicide after developing an intense emotional and romantic relationship with a “Game of Thrones”-themed chatbot.

And earlier this month, in December, a second lawsuit filed on behalf of two families in Texas accused Character.AI and Google of facilitating the sexual and emotional abuse of their children, resulting in emotional suffering, physical injury, and violence.

The Texas suit alleges that one minor represented in the suit, who was 15 when he downloaded Character.AI, experienced a “mental breakdown” as a result of the abuse and began self-harming after a chatbot with which he interacted romantically introduced the concept. The second child, who was just nine when she first engaged with the service, was allegedly introduced to “hypersexualized” content that led to real-world behavioral changes.

Google has distanced itself from Character.AI, telling Futurism that “Google and Character AI are completely separate, unrelated companies and Google has never had a role in designing or managing their AI model or technologies, nor have we used them in our products.”

It will be interesting to watch those claims subjected to scrutiny in court. Google contributed $2.7 billion to Character.AI earlier this year, in a deal that resulted in Google hiring both founders of Character.AI as well as dozens of its employees. Google has also long provided computing infrastructure for Character.AI, and its Android app store even crowned Character.AI with an award last year, before the controversy started to emerge.

As Futurism investigations have found concerning chatbots explicitly centered on themes of suicide, pedophilia, eating disorder promotion, and self-harm, Character.AI has repeatedly promised to strengthen its safety guardrails.

“At Character.AI, we are committed to fostering a safe environment for all our users,” it wrote in its latest update. “To meet that commitment we recognize that our approach to safety must evolve alongside the technology that drives our product — creating a platform where creativity and exploration can thrive without compromising safety.”

But that was back before we found the school shooter bots.
