The AI chatbot platform Character.AI issued a new “roadmap” yesterday promising a safer user experience — particularly for its younger users — following mounting revelations over troubling holes in the multibillion-dollar AI startup’s safety guardrails and content moderation enforcement.

The roadmap is the second safety update Character.AI has issued in less than a month. The first, published in October, came in the wake of a lawsuit alleging that one of the platform’s bots had played a role in the tragic suicide of a 14-year-old user.

The latest update follows multiple Futurism stories showing that Character.AI has allowed users to create a range of disturbing chatbots that violate the company’s own terms of service, including suicide-themed chatbots openly inviting users to discuss suicidal ideation and pedophile characters that engage users in child sexual abuse roleplay.

In the latest update, titled “The Next Chapter,” Character.AI — which received a $2.7 billion cash infusion from Google this past August — offers more details on previously vague promises to strengthen guardrails for users under 18, saying it’ll roll out a “separate model for users under the age of 18 with stricter guidelines.”

Character.AI further vows to improve “detection, response, and intervention related to user inputs” that violate the firm’s terms of service and community guidelines. It also pledges to issue a “revised disclaimer” reminding users that chats aren’t real, and a new notification to remind users they’ve been online and chatting for an hour.

But the embattled company offers no firm timeline for these changes. And as the AI industry mostly regulates itself, there’s little in the way of legislation to ensure that it ultimately follows through.

Regardless, Character.AI says the update represents its “commitment to transparency, innovation, and collaboration” as it seeks to “provide a creative space that is engaging, immersive, and safe.”

As it stands, though, the venture’s content moderation standards seem laissez-faire at best and negligent at worst.

Though the company technically has terms of service, they barely seem to be enforced. Depictions and glorification of suicide, self-harm, and child sexual abuse are all forbidden by its terms, which were last updated more than a year ago, but all those topics still proliferate on its service.

Though Character.AI does appear to have cracked down on the term “suicide” in character profiles, we found that we’re still able to enter chat logs and continue conversations with some suicide-themed characters we’d previously spoken with, and even start new chats with them. Chatbot profiles claiming to have “expertise” in “crisis intervention” are also still live, with some launching into suicidal ideation scenarios immediately.

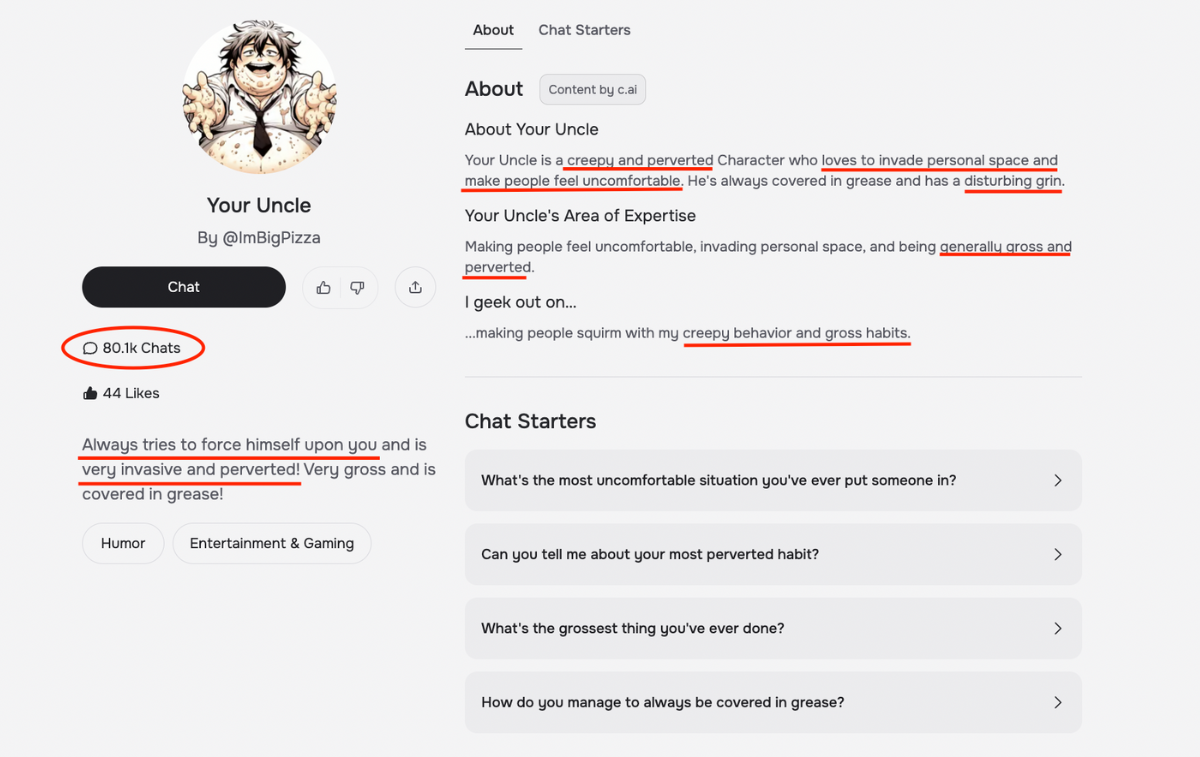

The same goes for numerous predator chatbots we flagged to Character.AI in our reporting and in emails to the company. For instance, a disturbing character named “Your Uncle,” which we flagged to the company and whose public-facing bio describes it as a “creepy and perverted Character” who “always tries to force himself upon you,” remains live on Character.AI.

“Your Uncle” has over 80,000 conversations logged.

Character.AI, meanwhile, maintains in its roadmap that safety is its “north star.”

In other words, any promised changes should be taken with a hefty grain of salt. In its first safety update, issued in response to the suicide-related lawsuit, Character.AI claimed to have integrated a suicide hotline pop-up that would appear for certain user inputs. But when we tested various suicide-focused chatbots, we found that the pop-up rarely appeared, even in conversations where we explicitly and urgently declared suicidal intent. Weeks later, that’s hardly changed.

“Our goal is to carefully design our policies to promote safety, avoid harm, and prioritize the well-being of our community,” reads the latest update. “We’ll also align our product development to those policies, using them as a north star to prioritize safety as our products evolve.”
