British researcher and Google DeepMind CEO Demis Hassabis, a luminary of AI research who won a Nobel Prize last year, is throwing cold water on his peers’ claim that AI has achieved “PhD-level” intelligence.

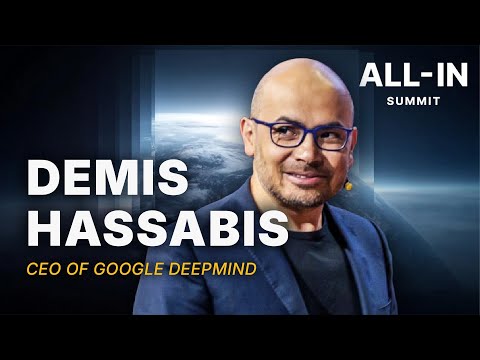

During a recent appearance at a summit put on by the “All-In” podcast, Hassabis was asked about what was holding us back from reaching artificial general intelligence (AGI), the hypothetical point at which an AI matches or surpasses human-level intelligence.

Hassabis said that current AI “doesn’t have the reasoning capabilities” of “great” human scientists who can “spot some pattern from another subject area” and apply it elsewhere.

He also charged that we’re vastly overestimating the capabilities of current AIs.

“So you often hear some of our competitors talk about these modern systems that we have today that are PhD intelligences,” he said.

“I think that’s nonsense,” he argued. “They’re not PhD intelligences. They have some capabilities that are PhD level, but they’re not in general capable.”

It was a clear shot across the bow at Google’s competitor OpenAI, and a notable reality check that flies in the face of the industry’s increasingly boisterous attempts to paint AI as right around the corner from surpassing human intelligence.

OpenAI claimed that its recently announced GPT-5 gave its ChatGPT chatbot “PhD-level” intelligence.

“With GPT-5, now it’s like talking to an expert, a legitimate PhD-level expert in anything, in any area you need,” OpenAI CEO Sam Altman claimed during the model’s announcement last month.

To back up its eyebrow-raising claim, the firm pointed to carefully selected benchmarks, arguing that the new model was far “more useful for real-world queries” than its predecessors.

However, as Hassabis pointed out last week, even the most advanced AI is still highly prone to hallucinations and fails at some extremely basic tasks.

“In fact, as we all know, interacting with today’s chatbots, if you pose a question in a certain way, they can make simple mistakes with even high school maths and simple counting,” Hassabis said.

That’s something that “shouldn’t be possible for a true AGI system,” he added.

While Hassabis’ jab was aimed squarely at OpenAI, it’s clear that his own company is in a similar boat.

Google DeepMind’s latest model, Gemini 2.5, still suffers from widespread hallucinations, much like OpenAI’s GPT-5. Versions of Gemini still frequently expose Google Search users to made-up facts and other demonstrably false information.

Hassabis argued that “core capabilities are still missing,” predicting that it would take another five to ten years and “probably one or two missing breakthroughs” to reach AGI.

For now, experts argue that large language models, by their very nature, are ill-equipped to match the intellect of a top-level human researcher.

“Fundamentally, there is a mismatch between what these models are trained to do, which is next-word prediction, as opposed to what we are trying to get them to do, which is to produce reasoning,” University of Cambridge machine learning professor Andreas Vlachos told New Scientist earlier this year.
