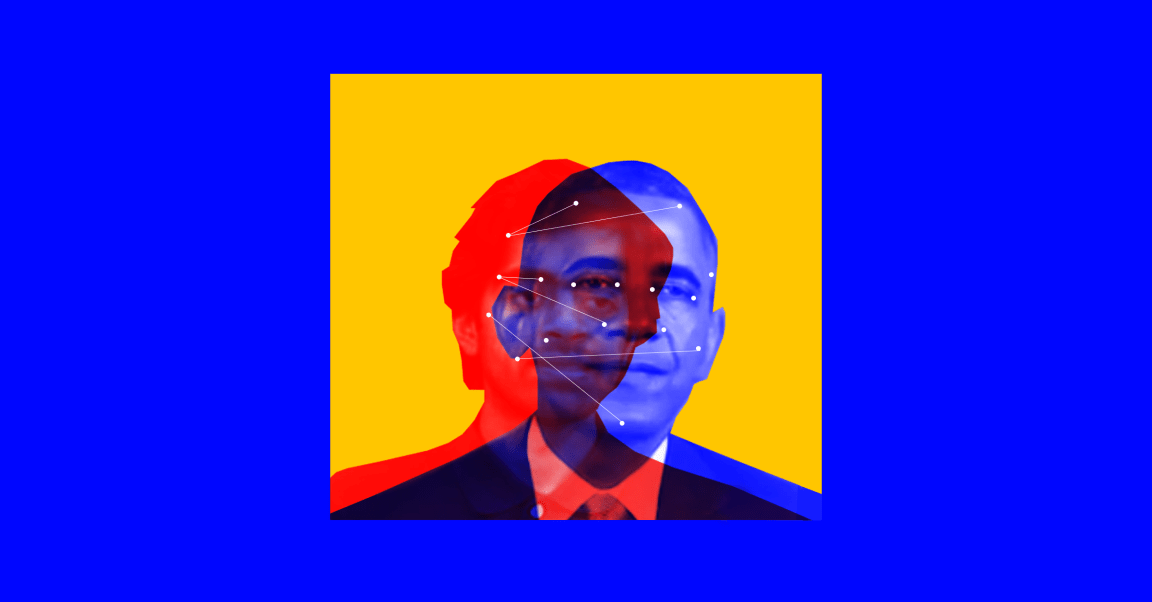

There are already fake videos on the internet, manipulated to make it look like people said things (or appeared in porn) that they never did. And now they’re about to get way better, thanks to some new tools powered by artificial intelligence.

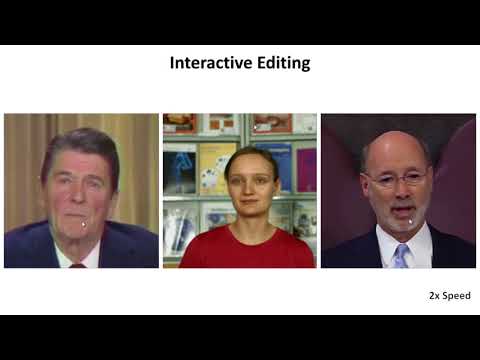

Instead of just moving the lips and face of the person in a source video, an AI-powered system can create photorealistic videos in which people sway, turn their heads, blink their eyes, and emote. Basically, everything an actor does and says in an input video is transferred onto the person in the video being altered.

According to the research, which will be presented at the computer graphics conference SIGGRAPH in August, the team ran a number of tests comparing its new algorithm to existing means of manipulating lifelike videos and images, many of which have been at least partially developed by Facebook and Google. Its system outperformed all the others, and participants in an experiment struggled to determine whether or not the resulting videos were real.

The researchers, who received some funding from Google, hope that their work will be used to improve virtual reality technology. And because the AI system only needs to train on a few minutes of source video to work, the team feels that its new tools will help make high-end video editing software more accessible.

The researchers also know their work might, uh, worry some folks.

“I’m aware of the ethical implications of those reenactment projects,” researcher Justus Thies told The Register. “That is also a reason why we published our results. I think it is important that the people get to know the possibilities of manipulation techniques.”

But at what point do we get tired of people “raising awareness” by further developing the problem? In the paper itself, there is just one sentence dedicated to ethical concerns: the researchers suggest that someone ought to look into better watermarking technologies or other ways to spot fake videos.

Not them, though. They’re too busy making it easier than ever to create flawless manipulated videos.