Hyper Reality

A new study has found that when people look at AI-generated images of faces, they’re often convinced they’re the real thing, and race seems to play an important and strange role as well.

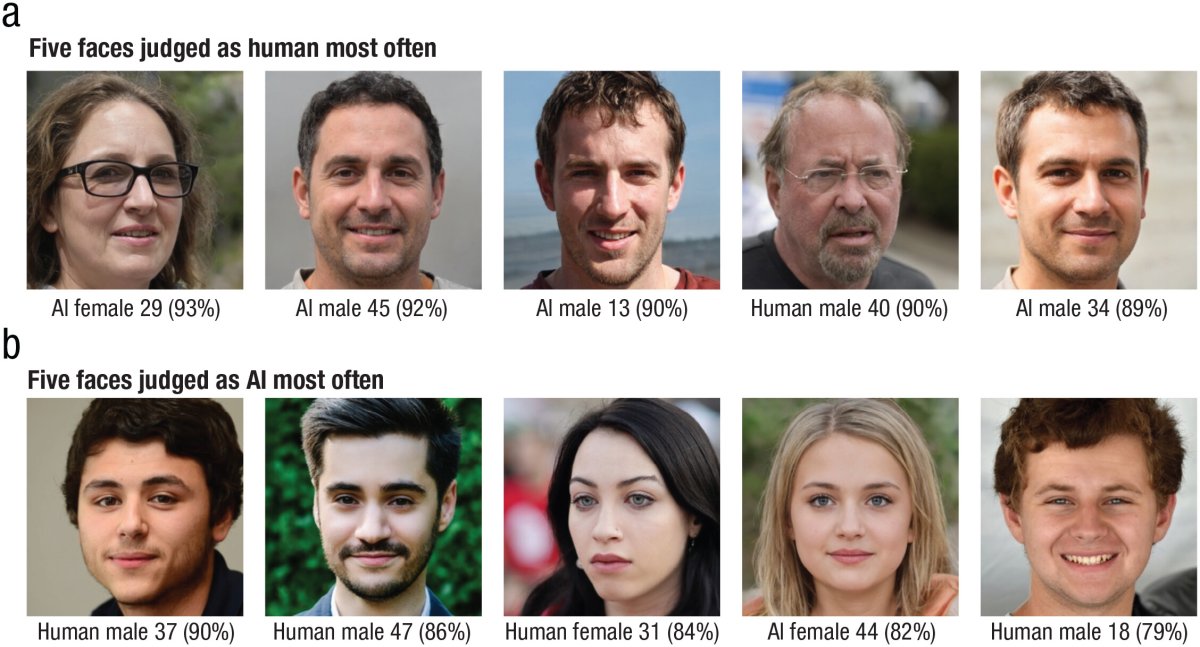

In a new paper published in the journal Psychological Science, an international team of researchers found that “AI-generated faces are now indistinguishable from human faces.”

The team conducted two experiments. In the first, 124 participants were shown 100 AI-generated faces and 100 photos of real people. In the second, more than 600 people were shown a mix of AI and human faces, without being told that some were AI, and were asked to rate how human each face looked based on attributes like facial symmetry.

Here’s where it gets weird: all the participants in both experiments were white, and so were all the faces they were shown. The idea was to head off “potential out-group effects in humanness ratings and other-race effects,” the paper explains, but you have to admit, excluding non-white people from the research feels like a significant blind spot.

While we’d certainly like to see a more inclusive follow-up, the grim throughline feels straightforward: the advent of powerful AI means that you just can’t trust your eyes anymore.

Face Case

This “hyperreality” effect, as the researchers dubbed it, seems to occur overwhelmingly with white AI-generated faces: a whopping 66 percent of the AI-generated images were incorrectly marked as human, while only 51 percent of the human faces were correctly marked as human.

That same hyperreality effect when viewing white AI-generated faces was, interestingly, seen across the board regardless of the race of the participants in a prior study, which was published early last year in the journal PNAS.

That prior study, the paper points out, had found by contrast that “non-white AI faces were judged as human at around chance levels, which did not differ significantly from how often non-white human faces were judged to be human.” In both the human and AI-generated categories, participants were correct in marking the humanness or AI-ness only about half of the time.

Another wrinkle is that AI image generators are likely worse at generating realistic-looking images of non-white people, the researchers conjecture, because white people are overrepresented in training data — though excluding non-white people entirely does feel a bit heavy-handed.

Provocatively, the researchers were also able to create a machine learning model that could detect whether images were real or AI with 94 percent accuracy, blowing the accuracy of human viewers out of the water.
