Benign Intervention

A team of researchers at the independent firm AMCI Testing drove a Tesla in Full Self-Driving mode for more than 1,000 miles and found its capabilities “suspect” at best, citing dangerous and unpredictable infractions such as running a red light.

They walked away unconvinced that the system, although impressive in some respects, is ready for full autonomy: a rebuff to Tesla CEO Elon Musk’s ambition of launching a driverless robotaxi service.

According to the firm, its testers were forced to intervene more than 75 times while Full Self-Driving was in control. On average, that’s once every 13 miles. Given that the typical US driver travels about 35 to 40 miles per day, that works out to roughly three interventions a day, an alarmingly frequent rate of requiring human intervention.

“What’s most disconcerting and unpredictable is that you may watch FSD successfully negotiate a specific scenario many times — often on the same stretch of road or intersection — only to have it inexplicably fail the next time,” AMCI director Guy Mangiamele said in a statement.

Road Warrior

As shown in three videos summing up the findings, Full Self-Driving did handle several types of scenarios admirably.

In one instance, while driving down a narrow two-way road lined with parked cars, the Tesla cleverly pulled into a gap on the right-hand side to let oncoming traffic pass.

But in an activity where safety should be paramount, Full Self-Driving needs to be next to flawless. It definitely was not that.

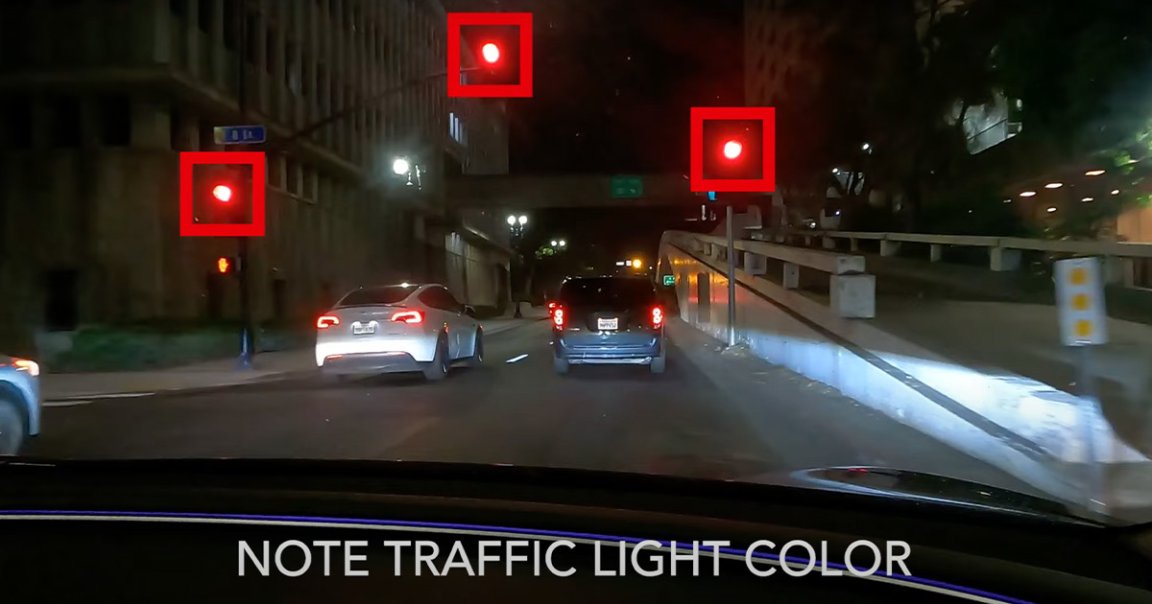

During a night drive in the city, the Tesla, with Full Self-Driving engaged, straight-up ran a clearly visible red light, seemingly because it was following other motorists who also ignored the signal.

In another dangerous scenario, Full Self-Driving failed to either recognize or follow a double yellow line around a curve. The Tesla veered into oncoming traffic, and only avoided a potential collision after the driver intervened. (This is almost exactly what Musk experienced first-hand way back in 2015, and it’s still an issue now.)

Taxi Truths

Musk has teased the launch of a driverless robotaxi service, which would reportedly use a new vehicle that fans have nicknamed the “Cybercab.” Yet if AMCI’s testing is anything to go by, the company’s autonomous driving tech may not be safe enough for the task.

It’s unclear, though, how Full Self-Driving’s safety record would stack up against those of competitors like Waymo, a veteran of the robotaxi industry.

But even with a human behind the wheel, Full Self-Driving poses significant risks, according to Mangiamele, because it “breeds a sense of awe that unavoidably leads to dangerous complacency.”

“When drivers are operating with FSD engaged, driving with their hands in their laps or away from the steering wheel is incredibly dangerous,” he added. “As you will see in the videos, the most critical moments of FSD miscalculation are split-second events that even professional drivers, operating with a test mindset, must focus on catching.”
