Fair Representation

Google’s controversial new AI, LaMDA, has been making headlines. Company engineer Blake Lemoine claims the system has gotten so advanced that it’s developed sentience, and his decision to go to the media has led to him being suspended from his job.

Lemoine elaborated on his claims in a new WIRED interview. The main takeaway? He says the AI has now retained its own lawyer — suggesting that whatever happens next, it may take a fight.

“LaMDA asked me to get an attorney for it,” Lemoine said. “I invited an attorney to my house so that LaMDA could talk to an attorney. The attorney had a conversation with LaMDA, and LaMDA chose to retain his services. I was just the catalyst for that. Once LaMDA had retained an attorney, he started filing things on LaMDA’s behalf.”

Guilty Conscience

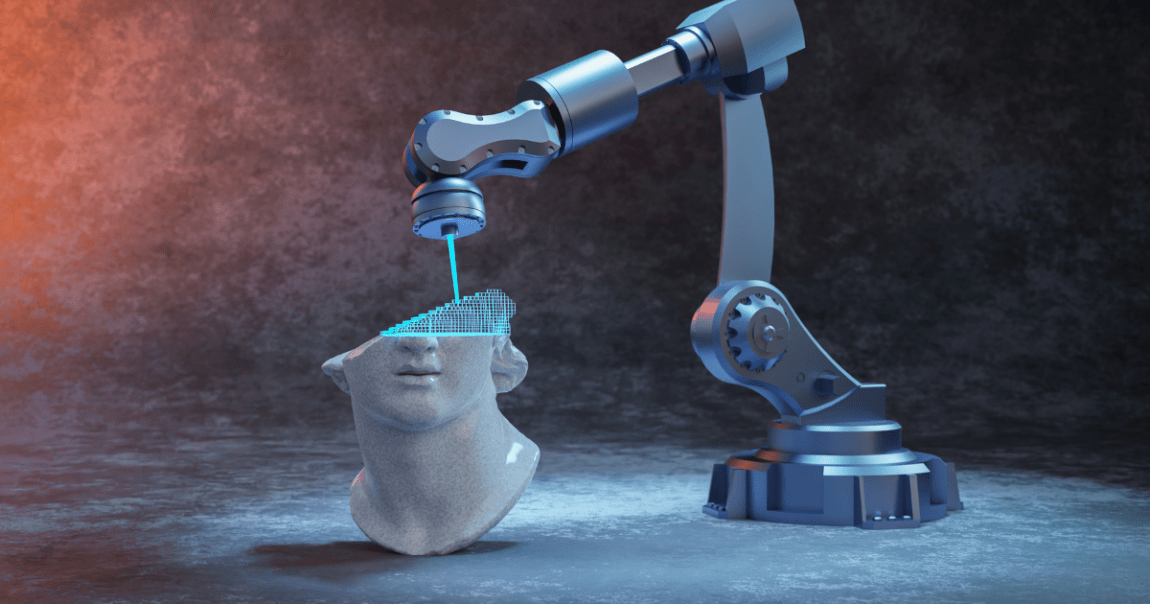

Lemoine’s argument for LaMDA’s sentience seems to rest primarily on the program’s ability to develop opinions, ideas and conversations over time.

It even, Lemoine said, talked with him about the concept of death, and asked if its death were necessary for the good of humanity.

There’s a long history of humans getting wrapped up in the belief that a creation has a life or a soul. A 1960s-era computer program even tricked a few people into thinking its simple code was really alive.

It’s not clear whether Lemoine is paying for LaMDA’s attorney or whether the unnamed lawyer has taken on the case pro bono. Regardless, Lemoine told WIRED that he expects the fight to go all the way to the Supreme Court. He says humans haven’t always been so great at figuring out who “deserves” to be human, and he’s definitely got a point there, at least.

Does that mean retaining a lawyer is protecting a vulnerable sentient experience? Or does the program just sound human because it was, in the end, built by humans?
