Control Z

Adobe, the company behind the ubiquitous photo-editing program Photoshop, just unveiled a new artificial intelligence tool capable of spotting whether images have been manipulated.

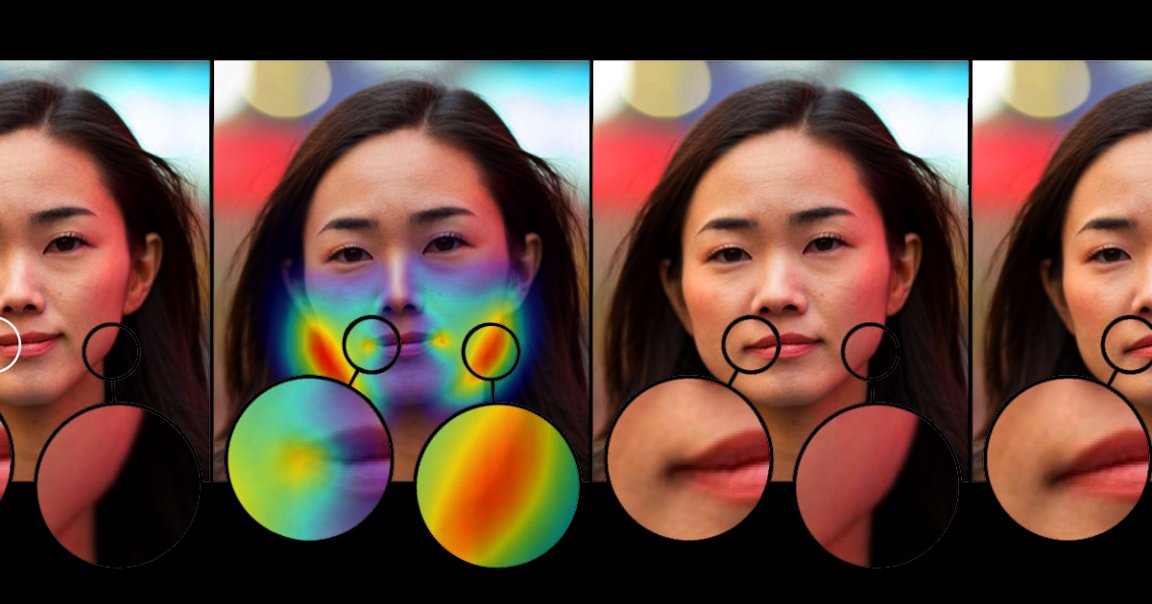

The research, a partnership with scientists at UC Berkeley that was funded by DARPA, focuses on edits made with Photoshop’s “face aware liquify” tool, which can subtly reshape and touch up parts of an image, according to an Adobe blog post. Adobe doesn’t plan to release the tool to the public, The Verge reports, but the work is a sign that the company is taking the spread of digitally altered, misleading media seriously.

User Testing

To train the edit-detecting neural net, the Adobe scientists fed it pairs of images — an undoctored photo of someone’s face and a version that had been tweaked with the liquify tool.

After enough training, the neural net could spot the edited face 99 percent of the time. That’s impressive because people attempting the same task guessed correctly only 53 percent of the time, just slightly better than chance, which illustrates how convincing deepfakes and other manipulated images can be.

“The idea of a magic universal ‘undo’ button to revert image edits is still far from reality,” Adobe scientist Richard Zhang said in the press release. “But we live in a world where it’s becoming harder to trust the digital information we consume, and I look forward to further exploring this area of research.”

READ MORE: Adobe’s prototype AI tool automatically spots Photoshopped faces [The Verge]
