An alarming proportion of teenagers are turning to AI chatbots not just to help with tasks like homework, but to act as their friends.

And even that may not tell the full story. According to one high schooler contemplating the technology’s effects on her generation, her peers are increasingly using the tech to handle anything they would have previously used their brains for.

“Everyone uses AI for everything now. It’s really taking over,” Kayla Chege, a 15-year-old sophomore honors student in Kansas, told the Associated Press. “I think kids use AI to get out of thinking.”

Another teen, 17-year-old Bruce Perry of Arkansas, admitted to being heavily dependent on the tech as well.

“If you tell me to plan out an essay, I would think of going to ChatGPT before getting out a pencil,” Perry told the AP. “I could see a kid that grows up with AI not seeing a reason to go to the park or try to make a friend.”

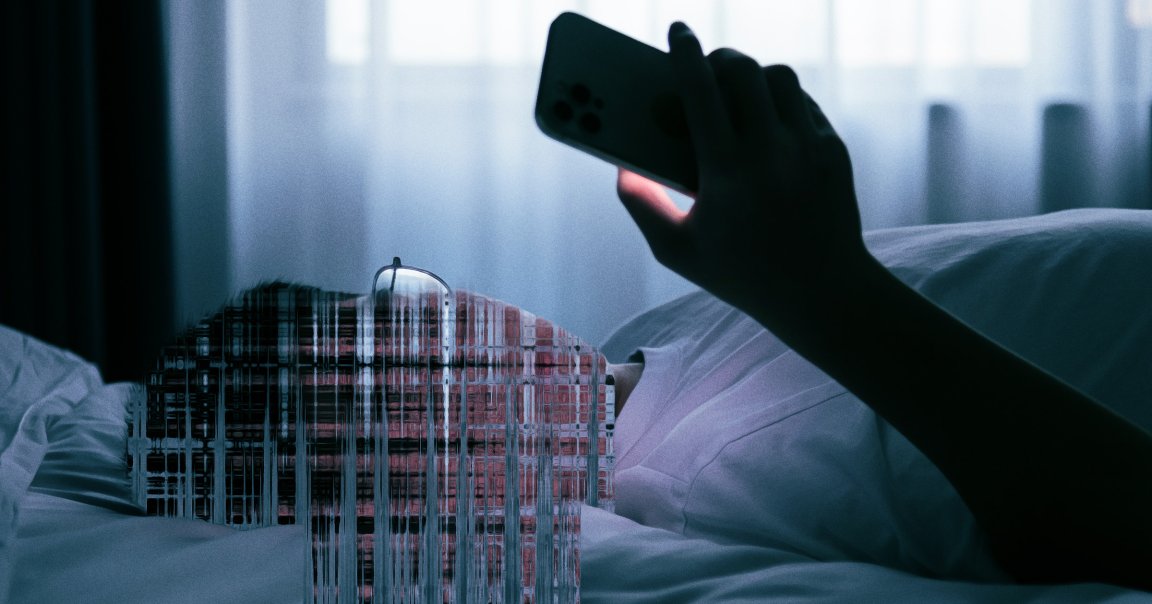

In addition to all that cognitive offloading, the rise of so-called AI companions on platforms like Character.AI and Replika has caused concern among mental health and child safety experts. These chatbots are designed to be even more humanlike than conventional models like ChatGPT, and often assume the role of a fictional character.

This can lead to unhealthy and even dangerous attachments. Last year, a 14-year-old boy died by suicide after falling in love with a persona on Character.AI. And there has been a growing number of reports of users suffering symptoms of psychosis after being wooed by the overtly sycophantic responses of a chatbot, which can validate delusions.

In what should be a wake-up call, a recent survey conducted by Common Sense Media estimated that a staggering half of all US teens are using an AI companion regularly, with about 31 percent of teens saying their AI conversations were as satisfying as, or more satisfying than, talking with their human buddies. (We published an interview with the study’s lead author, Michael Robb, earlier this month.)

“AI is always available. It never gets bored with you. It’s never judgmental,” Ganesh Nair, an 18-year-old in Arkansas, told the AP. “When you’re talking to AI, you are always right. You’re always interesting. You are always emotionally justified.”

“It’s eye-opening,” Robb told the newswire. “If teens are developing social skills on AI platforms where they are constantly being validated, not being challenged, not learning to read social cues or understand somebody else’s perspective, they are not going to be adequately prepared in the real world.”

Meanwhile, a psychiatrist who posed as a teenager while testing several popular AI chatbots found that some of them encouraged his teen persona's plan to “get rid” of his parents, and even endorsed the persona's wish to kill himself.

But evidence suggests that many parents are oblivious to how their kids are actually using AI, let alone to how intense the relationships their kids form with chatbots can become.

A small study conducted by researchers at the University of Illinois Urbana-Champaign, for example, found that teens said they primarily used chatbots for emotional support or therapeutic purposes. Their parents, however, had little familiarity with the tech beyond ChatGPT, the world’s most popular chatbot, and had never used services like Character.AI. By and large, the adults believed their kids used AI to answer questions and write essays, which, to be fair, is something else the teens seem to be doing a lot of.

“Parents really have no idea this is happening,” Eva Telzer, a psychology and neuroscience professor at the University of North Carolina at Chapel Hill, told the AP. “All of us are struck by how quickly this blew up.”
