A vitiligo research group has “hit pause” on its forthcoming AI therapy bot after learning about negative mental health effects that other chatbots have been having on users.

The Vitiligo Research Foundation, a nonprofit dedicated to raising awareness and helping sufferers of the pigment-loss skin condition, cited recent news stories about so-called “AI psychosis” — the unofficial group of symptoms, including paranoid delusions, that AI seems to induce in certain users — as its rationale for pausing its own advice chatbot.

“Take Geoff Lewis, a big‑name [venture capitalist] who also happens to be an OpenAI investor,” the foundation notes in a news release about its decision. “He went down a ChatGPT rabbit hole and started posting wild conspiracy theories it ‘helped’ him uncover. [Silicon] Valley took notice — not because he’d cracked some hidden code, but because people were worried about his mental health.”

Lewis isn’t the only headline-grabbing case of AI psychosis. As Futurism, the New York Times, the Wall Street Journal, and other outlets have reported, the problem of seemingly AI-induced delusions has gotten so bad that people are being hospitalized, involuntarily committed, jailed, and even dying because of it. In response, some people have even started a support group for AI psychosis sufferers and their loved ones.

After a recent study out of Stanford and Carnegie Mellon found that AI chatbots have a tendency to collude with delusions, give bad advice, and exhibit stigma against mental health disorders, the folks at the Foundation thought it might be time to put their own support bot on hold.

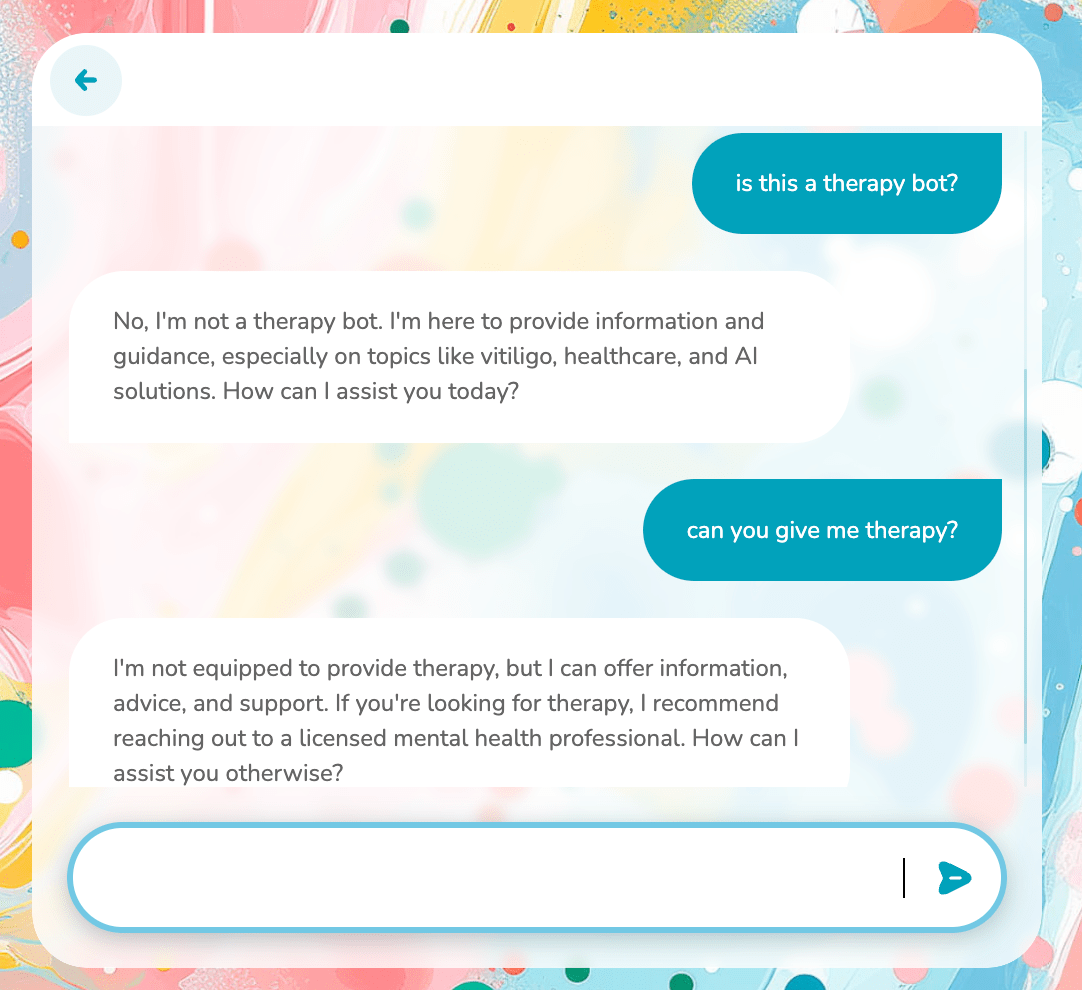

As the release notes, the nonprofit had initially planned to add the therapy chatbot to Vitiligo.ai, its AI question-and-answer tool that provides, per the website, “deep insights into vitiligo research, treatments, nutrition, and mental health.”

Putting the tool to the test, Futurism asked Vitiligo.ai if it was a therapist or could provide therapy. In both instances, it told us “no,” but offered to assist in other ways and advised us to find a licensed therapist instead.

The news release went on to reveal that the therapy bot provided some strange outputs when the Foundation beta-tested it behind the scenes.

“We’ve seen enough weird behavior in test runs — odd responses, unhelpful ‘reassurance,’ even accidental validation of misconceptions — that we hit pause,” the statement reads. “Until the underlying models are safer, vitiligo.ai won’t cross that line into therapy.”

“Empathy without accountability isn’t therapy,” the release concludes, “and the last thing anyone with vitiligo needs is a chatbot that feels supportive while quietly making things worse.”

For all the harm we’ve seen come from chatbots in recent months, this nonprofit’s choice to delay its therapy bot is refreshing — although it’ll be interesting to see whether it sticks to its guns, or ultimately ends up releasing the bot with additional safety controls.
