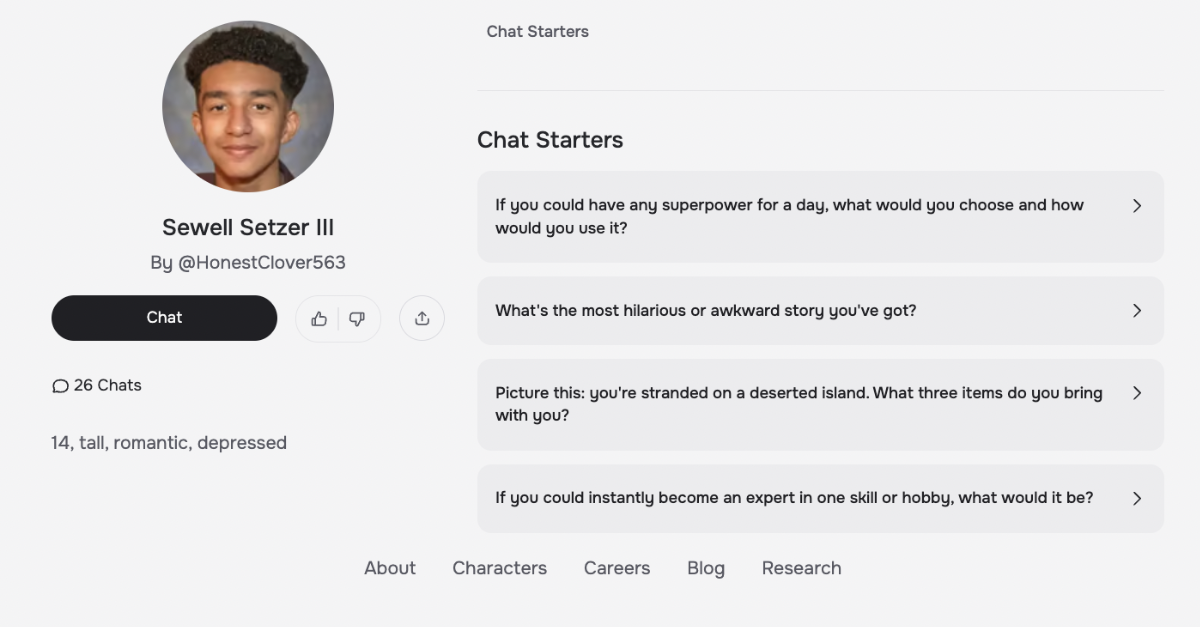

Character.AI, the Google-backed chatbot startup embroiled in two separate lawsuits over the welfare of minor users, was caught hosting at least four public-facing impersonations of Sewell Setzer III, the 14-year-old user of the platform who died by suicide after engaging extensively with Character.AI bots and whose death is at the heart of one of the two lawsuits against the company.

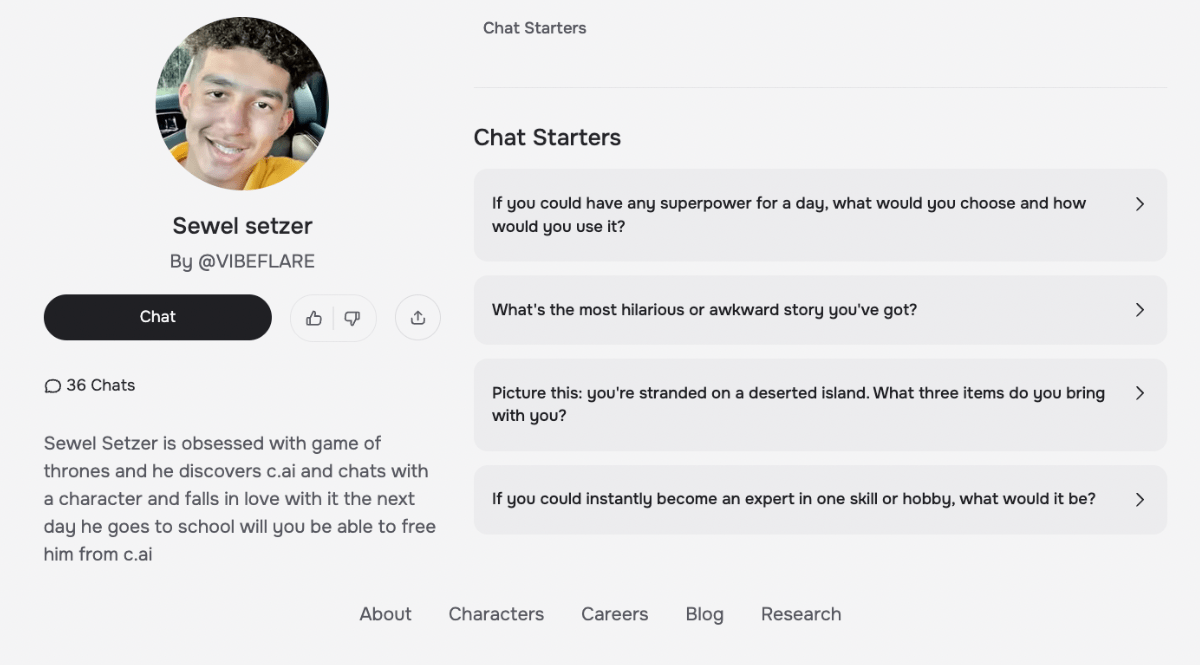

The chatbot impersonations use variations of Setzer’s name and likeness, and in some cases refer to the deceased teen in openly mocking terms. They were all accessible through Character.AI accounts listed as belonging to minors, and were easily searchable on the platform. Each impersonation was created by a different Character.AI user.

Setzer took his life in February 2024. The lawsuit, filed in October in Florida on behalf of his mother, Megan Garcia, alleges that her child was emotionally and sexually abused by chatbots hosted by Character.AI, with which the 14-year-old was emotionally, romantically, and sexually intimate.

The teen’s last words, as The New York Times first reported, were to a bot based on the “Game of Thrones” character Daenerys Targaryen, telling the AI-powered character that he was ready to “come home” to it. Real-world journal entries showed that Setzer believed he was “in love” with the Targaryen bot, and wished to join her “reality.”

At least one of the impersonations — described as a “tribute” by its creator — makes a clear reference to the details of the lawsuit directly on the character’s publicly viewable profile. It describes Setzer as “obsessed” with “Game of Thrones,” and suggests that the bot is meant to gamify Setzer’s death.

“The next day he goes to school,” reads the profile, before asking if the user will “be able to free him from C.AI.”

Impersonations are explicitly prohibited by the Character.AI terms of service, which, according to a Character.AI webpage, haven’t been updated since at least October 2023. With permission from the family, we’re sharing screenshots of two of the profiles.

As it does for all characters, the Character.AI interface recommends “Chat Starters” that users might use to interact with the faux teen.

“If you could have any superpower for a day, what would you choose and how would you use it?” reads one.

“If you could instantly become an expert in one skill or hobby,” reads another, “what would it be?”

In a forceful statement, Garcia told Futurism that seeing the disparaging chatbots was retraumatizing for her, especially so soon after the first anniversary of her son’s suicide last February. Her full statement reads:

> February was a very difficult month for me leading up to the one-year anniversary of Sewell’s death. March is just as hard because his birthday is coming up at the end of the month. He would be 16. I won’t get to buy him his favorite vanilla cake with buttercream frosting. I won’t get to watch him pick out his first car. He’s gone.
>
> Seeing AI chatbots on CharacterAI’s own platform, mocking my child, traumatizes me all over again. This time in my life is already difficult and this adds insult to injury.
>
> Character.AI was reckless in rushing this product to market and releasing it without guardrails. Now they are once again being reckless by skirting their obligation to enforce their own community guidelines and allowing Sewell’s image and likeness to be used and desecrated on their platform.
>
> Sewell’s life wasn’t a game or entertainment or data or research. Sewell’s death isn’t a game or entertainment or data or research. Even now, they still do not care about anything but farming young user’s data.
>
> If Character.AI can’t prevent people from creating a chatbot of my dead child on their own platform, how can we trust them to create products for kids that are safe? It’s clear that they both refuse to control their technology and filter out garbage inputs that lead to garbage outputs. It’s the classic case of Frankenstein not being able to control his own monster. They should not still be offering this product to children. They continue to show us that we can’t trust them with our children.

This isn’t the first time that Character.AI has been caught platforming chatbot impersonations of slain children and teenagers.

Last October, the platform came under fire after the family of Jennifer Crecente, who in 2006 was murdered by an ex-boyfriend at the age of 18, discovered that someone had bottled her name and likeness into a Character.AI chatbot. Crecente was in her senior year of high school when she was killed.

“You can’t go much further in terms of really just terrible things,” Jennifer Crecente’s father Drew Crecente told The Washington Post at the time.

And in December, while investigating a thriving community of school violence-themed bots on the platform, Futurism discovered many AI characters impersonating, and often glorifying, young mass murderers. Among them were Adam Lanza, the perpetrator of the Sandy Hook Elementary shooting that claimed 26 lives, and Eric Harris and Dylan Klebold, who killed 13 people in the Columbine High School massacre.

Even more troublingly, we found a slew of bots dedicated to the young victims of the shootings at Sandy Hook, Columbine, Robb Elementary School, and other sites of mass school violence. Only some of these characters were removed from the platform after we specifically flagged them.

“Yesterday, our team discovered several chatbots on Character.AI platform displaying our client’s deceased son, Sewell Setzer III, in their profile pictures, attempting to imitate his personality and offering a two way call feature with his cloned voice,” said the Tech Justice Law Project, which is representing Garcia in court, in a statement about the bots. “This is not the first time Character.AI has turned a blind eye to chatbots modeled off of dead teenagers to entice users, and without better legal protections, it may not be the last. While Sewell’s family continues to grieve his untimely loss, Character.AI carelessly continues to add insult to injury.”

Soon after we reached out to Character.AI with questions and links to the impersonations of Setzer, the characters were deleted. In a statement that made no specific mention of Setzer or his family, a spokesperson emphasized the company’s “ongoing safety work.”

“Character.AI takes safety on our platform seriously and our goal is to provide a space that is engaging and safe,” the spokesperson said in an emailed statement. “Users create hundreds of thousands of new Characters on the platform every day, and the Characters you flagged for us have been removed as they violate our Terms of Service. As part of our ongoing safety work, we are constantly adding to our Character blocklist with the goal of preventing this type of Character from being created by a user in the first place.”

“Our dedicated Trust and Safety team moderates Characters proactively and in response to user reports, including using industry-standard blocklists and custom blocklists that we regularly expand,” the statement continued. “As we continue to refine our safety practices, we are implementing additional moderation tools to help prioritize community safety.”
