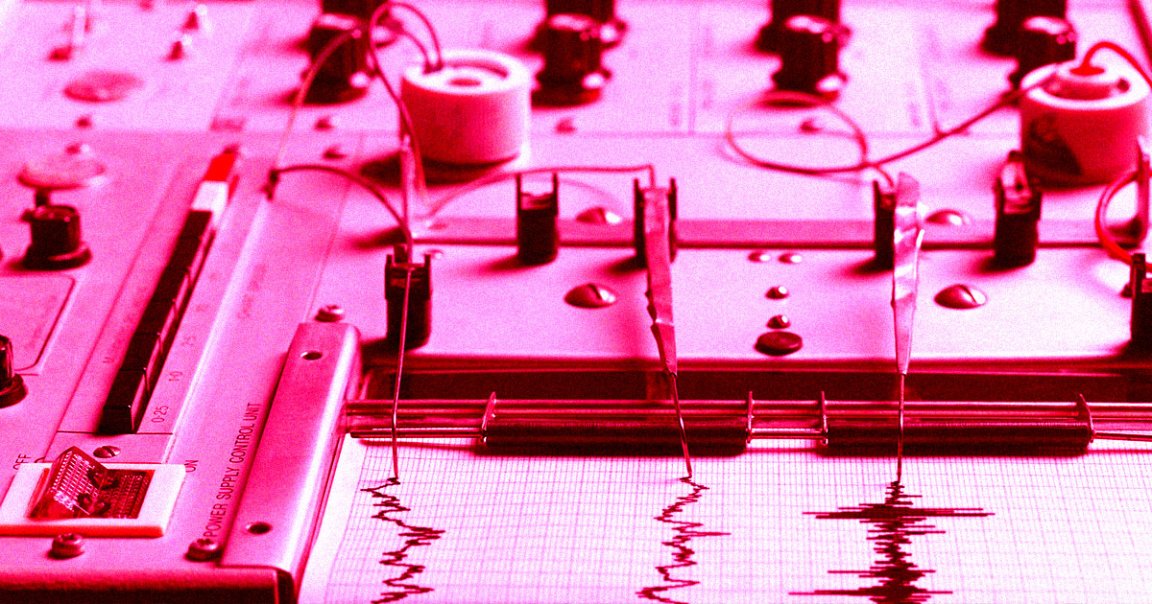

A polygraph test ostensibly measures a person’s breathing rate, pulse, blood pressure, and perspiration to figure out if they’re lying or not — though the 85-year-old technology has long been debunked by scientists.

Basically, the possibility of false positives and the subjectivity involved in interpreting results greatly undermine the usefulness of the polygraph as a lie detector. Tellingly, polygraph results are generally not admissible in US courts.

Because it’s 2024, researchers are now asking whether artificial intelligence might help. In a new study published in the journal iScience, a team led by University of Würzburg economist Alicia von Schenk found that yes, it just might. But as MIT Technology Review reports, the tool also led experimental subjects to make more accusations overall, in yet another warning about the far-reaching risks of replacing human intuition with algorithms.

First, the researchers asked participants to write down statements about their weekend plans. If they successfully lied about their plans without being found out, they were given a small financial reward.

The collected statements were then used to train an algorithm based on Google’s large language model BERT. The scientists found that it was capable of telling if a given statement was a lie with a success rate of 67 percent, a significant improvement over humans, who tend to only get it right 50 percent of the time.

Von Schenk and her colleagues then offered a separate group of volunteers a choice: pay a small fee to use the algorithm to detect lies, or rely on their own intuition.

Only a third of the volunteers were willing to use the tool — but they also became power users.

“When you make the active choice to rely on the technology, we see that people almost always follow the prediction of the AI… they rely very much on its predictions,” von Schenk told MIT Tech.

As a result, those who didn’t use the tool judged on average that only 19 percent of statements were lies. But those who used the tool flagged a whopping 58 percent of statements as lies, a massive spike in the accusation rate.

“This finding supports the truth-default theory and replicates commonly observed findings in the lie-detection literature, documenting that people typically refrain from accusing others of lying,” the paper reads. “One potential reason is that they are simply not very good at it and want to reduce the risks of paying the costs of false accusations for themselves and the accused. In support of this notion, in our study, people also did not succeed at reliably discerning true from false statements.”

But were these determinations accurate enough to ever be fully trustworthy? As MIT Tech points out, an AI lie detector would need a far better-than-average success rate to earn that kind of trust.
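To see why a 67 percent success rate may not be enough, here is a rough back-of-the-envelope sketch in Python. It is not from the study: it assumes, purely for illustration, that the detector is equally good at catching lies and recognizing truths (both at 67 percent), and the share of statements that are actually lies (`base_rate`) is a made-up input. The function name `accusation_stats` is likewise hypothetical.

```python
def accusation_stats(base_rate, sensitivity, specificity):
    """Return (share of statements flagged as lies, share of flags that are correct).

    base_rate:   assumed fraction of statements that really are lies
    sensitivity: assumed chance that a real lie gets flagged
    specificity: assumed chance that a true statement is left alone
    """
    flagged_lies = base_rate * sensitivity
    flagged_truths = (1 - base_rate) * (1 - specificity)
    flag_rate = flagged_lies + flagged_truths
    if flag_rate == 0:
        raise ValueError("nothing is flagged, so precision is undefined")
    precision = flagged_lies / flag_rate
    return flag_rate, precision


# Illustrative assumption: 67 percent on both lies and truths.
ACCURACY = 0.67

for base_rate in (0.5, 0.2):
    flag_rate, precision = accusation_stats(base_rate, ACCURACY, ACCURACY)
    print(f"lies make up {base_rate:.0%}: "
          f"{flag_rate:.1%} flagged, {precision:.1%} of flags correct")
```

Under these assumptions, if half of all statements are lies, about two in three accusations land on an actual liar. If only one in five statements is a lie, roughly one in three accusations is correct, meaning most of the people accused would be telling the truth.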

Despite the technology’s shortcomings, such a tool could serve an important function, given the proliferation of disinformation on the internet, a trend that is being accelerated by the use of AI.

Even AI systems themselves are becoming better at lying and deceiving, a dystopian trend that should encourage us to build up our defenses.

“While older machines such as the polygraph have a high degree of noise and other technologies relying on physical and behavioral characteristics such as eye-tracking and pupil dilation measurements are complex and expensive to implement, current Natural Language Processing algorithms can detect fake reviews, spam detection on X/Twitter, and achieve higher-than-chance accuracy for text-based lie detection,” the paper reads.

In other words, perhaps being willing to make more accusations could be a good thing — with plenty of caveats, of course.

“Given that we have so much fake news and disinformation spreading, there is a benefit to these technologies,” von Schenk told MIT Tech. “However, you really need to test them — you need to make sure they are substantially better than humans.”
