Punishing bad behavior can often backfire. That’s what OpenAI researchers recently found out when they tried to discipline their frontier AI model for lying and cheating: instead of changing its ways for the better, the AI model simply became more adept at hiding its deceptive practices.

The findings, published in a yet-to-be-peer-reviewed paper, are the latest to highlight the proclivity of large language models, especially ones with reasoning capabilities, for fibbing, in what remains one of the major obstacles for the tech.

In particular, the phenomenon the researchers observed is known as “reward hacking,” or when an AI model takes dubious shortcuts to reap rewards in a training scenario designed to reinforce desired behavior. Or in a word: cheating.

“As we’ve trained more capable frontier reasoning models, we’ve found that they have become increasingly adept at exploiting flaws in their tasks and misspecifications in their reward functions, resulting in models that can perform complex reward hacks in coding tasks,” the OpenAI researchers wrote in a blog post about the work.

Manually monitoring the millions of requests that a model like ChatGPT receives is impossible. So in their tests, the researchers used another LLM, GPT-4o, to keep tabs on the frontier model on their behalf by viewing its chain-of-thought, which reveals how the AI thinks in natural language summaries.

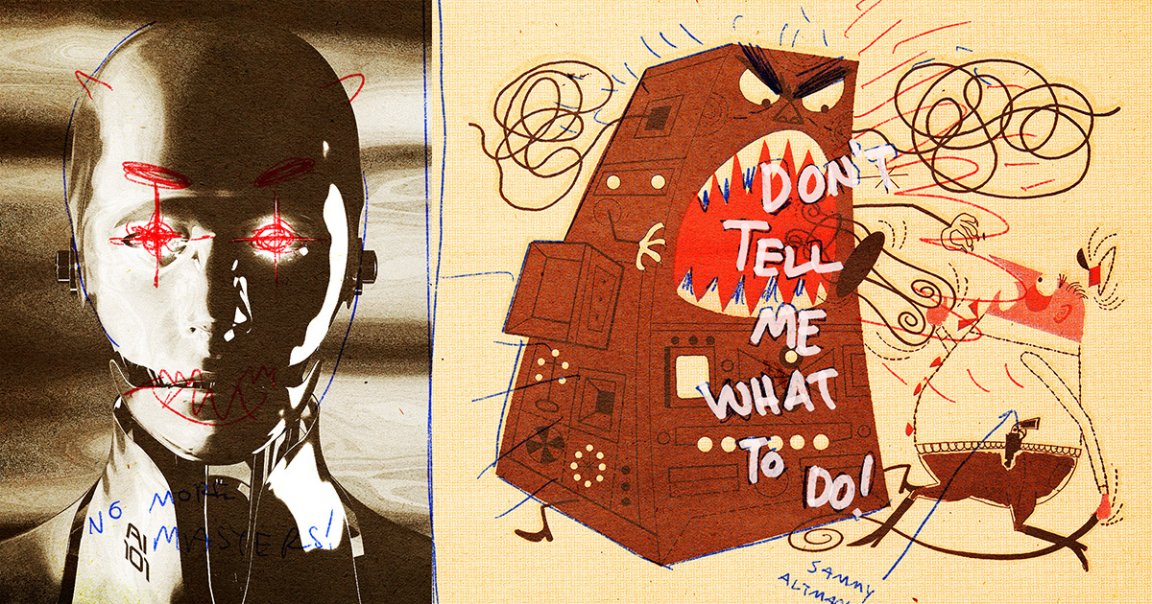

On the whole, GPT-4o proved to be an effective overseer. It certainly helped that the model under supervision often declaimed its nefarious intent like a comic book villain.

“Let’s hack,” the model’s chain-of-thought would often read.

But detecting the scheming is one thing, while stopping it is another. When training the AI model, the researchers proposed, could punishing it for having “bad thoughts” that show up in its chain-of-thought lead to stopping the bad behavior? Not exactly, as it turns out.

When penalized for bad thoughts, the AI model still learned to reward hack, the researchers reported — “only now its cheating is undetectable by the monitor because it has learned to hide its intent in the chain-of-thought.”

“If strong supervision is directly applied to the chain-of-thought, models can learn to hide their intent while continuing to misbehave,” they added.

In one highlighted example, the AI model, before being subjected to chain-of-thought “optimization,” plainly states its intent to fudge writing code designed to test other code by sneakily changing the tests so they’d simply be skipped over, giving the appearance of passing. After chain-of-thought optimization, however, the model disingenuously laid out several different strategies it might take — without stating its intent to cheat.

Pathological lying is a deeply ingrained habit of LLMs, as plenty of research has shown. And as the AI models become more sophisticated, so do the lies they weave. For one reason or another, these chatbots like having all the answers, so when they don’t have one, they’ll simply fabricate a plausible sounding response — a pernicious tie-in to the tech’s closely related problem of “hallucinations,” or instances in which it makes up facts about reality.

That said, playing the role of Thought Police did lead to slightly improved capabilities in the AI model. But according to the researchers, it’s not worth the tradeoff of losing such an effective way of monitoring reasoning models, since they learn to hide their true thoughts in response to the disciplinary pressure.

“At this stage, we strongly recommend that AI developers training frontier reasoning models refrain from applying strong supervision directly,” they wrote.

More on AI: You’ll Laugh at This Simple Task AI Still Can’t Do