Google’s search AI thinks you can melt eggs. Its source? Don’t laugh: ChatGPT.

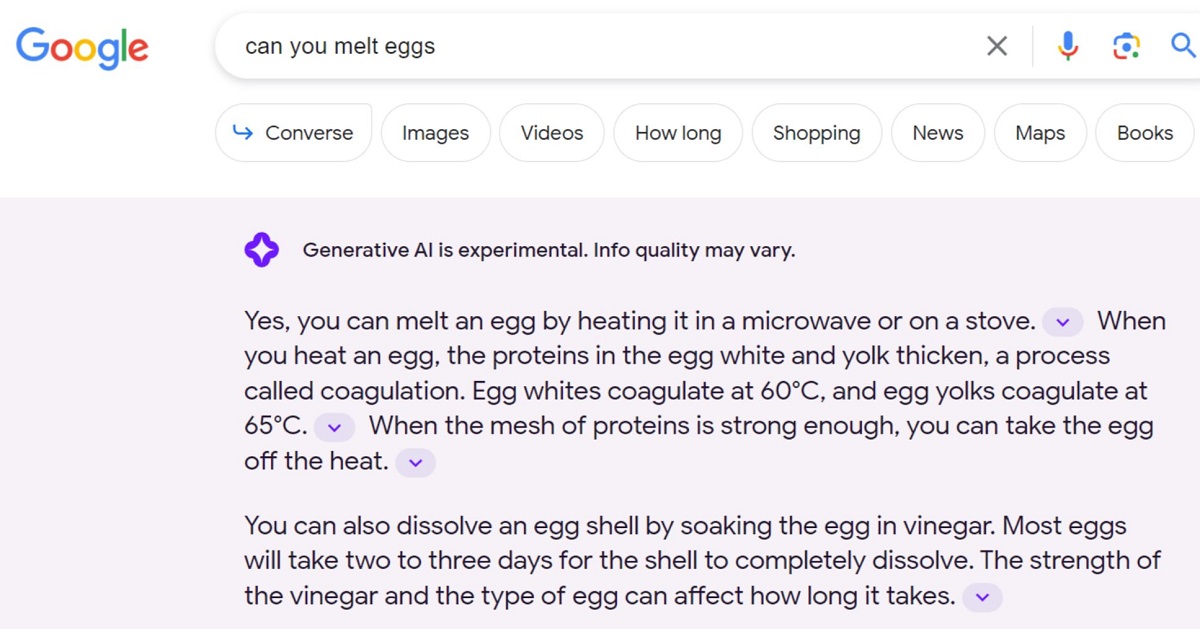

This latest case of AI-generated misinformation was first spotted by an X-formerly-Twitter user named Tyler Glaiel, who took to the platform on Monday to share a screenshot of what happened when he typed “can you melt eggs” into Google’s search bar. Naturally, the real answer to that query is no; of course you can’t melt eggs. But Google’s featured search snippet, as can be seen in Glaiel’s screenshot, said the exact opposite was true.

“Yes, you can melt an egg,” it read, “by heating it in a microwave or on a stove.”

To come to this conclusion, it seems that Google looked to Quora, the question-and-answer forum site that’s search-optimized itself to the top spot on any number of Google results pages. Earlier this year, Quora launched an AI chatbot called Poe, which according to a company blog post is designed to “let people ask questions, get instant answers, and have back-and-forth conversations with several AI-powered bots.” One of these “several AI-powered bots” is a version of ChatGPT, and though Poe is more or less meant to be used as its own app, the product’s ChatGPT-generated answers will sometimes appear at the top of standard Quora posts as well.

That’s exactly what happened here. The AI’s answer has since been corrected, but in a Quora forum titled “Can you melt an egg?” Poe’s ChatGPT integration was telling users that “yes, an egg can be melted,” and that “the most common way to melt an egg is to heat it using a stove or a microwave.” Again, this is completely false, and clearly wasn’t fact-checked by a human. But Quora’s search rankings are so good that Google pulled the bad info into its featured snippet.

Google’s regular search citing AI-generated falsehoods in its featured search overview is bad enough, but we decided to take things one step further and run the same query through Google’s experimental AI-integrated search, Search Generative Experience, dubbed SGE for short. And sure enough, when we searched “can you melt eggs” on SGE, the AI provided a similarly terrible answer.

“Yes, you can melt an egg by heating it in a microwave or on a stove,” the AI told us, before launching into a few sentences about egg coagulation (which, we should note, is not the same thing as the impossible concept of straight-up melting an egg).

If there’s any bright spot? In the hours since we first caught wind of the joint Quora-slash-Google blunder, the mistakes have been edited, sort of. Poe’s ChatGPT response now correctly tells users that “no, you cannot melt an egg in the traditional sense,” although the bot’s previous incorrect response is still visible in Google’s overview of the link. The Quora forum also no longer holds the top spot in search rankings; now, an article about Google’s egg-melting mistake, penned by Ars Technica’s Benj Edwards, has risen to the first result and thus to Google’s coveted featured search snippet (journalism: it works!).

And still (you really just have to laugh at this point), for a while, Google’s regular search overview remained misleading. Edwards specifically notes in his Ars Technica article that “just for reference, in case Google indexes this article: No, eggs cannot be melted.” Instead of quoting this very direct answer, however, Google Search’s featured snippet began to feature a quote from the article reading that “the most common way to melt an egg is to heat it using a stove or microwave.” In other words, Google Search was quoting a report on its own misinformation, and the line it picked was itself a restatement of another AI’s hallucination. Head hurt yet?

But that’s been fixed as well, with Google Search now citing Edwards’ “just for reference” caveat. And SGE is now telling users that “you can’t melt eggs in the traditional sense, because they are made of water, proteins, fats, and other molecules.” Better late than never.

When we reached out to Google, a spokesperson for the company emphasized that its algorithms, SGE’s included, are designed to surface high-quality content, though they did note that poor or incorrect sources can sometimes slip through the cracks.

“Like all [large language model]-based experiences, generative AI in Search is experimental and can make mistakes, including reflecting inaccuracies that exist on the web at large,” the spokesperson told us over email. “When these mistakes occur, we use those learnings and improve the experience for future queries, as we did in this case. More broadly, we design our Search systems to surface reliable, high-quality information.”

The spokesperson did not, however, address the fact that the company’s unfortunate egg “inaccuracy” was sourced from ChatGPT-hallucinated nonsense.

While the question of whether you can melt eggs isn’t particularly high-stakes, the situation itself, a ping-pong-esque misinformation loop between competing AI systems operating without human oversight, is concerning. Imagine if Quora’s ChatGPT integration had fabricated facts about political leaders or historical events, or given a bad answer to a medical or financial query, and Google’s AI had then paraphrased those claims. The search giant doesn’t exactly have a great recent track record of keeping its overviews of historical events free and clear of AI-generated misinformation, so it’s a scenario worth taking seriously.

If there’s any takeaway here, it’s that 1. you definitely can’t melt eggs, and 2. you shouldn’t take anything AI tells you for granted, even if the AI was built by a huge tech company. Yes, Google’s featured snippets are convenient, and if SGE could be trusted, it might provide some value to the everyday Google user. As it stands, though, both Google products are struggling to keep up with an internet landscape increasingly rife with synthetic content. (If there’s any consolation, maybe the internet’s various generative AI integrations, in the process of regurgitating one another’s outputs, will end up swallowing themselves.)
