A surprising number of organizations, from social media platforms to police surveillance systems, are very interested in what you look like. That means companies can learn a lot about you (your appearance, age, ethnicity, and more) whenever your face pops up. While this is usually done for the sake of targeted ads, it can also put people at risk of privacy violations or identity theft.

To help people hold onto (whatever remains of) their privacy, tools designed to trip up facial recognition AI have emerged. Real-world products like “Face Off Hats” (that’s a physical hat with a trippy pattern) and 3D-printed masks fool face-scanning software by presenting optical illusions to the cameras we encounter in everyday life.

But those tactics are designed to throw off cameras. What happens if a photo of you somehow makes it online?

Soon, there may be a filter to keep AI from spotting your face in the photos that slip past.

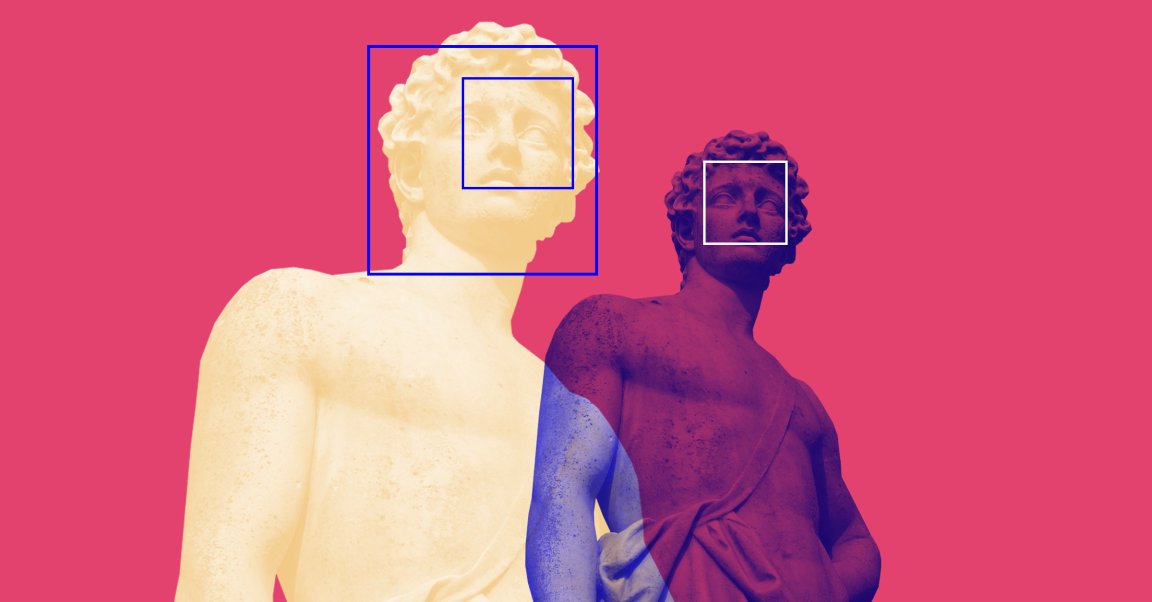

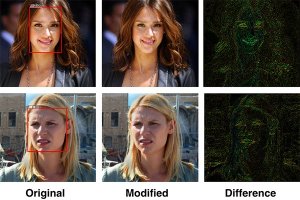

Engineers from the University of Toronto have built a filter that slightly alters photos of people’s faces to keep facial recognition software from realizing what it’s looking at. The AI-driven filter looks for specific facial features and changes certain pixels. People can barely see a difference, but face-detection AI scanning the image can’t even tell that it’s looking at a face.

(Credit: Avishek Bose)

To build its filter, the team pitted two neural networks against each other. The first AI system was tasked with identifying facial features from a set of several hundred photos, and the second algorithm was responsible for altering the photos to the point that they no longer looked like faces to the first. The two AI systems went back and forth, gradually getting better at their tasks, until the researchers determined that the filter was probably effective enough to work on commercial facial recognition software.
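The core trick, a tiny, bounded change to pixel values that flips a detector’s decision, can be illustrated with a toy sketch. This is not the Toronto team’s method: they trained a second neural network against the detector, whereas this sketch swaps in plain projected gradient steps against a fixed, hypothetical linear “face detector” (`face_score`, `perturb`, and every weight and parameter here are made up for illustration).

```python
import numpy as np

# Hypothetical toy "face detector": a fixed logistic-regression scorer over
# flattened pixel values in [0, 1]. Real detectors are deep networks.
rng = np.random.default_rng(0)
N_PIXELS = 64  # e.g. an 8x8 grayscale image, flattened

w = rng.normal(size=N_PIXELS)  # hypothetical detector weights
b = -0.5 * w.sum()             # bias chosen so a flat gray image scores exactly 0.5


def face_score(x: np.ndarray) -> float:
    """Probability the toy detector assigns to 'this is a face'."""
    return float(1.0 / (1.0 + np.exp(-(x @ w + b))))


def perturb(x: np.ndarray, eps: float = 0.05, step: float = 0.01, iters: int = 50) -> np.ndarray:
    """Projected gradient descent on the detector's logit: repeatedly nudge
    pixels to lower the face score, never moving any pixel more than eps."""
    x_adv = x.copy()
    for _ in range(iters):
        grad = w  # gradient of the logit (x @ w + b) with respect to x
        x_adv = x_adv - step * np.sign(grad)
        # Project back: stay within eps of the original and inside [0, 1].
        x_adv = np.clip(x_adv, x - eps, x + eps)
        x_adv = np.clip(x_adv, 0.0, 1.0)
    return x_adv


# A made-up image the toy detector confidently calls a face.
face = 0.5 + 0.02 * np.sign(w)
cloaked = perturb(face)

print(f"before: {face_score(face):.2f}, after: {face_score(cloaked):.2f}")
print(f"largest pixel change: {np.abs(cloaked - face).max():.3f}")
```

Running it, the detector’s score drops from above 0.5 to below 0.5 while no pixel moves by more than 0.05. In the researchers’ adversarial setup, the detector would also keep retraining on the altered images, which is what pushes the perturbing network to get better over time.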

In a test, the filter cut the face-detecting software’s success rate from spotting just about every face it was shown to recognizing only one in 200. The facial recognition AI also suddenly became unable to identify the emotion or ethnicity of the person pictured, capabilities that, for some reason, tech companies consider important.

This probably isn’t the kind of filter that will pop up on Instagram, especially since Instagram’s parent company, Facebook, is storing your intimate selfies to make sure no one else uploads them. But the engineers who developed it hope to release the filter as an app in the near future.