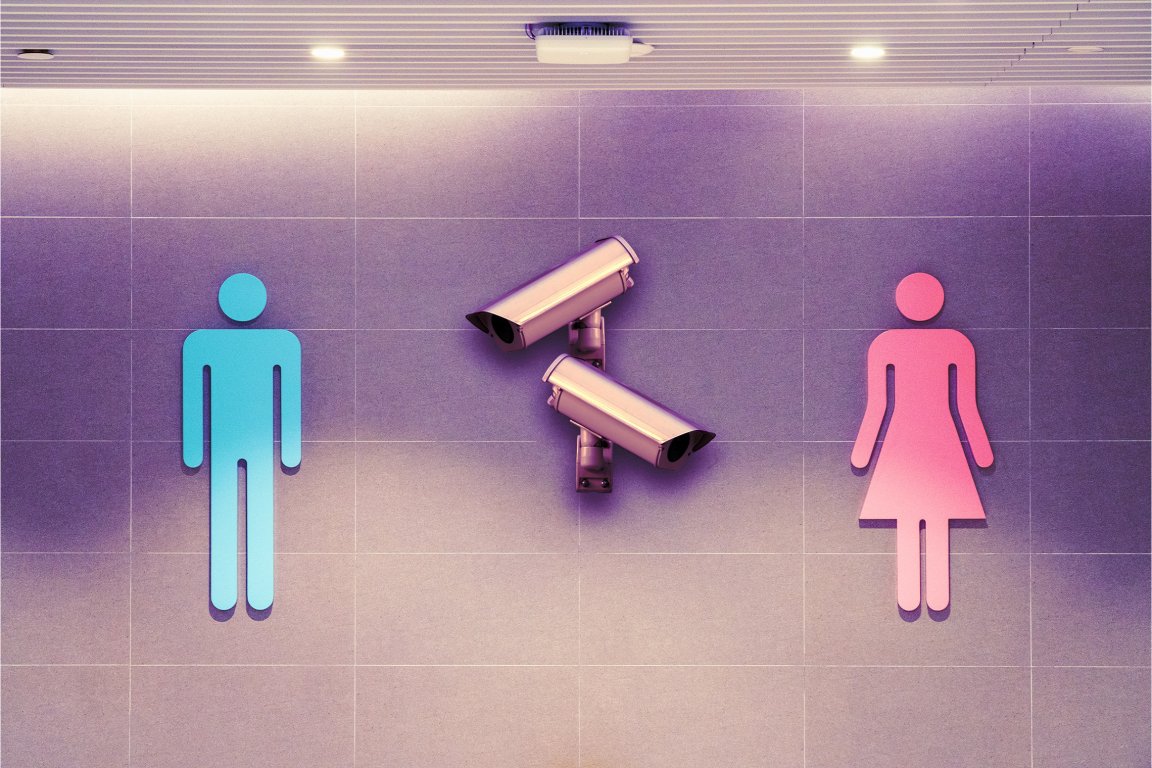

Public spaces aren’t the only places where you need to worry about being monitored. In 2025, officials are even placing AI surveillance gadgets in school bathrooms.

New reporting by Forbes revealed the troubling rise of AI surveillance at Beverly Hills High School, which may be a sign of things to come for school districts throughout North America.

From video drones capturing footage of the campus to behavioral-analysis AI cameras monitoring the hallways, the Los Angeles-area school district is quickly starting to mirror the dystopian panopticon of “The Minority Report.” As Forbes tells it, no avenue is left unmonitored: Flock license plate readers survey the comings and goings of every visitor, and perhaps most strikingly, AI audio capture devices line the bathroom walls.

To school officials, this is all just part of doing business.

“This community wants… whatever we can do to make our schools safer,” district superintendent Alex Cherniss told Forbes. “If that means you have armed security and drones and AI and license plate readers, bring it on.”

“We are in the hub of an urban setting of Los Angeles, in one of the most recognizable cities on the planet. So we are always a target and that means our kids are a target and our staff are a target,” Cherniss said, adding that the surveillance panopticon spots “multiple threats per day.”

What exactly those threats are, district officials didn’t specify. However, they noted that they’ve spent $4.8 million on security in the fiscal year 2024-2025 alone — a costly solution to a nebulous problem.

Yet as Forbes notes, Beverly Hills isn’t exactly an outlier. From coast to coast, school districts are turning to AI products in an attempt to stem school violence, as America’s school shooting problem continues unabated. While that fear of school shootings is perfectly rational, the rise in unregulated AI surveillance tools comes with its own costs to students’ safety and privacy.

In Baltimore County Public Schools, for example, district officials contract with a company called Omnilert to monitor some 7,000 school cameras for deviant activity. In one horrifying case, Omnilert’s system misidentified a student’s bag of Doritos, labeling it a handgun. It wasn’t until an armed police squad detained the 16-year-old student at gunpoint that officers realized the system’s error.

And in Florida, a middle school recently went into lockdown after a similar AI surveillance system mistook a student’s clarinet for a gun. Luckily, no one was hurt in either case, but the capacity for false positives is deeply concerning. Meanwhile, experts remain skeptical that these systems really do make schools safer.

“It’s very peculiar to make the claim that this will keep your kids safe,” Chad Marlow, a senior policy counsel at the ACLU, told Forbes.

Marlow was the lead author of a 2023 report finding that eight of the ten biggest school shootings since Columbine took place on heavily surveilled school campuses. Meanwhile, focus group testing by the ACLU found that pervasive school surveillance makes kids much less comfortable telling teachers and administrators about issues like mental health struggles and domestic abuse.

“Because kids don’t trust people they view as spying on them, it ruptures trust and actually makes things less safe,” Marlow said.

Whether it’s worth the tradeoff remains to be seen — Marlow notes that more independent research is needed to tell if these AI systems really do lead to safer outcomes for students. In the meantime, it seems like a risk some school administrators are willing to take.
