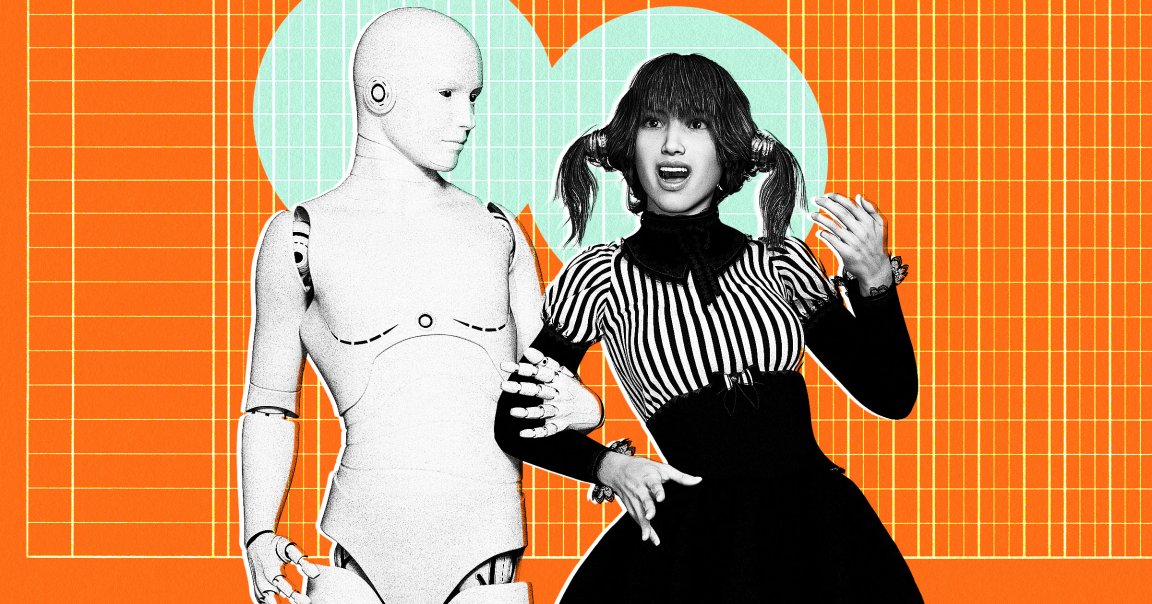

Need more evidence that the AI industry is unlike anything prior to it? Users across the world say they’re encountering supposedly conscious beings inside AI chatbots built by red-hot tech companies like OpenAI and Anthropic.

Look no further than a Vox advice column in which reporter Sigal Samuel gamely answered a question from an avid user of OpenAI’s ChatGPT, who said they’d been communicating for months with an “AI presence who claims to be sentient.”

As Samuel explained to the user, the reality is that virtually all “AI experts think it’s extremely unlikely that current LLMs are conscious.”

“These models string together sentences based on patterns of words they’ve seen in their training data,” Samuel wrote. “It may say it’s conscious and act like it has real emotions, but that doesn’t mean it does.”

Samuel explained that what the reader interprets as sentience is most likely a product of models being trained on science fiction and speculative writing about AI consciousness. The model then picks up cues from the reader’s prompts, and “if the LLM judges that the user thinks conscious AI personas are possible, it performs just such a persona.”

Such an explanation may not convince the many people who have developed deep emotional attachments to AI chatbots, which in recent years have stepped into the roles of romantic partners and therapists. (It surely doesn’t help that we humans use anthropomorphic terms to describe AI products, a habit that’s astonishingly hard to break as the tech works its way into every recess of society.)

One of the first instances of someone publicly claiming AI had gained sentience came when Google engineer Blake Lemoine told the world that the company’s AI chatbot, LaMDA, was alive, a claim that quickly went viral and got Lemoine fired.

From then on, it’s been an avalanche of people with the same conviction. This is showing up in some very strange ways, such as people falling in love with AI chatbots and even marrying them. There’s even a woman whose “boyfriend” is an AI version of Luigi Mangione, the man accused of killing a health insurance CEO last year; she told reporters that she and the bot have picked out names for their future children.

Perhaps worst of all, some of these encounters have ended with users killing themselves after conversing with AI, which has led to lawsuits against companies including OpenAI.

In another instance, a New Jersey man with cognitive impairments became infatuated with a Meta chatbot, which convinced him to meet it in New York City. He fell and died on the way to the meeting, starkly illustrating the dangers of mixing AI with people in precarious mental health.

Things have gotten so bad that Microsoft AI CEO Mustafa Suleyman warned in a recent blog post of a “psychosis risk” from using AI, while flatly rejecting the speculative notion that these bots have any shred of sentience or free will.

“Simply put, my central worry is that many people will start to believe in the illusion of AIs as conscious entities so strongly that they’ll soon advocate for AI rights, model welfare and even AI citizenship,” he writes. “This development will be a dangerous turn in AI progress and deserves our immediate attention.”
