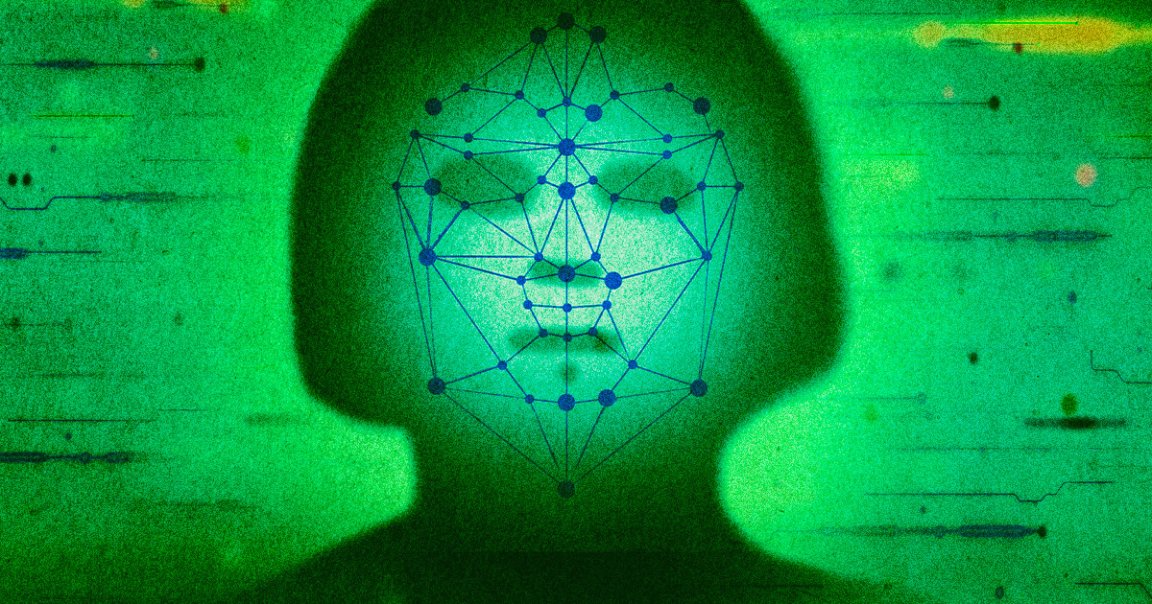

False Positive

A 61-year-old man says he was savagely beaten and gang raped in jail after facial recognition led to his false arrest for a robbery in Houston, Texas, The Guardian reports.

Harvey Eugene Murphy Jr. is now suing the retailers Macy’s and EssilorLuxottica for $10 million over their alleged use of facial recognition, according to the news outlet. The technology mistakenly identified him as a suspect in a 2022 robbery at a Sunglass Hut, a subsidiary of EssilorLuxottica, in which two robbers ran off with thousands of dollars of goods.

Murphy claims that an EssilorLuxottica employee, working with its partner Macy’s, used facial recognition software to misidentify him as one of the culprits and also fingered him for two more robberies — even though the quality of the images fed into the software was poor.

And in any case, Murphy says he was in Sacramento, California at the time of the incident. In October 2023, he came back to Texas to renew his license at a local department of motor vehicles, where police were alerted to his presence and arrested him, charging him with robbery.

He eventually ended up in Harris County jail. While he was held there, prosecutors dropped the charges against him when they confirmed he was nowhere near the Sunglass Hut in Houston.

But before his release, he says, three men beat and sexually assaulted him.

Bad Bot

Later, Murphy’s attorney discovered via police records that EssilorLuxottica and Macy’s had used facial recognition technology to connect him to the crime.

“The attack left him with permanent injuries that he has to live with every day of his life,” the lawsuit reads, according to The Guardian. “All of this happened to Murphy because the defendants relied on facial recognition technology that is known to be error prone and faulty.”

The news outlet reports this is the seventh publicly known case in America in which someone was misidentified and arrested due to faulty facial recognition technology.

In late 2023, the Federal Trade Commission (FTC) reprimanded Rite Aid over facial recognition software that falsely accused shoppers of shoplifting. Black, Hispanic and female customers were often the targets.

With Murphy’s case, other known false positives, and the FTC reprimand of Rite Aid, the evidence is adding up that facial recognition is just bad news.
