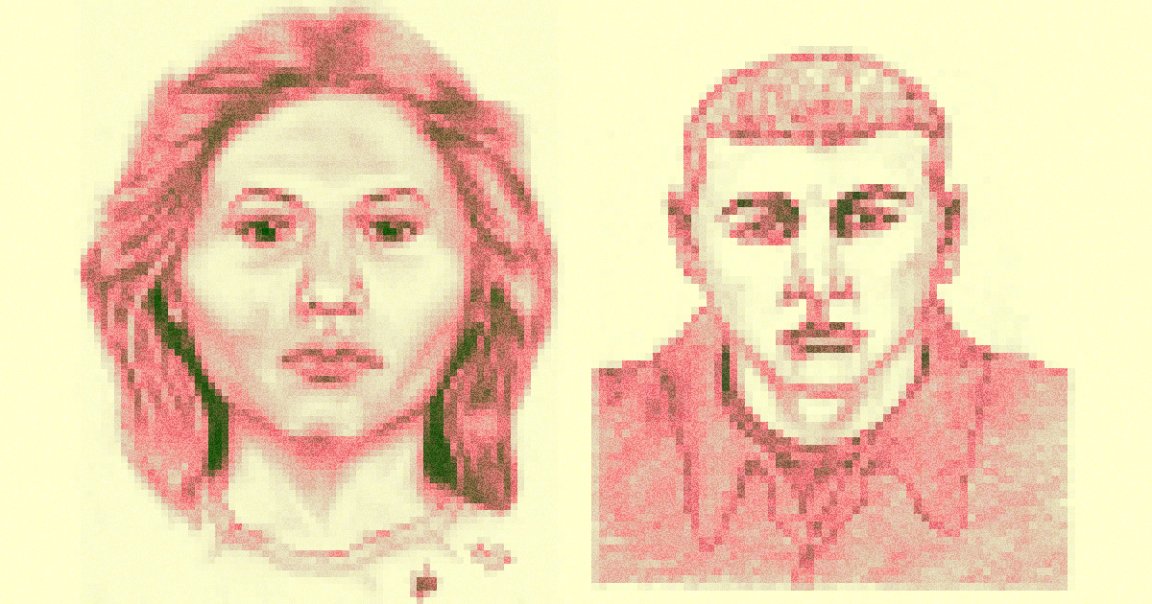

Suspect Portraits

There are a lot of potentially — shall we say — problematic uses for AI, but using one to generate police sketches? Now that’s just asking for trouble.

As Vice reports, independent developers have created a program called “Forensic Sketch AI-rtist” that uses OpenAI’s DALL-E 2 text-to-image AI to create “hyper-realistic” composite sketches of suspects.

The developers haven’t released the program yet, but are “planning on reaching out to police departments in order to have input data” to test it on, they told Vice in a joint email.

On the client side of things, the program works by presenting users with a fill-in template that asks for gender, age, skin color, hair, eyebrows, eyes, nose, beard, and even a description of a suspect’s jaw. Once filled in, all a user has to do is choose how many images they want and hit “generate profile.”

From there, the system generates sketches using OpenAI’s DALL-E. Voilà, justice!

Biases Upon Biases

But according to experts, this approach is inherently flawed and at odds with how humans actually remember faces.

“Research has shown that humans remember faces holistically, not feature-by-feature,” Jennifer Lynch of the Electronic Frontier Foundation told Vice.

“A sketch process that relies on individual feature descriptions like this AI program can result in a face that’s strikingly different from the perpetrator’s,” she added, noting that once a witness sees a generated composite, it may “replace” what they actually remembered, especially if it’s an uncannily lifelike one, like an AI’s.

And that doesn’t even touch on the AI program’s potential to exacerbate biases — especially racial — that can already be bad enough in human-made sketches. Worryingly, OpenAI’s DALL-E 2 has been well documented to produce stereotypical and outright racist and misogynistic results.

“Typically, it is marginalized groups that are already even more marginalized by these technologies because of the existing biases in the datasets, because of the lack of oversight, because there are a lot of representations of people of color on the internet that are already very racist, and very unfair,” explained Sasha Luccioni, a researcher at Hugging Face, a company and online community that hosts open-source AI projects. “It’s like a kind of compounding factor.”

The ultimate effect is a continuing chain of biases: that of the witness, of the cops questioning them, and now, of a flawed, centralized AI capable of propagating its inherent biases to potential customers all across the country. In turn, whatever it generates, regardless of accuracy, will only reinforce the prejudices of the people and systems involved in the process — and of the public with whom these images will be shared.
