Murphy Mnemonic

Researchers have found that they can incept false memories by showing subjects deepfaked clips of movie remakes that were never actually produced.

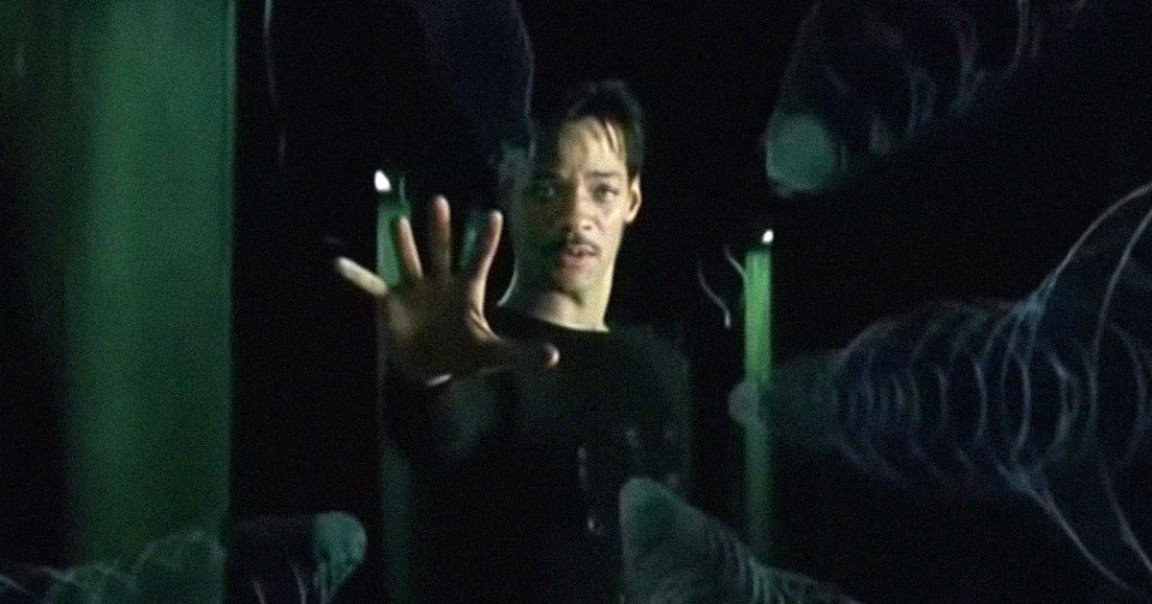

As detailed in a recent paper published in the journal PLOS One, deepfaked clips of made-up movies were convincing enough to trick participants into believing they were real. Some participants even rated the fake movies as better than the originals. The fakes included purported remakes of real films, such as a rebooted “The Matrix” starring Will Smith.

But the study did have an important caveat.

“However, deepfakes were no more effective than simple text descriptions at distorting memory,” the paper reads, suggesting that deepfakes aren’t entirely necessary to trick somebody into accepting a false memory.

“We shouldn’t jump to predictions of dystopian futures based on our fears around emerging technologies,” lead study author Gillian Murphy, a misinformation researcher at University College Cork in Ireland, told The Daily Beast. “Yes there are very real harms posed by deep fakes, but we should always gather evidence for those harms in the first instance, before rushing to solve problems we’ve just assumed might exist.”

“All were presented as if they were real and participants were asked if they had seen it and to rate how it compared to the original,” Murphy wrote on Twitter (@gillysmurf) on July 13, 2023.

Refakes

The researchers showed 436 participants deepfaked clips that ranged from Brad Pitt starring in “The Shining” to Charlize Theron filling in for “Captain Marvel.”

The effect is pretty convincing, as seen in a clip of Chris Pratt taking over for Harrison Ford in “Raiders of the Lost Ark,” shared by Murphy on Twitter.

An average of 49 percent of participants were fooled by the deepfaked videos. A full 41 percent of this group claimed that the “Captain Marvel” remake was better.

But simple text descriptions of the fake remakes proved just as convincing. That finding undercuts concerns that deepfake footage has a unique ability to distort people’s memories.

“Our findings are not especially concerning, as they don’t suggest any uniquely powerful threat of deepfakes over and above existing forms of misinformation,” Murphy told The Daily Beast.

Fortunately, there are ways to keep deepfakes from pulling the rug of reality out from under our feet. For one, we could make sure people are technologically literate enough to tell deepfaked media from real media.

At the same time, generative AI is only going to get more convincing. We will need to stay vigilant to keep the tech from rewriting the past.
