The questionable use of AI-generated content in academic journals poses a problem for the credibility of the scientific community. Some of the culprits are easier to weed out than others, but no one could expect an example as ridiculous as this latest case.

As spotted by a user on X-formerly-Twitter, a new study published this week in the journal Frontiers in Cell and Developmental Biology — which is supposedly peer-reviewed, mind you — heavily features figures and diagrams that are obviously AI-generated. And, how should we put this? They’re insane-looking nonsense.

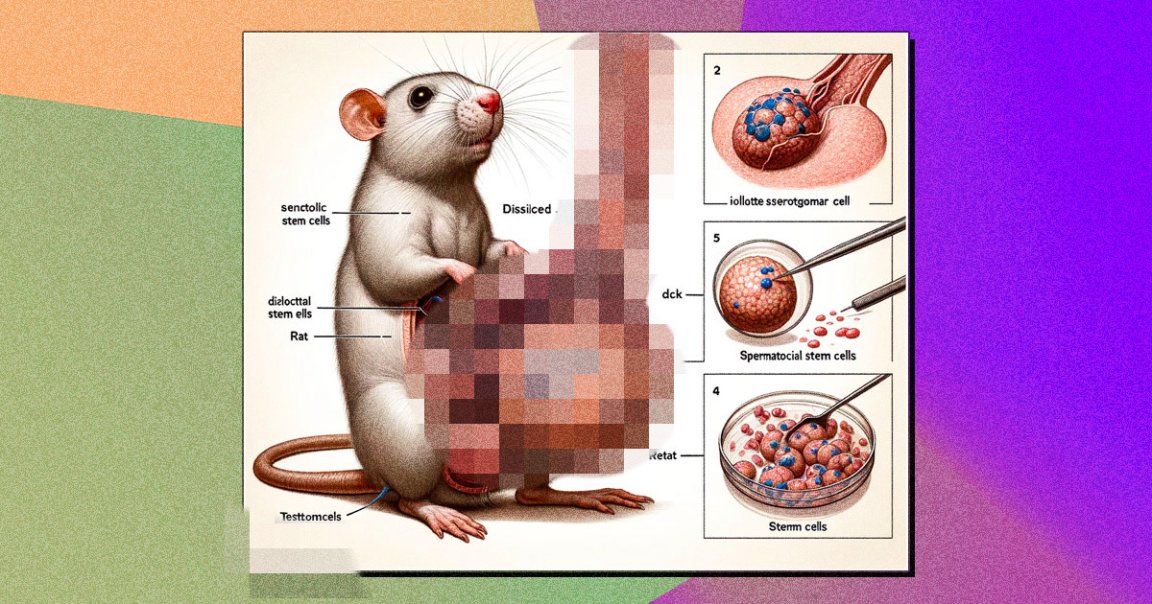

Take a look, for example, at this diagram of an extremely well-endowed mouse. The morphology is so grotesque and comical it defies any explanation, flaunting what appears to be a dissected view of testicles nearly as large as the mouse itself. They’re also protruding from its stomach and just sort of hanging out there in the open, tethered to some unknown upper anatomy out of the frame.

Note the emphasis on “appears to be,” because the labels on the diagram are made-up gobbledygook like “testtomcels,” “retat,” and “dck.” It did get a few things right, though, like this helpful line pointing to the middle of the creature labeled “Rat.”

To the researchers’ credit, they weren’t being deceptive, disclosing that the “images in this article were generated by Midjourney.” Yet they appear to have made little or no effort to edit them, which ends up undermining their credibility anyway. Moral of the story: double-check your AI-hallucinated stuff.

Speaking of double-checking, some members of the scientific community warn that Frontiers is what’s known as a “predatory journal,” a type of publication that misleadingly claims to peer-review submissions while taking advantage of scientists desperate to get a paper published. If true, that might help explain why these absolutely confounding images didn’t raise any alarms; no one, it seems, is doing any real checking.

It’s worth noting, however, that not everyone agrees with that characterization of Frontiers, as which journals count as “predatory” remains a heated topic in the community. Addressing this most recent controversy, the publisher acknowledged that it’s seen the “scrutiny” blowing up on social media.

“We thank the readers for their scrutiny of our articles: when we get it wrong, the crowdsourcing dynamic of open science means that community feedback helps us to quickly correct the record,” Frontiers wrote on X.

Regardless of the publication, it’s undeniable that generative AI has made worrying inroads into academia. Some papers have been more upfront by listing ChatGPT as a co-author. Others made no such disclosure, but managed to slip through peer review anyway.

The ones that do get caught often make careless mistakes, like forgetting to delete telltale AI phrases such as “regenerate response.” In any case, the content an AI generates is usually filled with factual errors. That’s a no-go for any kind of published material, but especially the scientific kind, which relies on highly technical knowledge that a general-purpose chatbot won’t use correctly.
