Broken Algo

Using an algorithm similar to those that predict earthquake aftershocks, cops have spent more than a decade trying to use “predictive policing” software to forecast where crimes will take place. As a joint investigation by The Markup and Wired shows, it seems to have failed miserably.
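The investigation doesn't spell out the math, but "aftershock" models are typically self-exciting point processes. In these models, each event briefly raises the odds of more events nearby, the way a big quake raises the odds of aftershocks. The sketch below is a generic, simplified version of that idea. It is not Geolitica's code. The exponential time decay, the Gaussian spatial spread, the constant background rate, and every parameter value are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    """A past reported crime: time in days, location in arbitrary map units."""
    t: float
    x: float
    y: float


def intensity(t, x, y, events, mu=0.1, theta=0.5, omega=0.2, sigma=0.1):
    """Expected event rate at time t and location (x, y).

    mu:    constant background rate (real models usually vary it by location)
    theta: average number of follow-on events each event triggers
    omega: how fast the triggering effect fades over time (per day)
    sigma: how far it spreads in space (Gaussian kernel width)
    All values here are placeholders, not fitted to any real data.
    """
    rate = mu
    for e in events:
        if e.t >= t:
            continue  # only past events can trigger "aftershocks"
        dt = t - e.t
        d2 = (x - e.x) ** 2 + (y - e.y) ** 2
        temporal = omega * math.exp(-omega * dt)  # integrates to 1 over time
        spatial = math.exp(-d2 / (2 * sigma ** 2)) / (2 * math.pi * sigma ** 2)
        rate += theta * temporal * spatial
    return rate


# Usage: rank two hypothetical map cells by predicted intensity on day 2.
events = [Event(0.0, 0.0, 0.0), Event(1.0, 0.1, 0.0)]
cells = [(1.0, 1.0), (0.0, 0.0)]
ranked = sorted(cells, key=lambda c: intensity(2.0, c[0], c[1], events), reverse=True)
print(ranked[0])  # (0.0, 0.0): the cell next to recent events ranks first
```

A tool built this way flags the highest-scoring cells as places to patrol. Recent incidents therefore pull predictions toward the same neighborhoods again and again.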

Geolitica, predictive policing software used by police in Plainfield, New Jersey (the only one of 38 departments willing to provide the publications with information), was so bad at predicting crimes that its success rate was less than half a percent.

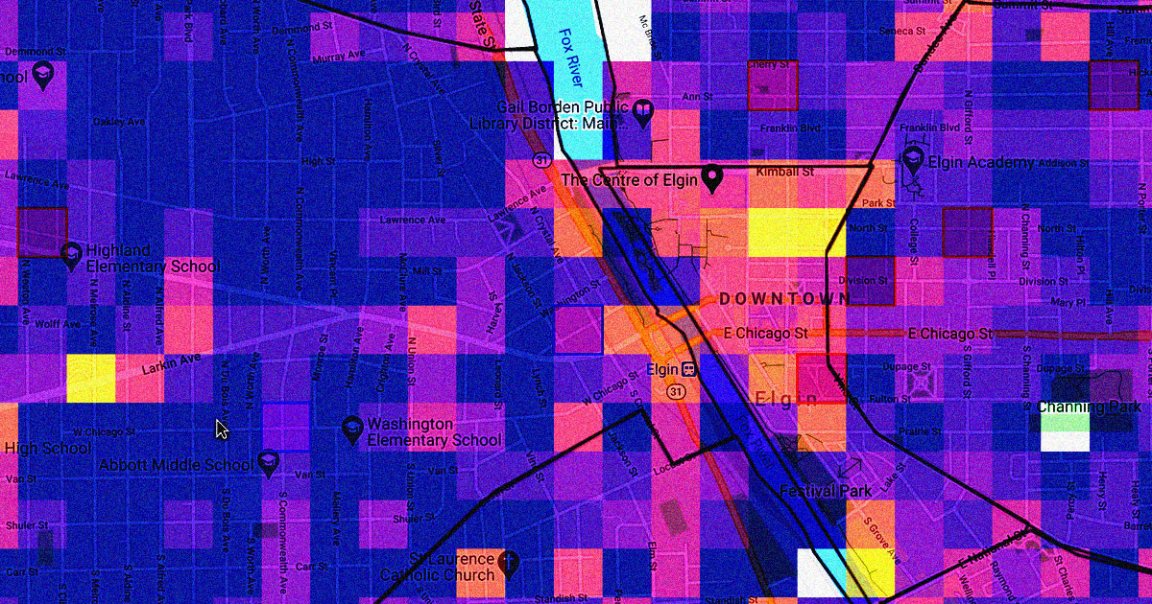

Formerly known as PredPol, the machine learning software purchased by authorities in Plainfield and other police departments promised to help cops fight crime before it happens, much like in Philip K. Dick’s classic novella “Minority Report,” except with computers instead of psychics.

Unsurprisingly, these prediction models, which have been the subject of ample reporting over the years, are riddled with ethical concerns given the discriminatory and racist nature of both artificial intelligence and law enforcement. And as this new investigation reveals, they’re also shockingly ineffective at predicting crime.

Hilariously Bad

To reach this conclusion, The Markup and Wired looked at 23,631 of Geolitica’s predictions made between February and December 2018 and found that fewer than 100 of them lined up with an actual crime, a success rate of less than half a percent.
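The “less than half a percent” figure is simple division. Here is a quick check. It assumes 99 hits, the most that “under 100” allows, because the article doesn't give the exact count.

```python
# Plainfield figures reported by The Markup and Wired (Feb-Dec 2018).
TOTAL_PREDICTIONS = 23_631
# Assumption: "under 100" matches means at most 99; the exact count isn't given here.
MAX_HITS = 99


def hit_rate(hits: int, predictions: int) -> float:
    """Share of predictions that lined up with a reported crime, in percent."""
    return 100.0 * hits / predictions


upper_bound = hit_rate(MAX_HITS, TOTAL_PREDICTIONS)
print(f"hit rate below {upper_bound:.2f}%")  # hit rate below 0.42%
```

Even at that best case, roughly 239 predictions missed for every one that hit.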

The algorithm was slightly better at predicting some crimes than others. Just 0.6 percent of its robbery and aggravated assault predictions were correct, compared with 0.1 percent of its burglary predictions. Either way, the results paint a damning picture of the tool’s effectiveness.

Geolitica was so bad at predicting crime, in fact, that the head of Plainfield’s police department admitted it was rarely used.

“Why did we get PredPol? I guess we wanted to be more effective when it came to reducing crime,” Plainfield PD captain David Guarino told the outlets. “And having a prediction where we should be would help us to do that. I don’t know that it did that.”

“I don’t believe we really used it that often, if at all,” he continued. “That’s why we ended up getting rid of it.”

Guarino went on to suggest that the money his department spent on its contract with Geolitica could have been better spent on community programs. The company initially charged Plainfield PD a $20,500 annual subscription fee, plus an additional $15,500 for a second yearlong extension.

As Wired reported last week, Geolitica is going out of business at the end of this year. Its employees, however, have already been snatched up by SoundThinking, the law enforcement software company formerly known as ShotSpotter, and the soon-to-be-defunct predictive policing platform’s customers will be transferred there as well.

Geolitica, thankfully, will be no more — but its legacy will seemingly live on.
