Crossing Lines

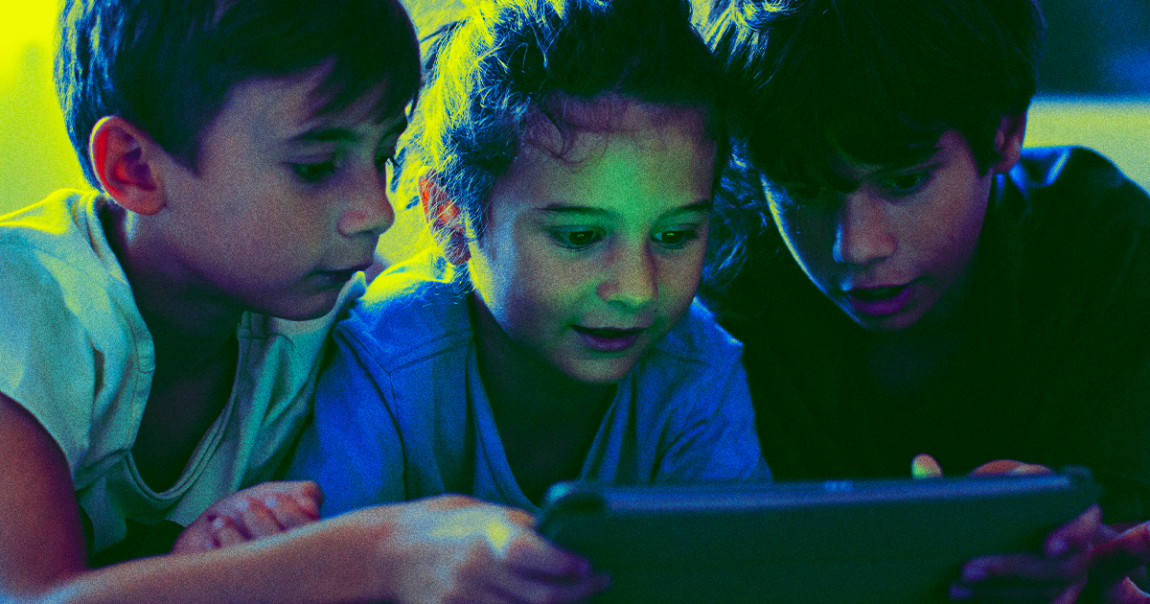

AI hustle bros are back at it. And this time, their get-rich-quick schemes are gunning for your kids’ still-forming brains.

AI scammers are using generative tools to churn out bizarre and nonsensical YouTube kids’ videos, a troubling Wired report reveals. The videos are often created in a style akin to that of the addictive hit YouTube and Netflix show Cocomelon, and are very rarely marked as AI-generated. And as Wired notes, given the ubiquity and style of the content, a busy parent might not bat an eye if this AI-spun mush — much of which is already garnering millions of views and subscribers on YouTube — were playing in the background.

In other words, tablet-prone young children appear to be consuming copious amounts of brain-liquefying AI content, and parents have no clue.

Cocomelon Hell

As Wired reports, a simple YouTube search will return a bevy of videos from AI cash-grabbers teaching others how to use a combination of AI programs — OpenAI’s ChatGPT for scripts, ElevenLabs’ voice-generating AI, the Adobe Express AI suite, and many more — to create videos ranging in length from a few minutes to upwards of an hour.

Some claim their videos are educational, but quality varies. It’s also deeply unlikely that any of these mass-produced AI videos are being pushed out in consultation with childhood development experts, and if the goal is to make money through unmarked AI-generated fever dreams designed for consumption by media-illiterate toddlers, the “we’re helping them learn!” argument feels pretty thin.

Per Wired, researchers like Tufts University neuroscientist Erik Hoel are concerned about how this bleak combination of garbled AI content and prolonged screen time will ultimately affect today’s kids.

“All around the nation there are toddlers plunked down in front of iPads being subjected to synthetic runoff,” the scientist recently wrote on his Substack, The Intrinsic Perspective. “There’s no other word but dystopian.”

YouTube told Wired that its “main approach” to counter the onslaught of AI-generated content filling its platform “will be to require creators themselves to disclose when they’ve created altered or synthetic content that’s realistic.” It also reportedly emphasized that it already uses a system of “automated filters, human review, and user feedback” to moderate the YouTube Kids platform.

Clearly, though, plenty of automated kids’ content is slipping through the self-reporting cracks. And some experts aren’t convinced that this honor code approach is quite cutting it.

“Meaningful human oversight, especially of generative AI, is incredibly important,” Tracy Pizzo Frey, senior AI advisor at the media literacy nonprofit Common Sense Media, told Wired. “That responsibility shouldn’t be entirely on the shoulders of families.”
