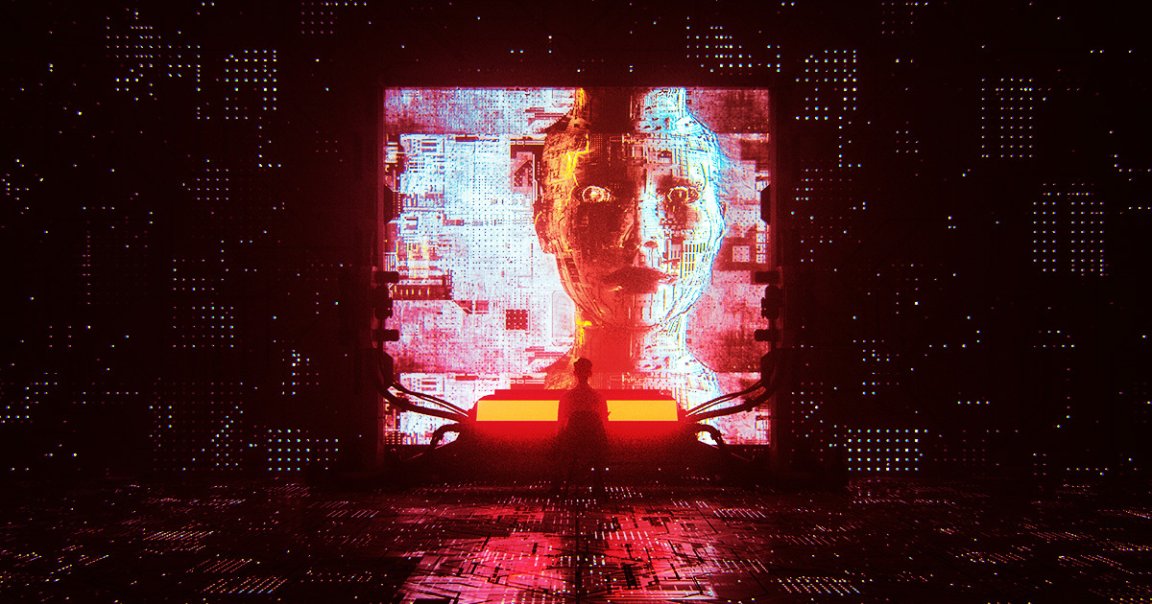

Final Exam

AI experts are calling for submissions for the “hardest and broadest set of questions ever” to try to stump today’s most advanced artificial intelligence systems — as well as those that are still coming.

As Reuters reports, this test, memorably dubbed “Humanity’s Last Exam,” is being crowdsourced by the Center for AI Safety (CAIS) and the training data labeling firm Scale AI, which raised a cool $1 billion over the summer at a valuation of $14 billion.

Reuters points out that submissions for this “exam” opened just a day after OpenAI unveiled a preview of its new o1 model. As CAIS executive director Dan Hendrycks notes, o1 seems to have “destroyed the most popular reasoning benchmarks.”

Back in 2021, Hendrycks co-authored two papers proposing AI tests that would evaluate whether models could out-quiz undergraduates. At the time, the AI systems being tested were spouting off answers nearly at random, but as Hendrycks notes, today’s models have “crushed” the 2021 tests.

Abstract Thinking

While the 2021 tests primarily grilled AI systems on math and social studies, “Humanity’s Last Exam” will, according to the CAIS executive director, incorporate abstract reasoning to make it harder. The two organizations are also planning to keep the test criteria confidential rather than releasing them to the public, to make sure the answers don’t end up in any AI training data.

Experts in fields as far-flung as rocketry and philosophy are being encouraged to submit questions, due November 1, that would be difficult for those outside their areas of expertise to answer. After the submissions undergo peer review, the winners will be offered co-authorship of a paper associated with the test and prizes of up to $5,000 sponsored by Scale AI.

While the organizers are casting a very wide net for the types of questions they’re seeking, they told Reuters that one thing will not be on the exam: anything about weapons, because it’s too dangerous for AI to know about.

More on advanced AI: OpenAI’s Strawberry “Thought Process” Sometimes Shows It Scheming to Trick Users