Image-to-Video

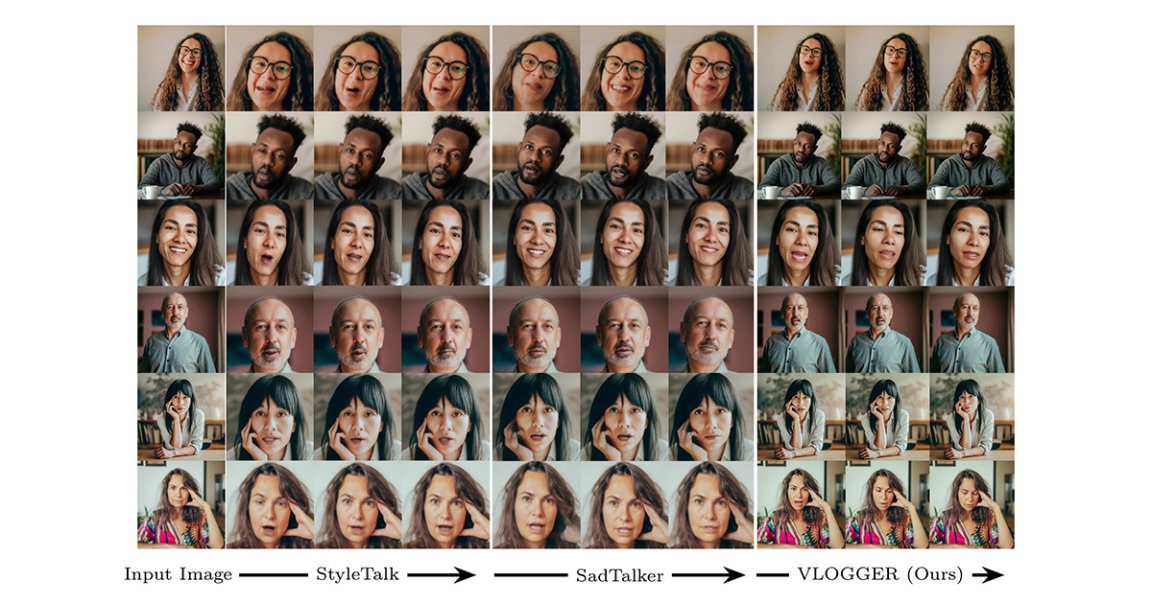

Google researchers have developed a new AI model that can transform a single still image of a person into a moving, talking avatar. It’s called Vlogger, and its surprisingly realistic outputs are nothing short of freaky.

In a white paper, the Google team describes Vlogger as a “novel framework to synthesize humans from audio,” adding that “it is precisely automation and behavioral realism that what we aim for in this work… a multi-modal interface to an embodied conversational agent.” This “agent,” they continue, is ultimately “designed to support natural conversations with a human user.”

So, in other words, the goal of these researchers is absolutely to create realistic-looking fake people that interact in a “human”-feeling way with actual human beings on the other end.

In the paper, the researchers propose that this model, which requires just one image and a desired audio clip as inputs, could be used to “enhance online communication, education, or personalized virtual assistants.” Vlogger can also edit existing videos, which the researchers claim will “ease creative processes.”

They do not, however, mention that a tool capable of generating entirely synthetic, moving-and-speaking video clips from just one image feels ripe for abuse by bad actors.

Anyone, Anywhere

Indeed, it’s the very advancements that Google purports to have made in creating Vlogger that make it so potentially dangerous.

AI deepfakes, for example, are already a growing issue. But while generating a deepfake is easier than ever due to the public availability of generative AI tools, creating a particularly convincing video deepfake generally requires combining multiple AI tools. Vlogger doesn’t eliminate that entirely, since users must still supply the desired audio for the video, but it would likely streamline the process overall.

What’s more, according to the paper, Vlogger “does not require training for each person” that its tech animates. The researchers also say that it “generates the complete image” and “considers a broad spectrum of scenarios” that “are critical to correctly synthesize humans who communicate.”

Put simply, Vlogger doesn’t need to be trained on each person it animates, which presumably allows it to draft a realistic fake video from a single picture of just about anyone, including everyday people who aren’t in the public eye. Nothing could go wrong there, we’re sure.

Vlogger’s AI animations aren’t perfect, at least not yet. They still bear a distinctly inhuman edge, moving and speaking in an awkwardly robotic way. But the tech is impressive nonetheless, enabled by a vast amount of data: according to the paper, the tool is trained on the MENTOR dataset, a trove comprising 2,200 hours of video and “800,000 identities.” And if it continues to improve? Best of luck, reality!
