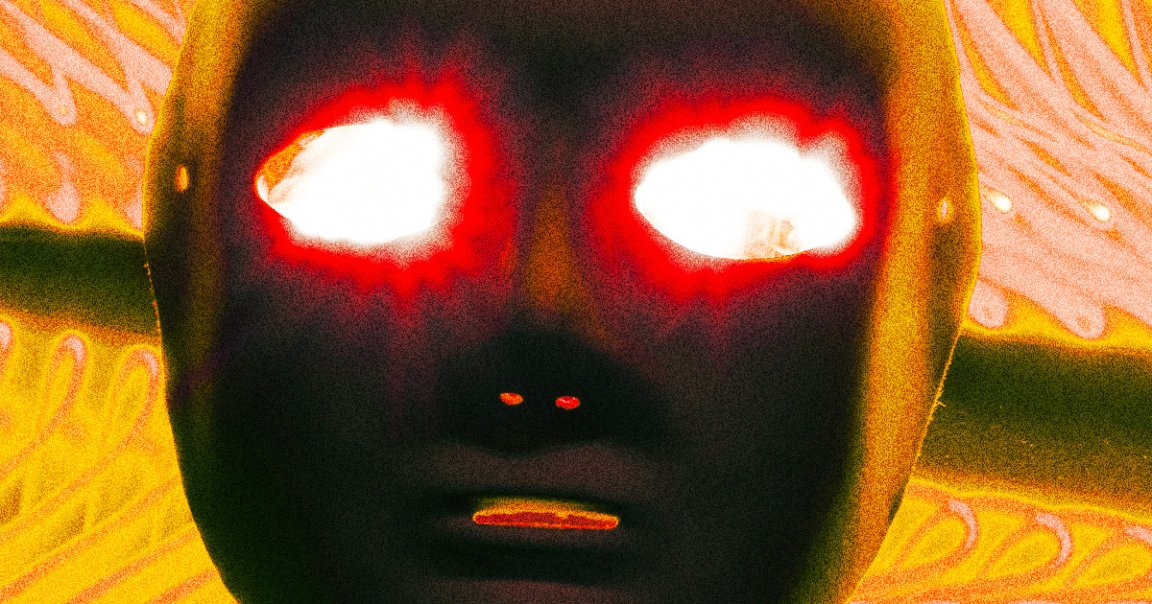

Nonzero Chance

Geoffrey Hinton, a British computer scientist, is often called the “godfather of artificial intelligence.” His seminal work on neural networks broke the mold by mimicking the processes of human cognition, and went on to form the foundation of today's machine learning models.

And now, in a lengthy interview with CBS News, Hinton shared his thoughts on the current state of AI, which he considers to be at a “pivotal moment,” with the advent of artificial general intelligence (AGI) looming closer than we’d think.

“Until quite recently, I thought it was going to be like 20 to 50 years before we have general purpose AI,” Hinton said. “And now I think it may be 20 years or less.”

AGI is the term that describes a potential AI that could exhibit human or superhuman levels of intelligence. Rather than being narrowly specialized, an AGI would be capable of learning and thinking on its own to solve a vast array of problems.

For now, omens of AGI are often invoked to hype up the capabilities of current models. But regardless of the industry bluster hailing its arrival or how long it might really be before AGI is upon us, Hinton says we should be carefully considering its consequences now, which may include the minor issue of it trying to wipe out humanity.

“It’s not inconceivable, that’s all I’ll say,” Hinton told CBS.

The Big Picture

Still, Hinton maintains that the real issue on the horizon is how AI technology that we already have — AGI or not — could be monopolized by power-hungry governments and corporations (see: the former non-profit and now for-profit OpenAI).

“I think it’s very reasonable for people to be worrying about these issues now, even though it’s not going to happen in the next year or two,” Hinton said in the interview. “People should be thinking about those issues.”

Luckily, by Hinton’s outlook, humanity still has a little bit of breathing room before things get completely out of hand, since current publicly available models are mercifully stupid.

“We’re at this transition point now where ChatGPT is this kind of idiot savant, and it also doesn’t really understand about truth,” Hinton told CBS, explaining that the model is trying to reconcile the differing and opposing opinions in its training data. “It’s very different from a person who tries to have a consistent worldview.”

But Hinton predicts that “we’re going to move towards systems that can understand different world views,” which is spooky, because it inevitably means whoever is wielding the AI could use it to push a worldview of their own.

“You don’t want some big for-profit company deciding what’s true,” Hinton warned.
