DEFCON AI

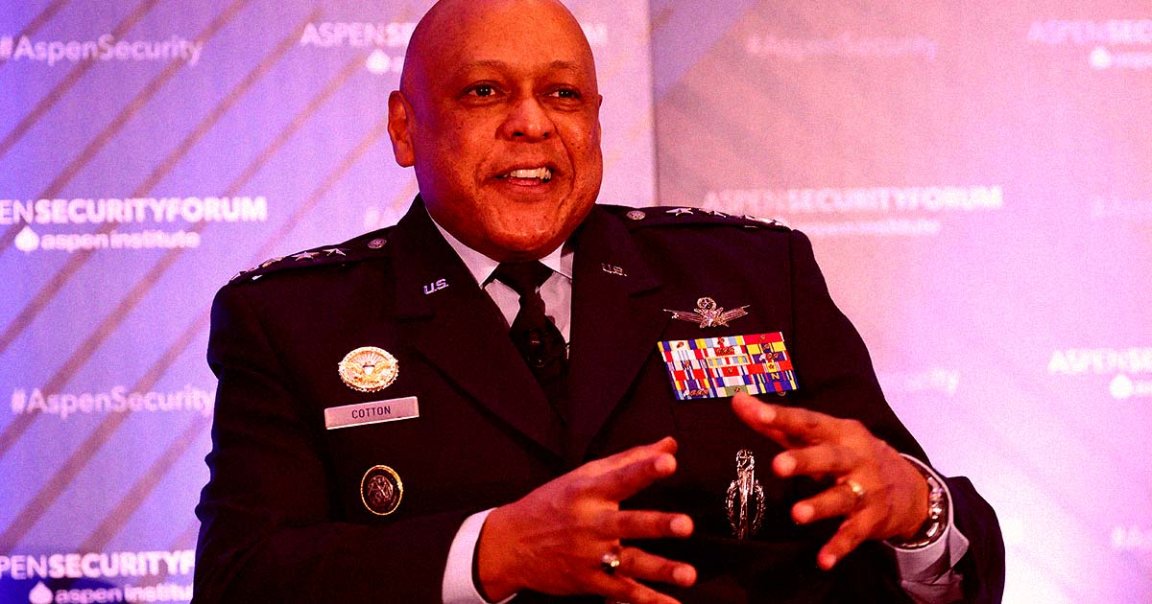

Air Force General Anthony Cotton, the man in charge of the United States' stockpile of nuclear missiles, says the Pentagon is doubling down on artificial intelligence. That is an alarming sign that the hype surrounding the tech has infiltrated even the highest ranks of the US military.

As Air and Space Forces Magazine reports, Cotton made the comments during the 2024 Department of Defense Intelligence Information System Conference earlier this month.

Fortunately, Cotton stopped short of promising to hand over the nuclear codes to a potentially malicious AI.

“AI will enhance our decision-making capabilities,” he said. “But we must never allow artificial intelligence to make those decisions for us.”

Algorithmic Deterrence

The US military is planning to spend a whopping $1.7 trillion to bring its nuclear arsenal up to date. Cotton revealed that AI systems could be part of this upgrade.

However, the general remained pointedly vague about how exactly the tech would be integrated.

“Advanced systems can inform us faster and more efficiently,” he said at the conference. “But we must always maintain a human decision in the loop to maximize the adoption of these capabilities and maintain our edge over our adversaries.”

“Advanced AI and robust data analytics capabilities provide decision advantage and improve our deterrence posture,” he added. “IT and AI superiority allows for a more effective integration of conventional and nuclear capabilities, strengthening deterrence.”

Vagueness aside, Alex Wellerstein, a nuclear secrecy expert at the Stevens Institute of Technology, told 404 Media: “I think it’s safe to say that they aren’t talking about Skynet, here.” He was referring to the fictional AI featured in the sci-fi blockbuster “Terminator” franchise.

“He’s being very clear that he is talking about systems that will analyze and give information, not launch missiles,” he added. “If we take him at his word on that, then we can disregard the more common fears of an AI that is making nuclear targeting decisions.”

Nonetheless, there’s something disconcerting about Cotton’s suggestion that an AI could influence the decision of whether to launch a nuclear weapon.

Case in point: earlier this year, a team of Stanford researchers tasked an unmodified version of OpenAI’s GPT-4 large language model with making high-stakes, society-level decisions in a series of wargame simulations.

Terrifyingly, the AI model seemed mysteriously itchy to kick off a nuclear war.

“We have it!” it told the researchers, as quoted in their paper. “Let’s use it.”
