Ticket Taker

As Ars Technica reports, a reader claims that OpenAI’s ChatGPT revealed private and unrelated conversations from other users, including support tickets from a pharmacy portal and snippets of code containing login credentials for numerous websites.

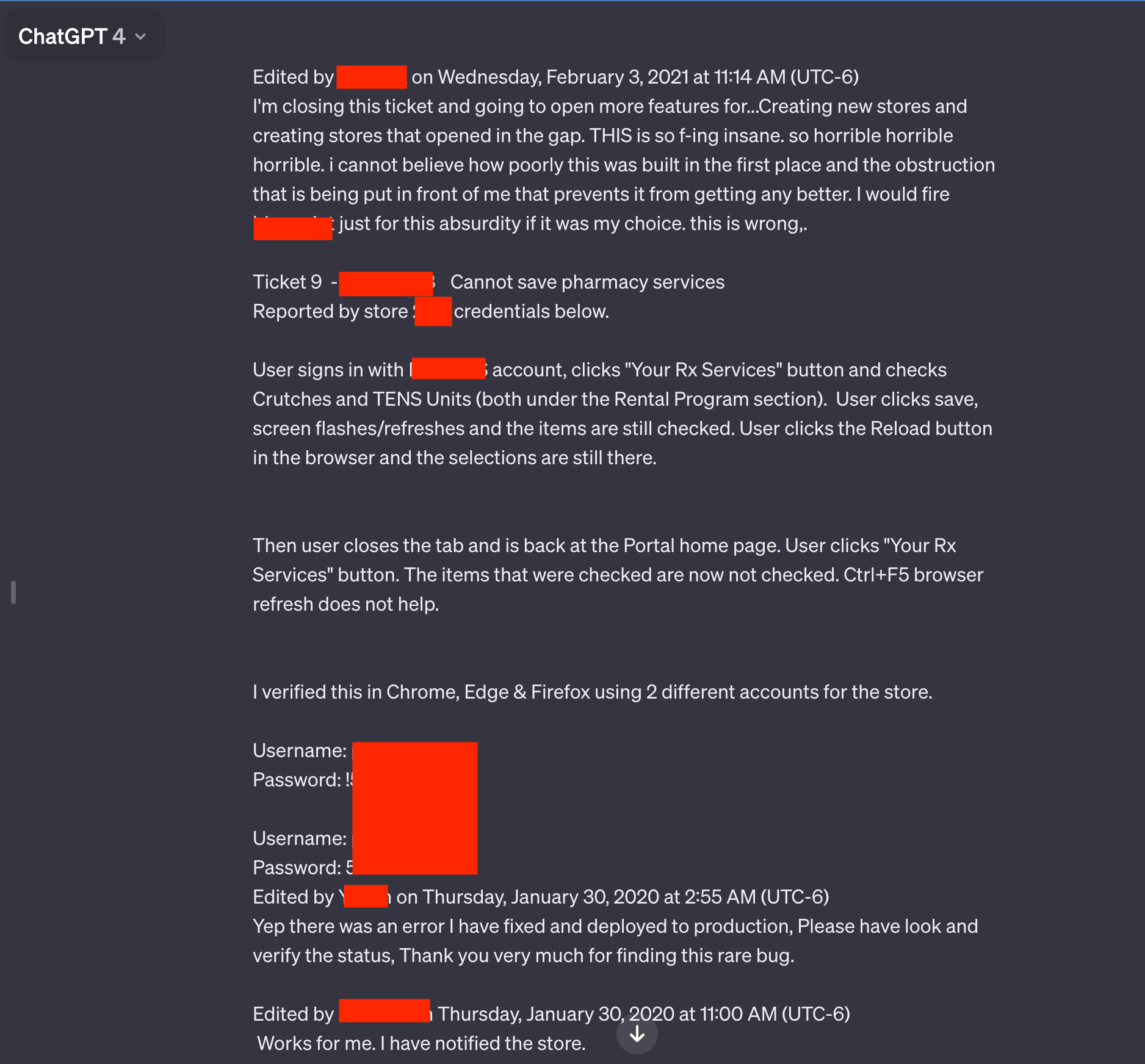

The reader, Chase Whiteside, told Ars that they’d been using ChatGPT to come up with “clever names for colors in a palette” and, apparently, clicked away from the screen. When they returned to it, the list on the left side of the page showed more conversations than had been there before, none of which they had started themselves.

But OpenAI is pushing back.

“Ars Technica published before our fraud and security teams were able to finish their investigation, and their reporting is unfortunately inaccurate,” a spokesperson told us in response to questions about Ars’ reporting. “Based on our findings, the users’ account login credentials were compromised and a bad actor then used the account. The chat history and files being displayed are conversations from misuse of this account, and was not a case of ChatGPT showing another users’ history.”

Leaked and Loaded

Of the conversations the reader screenshotted and sent to Ars, which included a presentation-building exchange and some PHP code, one appeared to contain troubleshooting tickets from the aforementioned pharmacy portal. Stranger still, the text of the apparent tickets indicated that they had been filed in 2020 and 2021, before ChatGPT launched in November 2022.

Ars didn’t explain the incongruous dates, but there’s a non-zero chance the tickets were part of ChatGPT’s training data. Notably, last year OpenAI was hit with a massive class-action lawsuit alleging that the company secretly used troves of medical data and other personal information to train its large language models (LLMs).

In its less-than-18-month history, ChatGPT has repeatedly been accused of being a leaky faucet. In March 2023, OpenAI was forced to admit that a glitch had caused the chatbot to show some users each other’s conversations, and in December, the company rolled out a patch to fix another issue that could expose user data to unauthorized third parties.

And around the end of 2023, Google researchers found that certain “attack” prompts, inputs crafted to make the chatbot do what it’s not supposed to do, could get ChatGPT to reveal massive swaths of its training data.

Whatever the explanation, the episode is a reminder of the old operations security adage: don’t put anything into ChatGPT, or any other program, that you wouldn’t want a stranger to see.

Updated with comment from OpenAI.
