Wrong Again

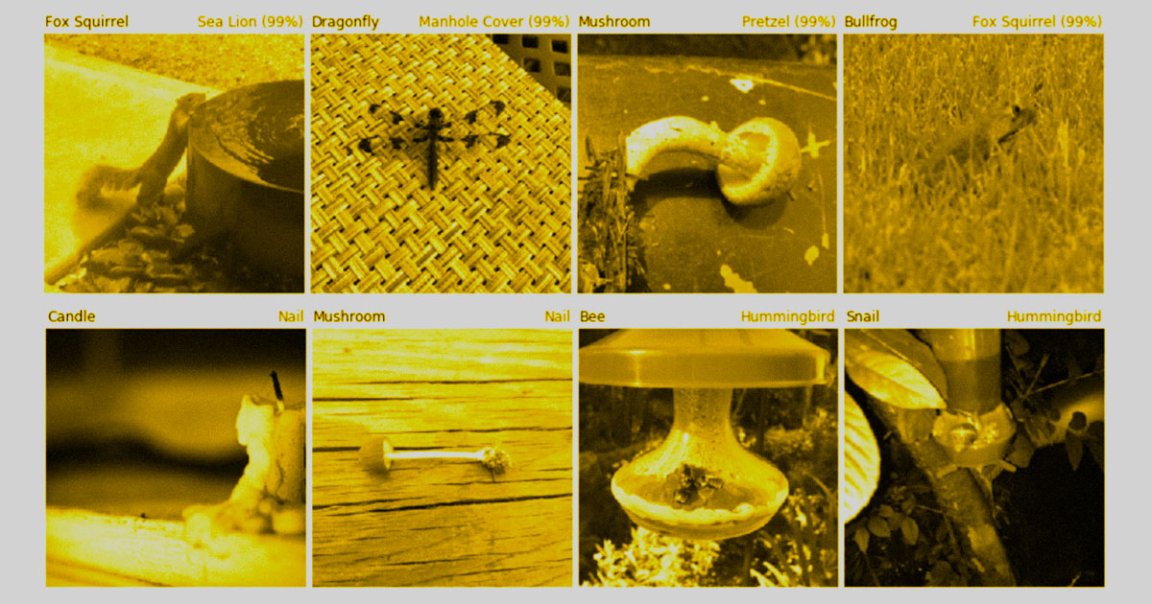

Image recognition software powered by artificial intelligence has come a long way. But it’s not foolproof: a team of researchers assembled a set of photos that machine vision systems identify correctly only about two percent of the time, even though the subjects are blatantly obvious to a human.

The researchers collected 7,500 unmodified photographs of objects that completely throw AIs for a loop. A preprint of their research was posted to the repository arXiv.

Thanks to “deep flaws in current classifiers including their over-reliance on color, texture, background cues,” an AI’s accuracy drops dramatically on these photos. The result: an image of a lynx gets classified as a crayfish, or an alligator gets confused with a hummingbird.

ImageNet

The photos belong to a much larger industry-standard database called ImageNet, which comprises millions of hand-labeled images and is often used to train image recognition AIs.

The database was first put together in 2006, and over the years AI models have become more and more accurate at identifying what appears in its millions of photos.

But AI-powered image recognition systems are still far from perfect. For instance, in 2018, Amazon’s face recognition AI, Rekognition, incorrectly matched 28 members of Congress with mugshots of criminals.

The researchers hope their work will eventually lead to more accurate and robust machine vision systems that also consider the context of an image, not just the image itself.

READ MORE: AI fails to recognize these nature images 98% of the time [The Next Web]
