Artificial intelligence (AI) fueled many high-profile developments in 2016. We saw self-driving cars hit the streets of Pittsburgh, became familiar with helpful talking devices in our homes, and had fun playing Pictionary with a computer.

Yet, current AI-powered services generally lack the high-level comprehension and reasoning required to effect truly transformative change in enterprise. While some existing services can match or beat humans at individual tasks, it’s been hard getting machines to transfer learned knowledge and skills across multiple tasks. Without this transfer, practical applications of AI will remain restricted to one or two domains at a time.

Research in deep learning and reinforcement learning is finally endowing machines with the capabilities that can free AI-powered services from their constraints. Crucially, researchers are finding ways to help machines gain competence using some of the same tricks as humans – improving decision making through trial and error, motivating behavior through curiosity and play, and acquiring basic common sense.

Can researchers really create better AI by letting machines learn as humans do?

Complex Decision Making

Consider a child charged with setting the table for dinner. She’ll first need to clear the table, then get the placemats and set them out. Next come the plates and glasses. Of course, knives, forks, and spoons need to be laid out precisely too. While doing this, she must be careful not to drop anything or bump into her parents while they prepare the food. To successfully set the table, the child must complete a host of overlapping subtasks.

Acclaimed cognitive scientist Marvin Minsky postulated that human behavior is not the result of a single cognitive agent, but arises from a collection of individually simple, interacting agents. Put succinctly, humans complete any task by completing a multitude of smaller tasks.

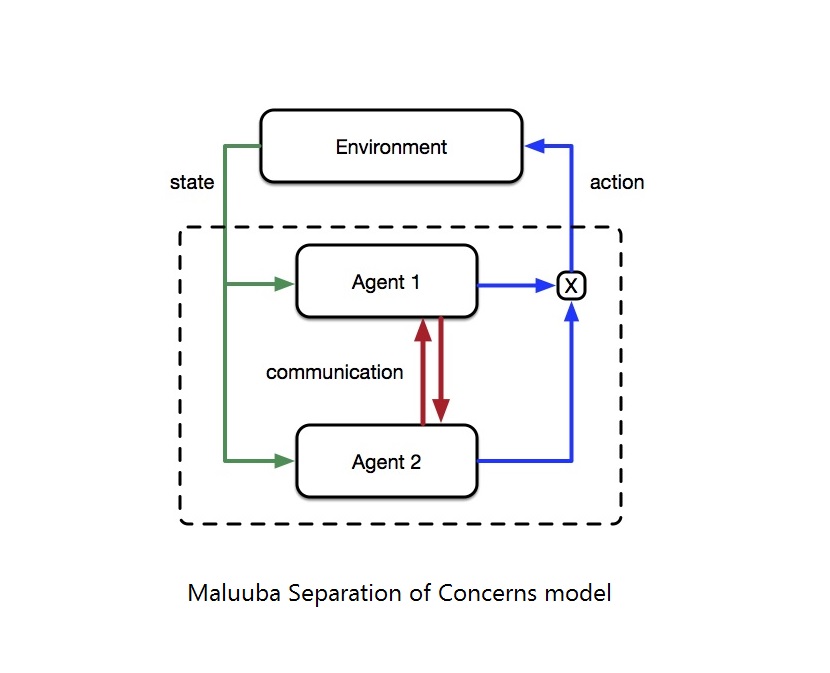

In the field of reinforcement learning, which concerns learning behavior through experience, a similar strategy can be followed to improve scalability. Using an approach based on separation of concerns, a difficult task may be decomposed into a set of more tractable subtasks. This modular approach speeds learning by focusing effort on small tasks that are both easy to solve and broadly useful.
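To make the idea of decomposition concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the method used in any particular research system: the corridor environment, the two subtask names, and every function below are hypothetical. Each subtask learns its own value table from its own slice of the reward, and the final behavior comes from summing those tables.

```python
# Illustrative sketch only: the environment, subtask names, and helpers
# are hypothetical and chosen to keep the example tiny and deterministic.
GAMMA = 0.9                # discount factor
ALPHA = 0.5                # learning rate
STATES = range(5)          # corridor cells 0..4
ACTIONS = (-1, +1)         # step left or step right
TERMINAL = {0, 4}          # cell 0 is a hazard, cell 4 is the goal


def step(state, action):
    """Deterministic move along the corridor, clamped to its ends."""
    return max(0, min(4, state + action))


# Each subtask sees only its own part of the overall reward.
REWARDS = {
    "reach_goal": lambda nxt: 1.0 if nxt == 4 else 0.0,
    "avoid_hazard": lambda nxt: -1.0 if nxt == 0 else 0.0,
}


def train(reward_fn, sweeps=200):
    """Learn one subtask's Q-table by sweeping Q-learning updates
    over every non-terminal state and action."""
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(sweeps):
        for s in STATES:
            if s in TERMINAL:
                continue
            for a in ACTIONS:
                nxt = step(s, a)
                future = 0.0 if nxt in TERMINAL else max(q[(nxt, b)] for b in ACTIONS)
                target = reward_fn(nxt) + GAMMA * future
                q[(s, a)] += ALPHA * (target - q[(s, a)])
    return q


# One small learner per subtask.
sub_q = {name: train(fn) for name, fn in REWARDS.items()}


def greedy_action(state):
    """Combine the subtask learners by summing their value estimates."""
    return max(ACTIONS, key=lambda a: sum(q[(state, a)] for q in sub_q.values()))


policy = {s: greedy_action(s) for s in STATES if s not in TERMINAL}
print(policy)  # {1: 1, 2: 1, 3: 1}
```

Neither learner knows about the other's objective, yet the combined policy heads toward the goal and away from the hazard from every cell. Summing the tables is only one simple way to combine subtask learners; other aggregation schemes are possible.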

This could yield more capable services whose competence spans multiple domains. Rather than AI-powered tools limited to clearly-defined tasks like managing calendar schedules, we could see capabilities that extend to more complex decision making and proactively help managers make business decisions. For example, rather than a single agent coordinating one person’s schedule, a distributed set of agents could coordinate many schedules, helping to organize larger events or conferences with thousands of attendees.

Building Human-Like Knowledge

Humans are great at learning new things. We’re naturally curious and want to understand the world around us. Though it takes time to learn a new skill, the skills we learn seldom stand alone. We often benefit from knowledge gained through prior experience.

Learning to code in one programming language helps with learning to code in another, and learning to drive a car helps with learning to drive a truck. With each new skill we learn, learning related skills becomes easier. To develop Artificial General Intelligence, we need to give machines this same ability to learn faster with each attempt at a related task.

Researchers are working on ways to help AI retain knowledge gained while solving one problem, and then apply it to new but similar problems. This is known as meta-learning, and the idea has a long history in machine learning. Motivated by studies of curiosity and information seeking in robots and simulated agents, some recent work revisits it with state-of-the-art tools from deep learning and reinforcement learning.

AI-powered systems that learn where and how to find information pertinent to the tasks set before them would represent a significant advance over existing technology. For example, the ability to iteratively query a search engine and assimilate the results would alleviate the burden of “knowledge engineering” that limits current question answering systems. Similarly, the ability to intelligently and adaptively elicit a user’s preferences would permit better product recommendations in less time.

Machines that know how to find what they need to know would provide great value.

The Digital Awakening

Humans gain common sense naturally, simply by experiencing the world around us. We know that if you throw a ball into the air it will fall down. We know that you can fit a sandwich into a lunchbox. We know these things because we’ve seen balls thrown and eaten sandwiches from lunchboxes. In contrast, machines have no physical presence in the world, no embodied upbringing during which seeing and learning would be inevitable.

Though competent in the situations their developers planned for, existing AI-powered systems tend to break down when confronted with the unexpected. These systems lack the basic common sense that permits humans to effortlessly navigate situations not entirely unlike those they’ve encountered before. For machines to interact fluently with humans through natural language, we need to teach them about all the little things we take for granted.

As noted by Prof. Jackie Chi Kit Cheung of the Reasoning and Learning Lab at McGill University in Montreal: “Conversational interactions with current systems have been constrained, as it can be very difficult for machines to understand the subtleties of language and the broad range of words, terms and phrases that humans use.”

Dr. Cheung’s work seeks to tackle the Winograd Schema Challenge, which has been proposed as an alternative to the Turing Test. In the challenge, multiple-choice questions are posed such that common-sense knowledge and reasoning must be used to determine the answers.
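For readers who have not seen one, the sketch below shows how a single Winograd schema might be represented in Python. The trophy-and-suitcase sentence is a widely quoted example from the challenge literature, not one taken from this article, and the `WinogradSchema` class and its fields are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WinogradSchema:
    """One schema: a sentence with an ambiguous pronoun whose referent
    flips when a single 'special' word is swapped. (Hypothetical type.)"""
    template: str        # sentence with a {word} slot for the special word
    pronoun: str         # the ambiguous pronoun
    candidates: tuple    # the two possible referents
    answers: dict        # special word -> correct referent

    def question(self, special_word):
        sentence = self.template.format(word=special_word)
        options = ", ".join(self.candidates)
        return f"{sentence} What does '{self.pronoun}' refer to? Options: {options}"

    def is_correct(self, special_word, choice):
        return self.answers[special_word] == choice


trophy = WinogradSchema(
    template="The trophy doesn't fit in the brown suitcase because it is too {word}.",
    pronoun="it",
    candidates=("the trophy", "the suitcase"),
    answers={"big": "the trophy", "small": "the suitcase"},
)

print(trophy.question("big"))
print(trophy.is_correct("small", "the suitcase"))  # True
```

Changing one word, "big" to "small", changes the correct answer. Resolving the pronoun therefore depends on knowing how objects fit inside containers rather than on surface patterns in the sentence.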

Throughout 2017, researchers will develop more capable algorithms, companies will deliver more useful tools and services, and interest in AI will continue to grow. We’ll see greater adoption of AI capabilities that apply across an increasingly diverse set of tasks rather than being confined to limited domains. These capabilities will take on more complex challenges involving inference, synthesis, and decision-making, helping employees in their daily roles. Though it could be some time before we see a truly general Artificial Intelligence, this year will undoubtedly bring exciting theoretical and practical advancements in AI.

Philip Bachman is a Researcher at Microsoft working with the Maluuba team in Montreal. His research centers on language understanding, covering machine comprehension, dialogue and conversational interfaces, and complex challenges in human-machine interaction. He has presented papers at leading academic conferences exploring novel techniques for addressing the underlying challenges. Prior to joining Maluuba, Philip completed his PhD at McGill University.