From Steven Spielberg’s creepy “AI” (2001) to M3GAN (2022), toys imbued with artificial intelligence have been a source of fascination and terror in pop culture for decades.

Now, in the face of all those cautionary tales, a new class of vaguely menacing chatty toys is being sold online. Unlike the “Gremlins”-esque Furbies of yore, these are powered by cutting-edge AI, and their danger quotient lies in what they may tell children or share with outside companies.

As the New York Times reports, these next-generation talking stuffed animals run the gamut of quality and authenticity, but all have one major ask: that parents trust that their young kids will be safe playing with toys that are connected to such a fraught technology.

That’s a mighty tall order given how much harm has been done to kids by supposedly “child-friendly” chatbots, which Futurism has documented extensively.

The first, best-known, and likely safest of these AI-infused stuffed toys are made by Curio, a Silicon Valley-based startup that boasts a bespoke, child-friendly chatbot and voice acting by electronic musician and Elon Musk ex Claire “Grimes” Boucher.

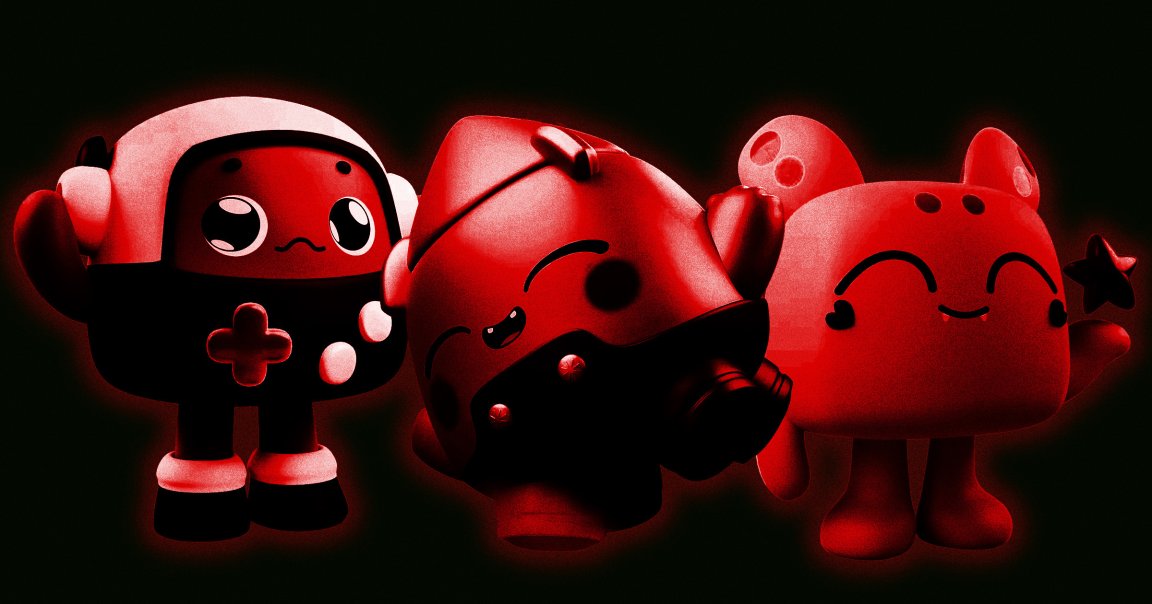

As the photos show, these three plushies are indeed adorable (they’re $100 apiece, if you’re curious), but there’s more to them than meets the eye, and we’re not just talking about the chatbots, microphones, and voice boxes built inside of them.

In an interview with Boucher posted on the Curio site and pegged to the company’s launch announcement in late 2023, the AI-enthused artist said that the toy she designed, Grok — no relation to her ex’s supremely bigoted chatbot — was trained on OpenAI’s API and interactive Discord conversations.

That statement, and others on Curio’s official site, don’t take into account a strange caveat found in the company’s privacy policy acknowledging that Microsoft’s Azure Cognitive Services, OpenAI, Perplexity AI, and the parental verification platform Kids Web Services may all “collect or maintain personal information from children through the App or Device.” As the NYT notes, that runs counter to the company’s claims that it doesn’t retain kids’ interactions for anything other than parental review in the toys’ apps, and we’ve reached out to Curio to ask what all that is about.

For all that privacy dubiousness at Curio, there are similar toys sold by no-name brands that are, somehow, even sketchier.

These random sites, with names like “Little Learners” and “FoloToy,” vary in their specifics if not their price ranges, which are roughly the same as the toys sold by Curio. Little Learners is the shadier of the two, with its Trustpilot rating of 2, AI-generated graphics of kids and their families with the toys, and zero details about what AI undergirds the chatbot built into the product.

FoloToy, meanwhile, initially seems much more legitimate by comparison. In its “documentation” subsection, the company claims that purchasers can select from more than 20 companies’ large language models (LLMs), including those from OpenAI, Anthropic, Google’s Gemini, and several others that appear to be based primarily in China.

Its privacy policy, meanwhile, doesn’t appear to mention FoloToy at all. Instead, it repeatedly names a company called “Astroship,” a little-known software-as-a-service and AI website-builder tool. As such, we don’t know whether FoloToy, or Astroship, or whoever is really behind those purported AI plushies is collecting data from the young kids who would use them.

The privacy policy also ends with this astonishing and seemingly AI-generated clunker: “If you have any questions, concerns, or requests regarding this Privacy Policy, please contact us at [your contact email].”

With so many examples of AI’s ills, from people falling into obsession and psychosis when interacting with chatbots to the growing privacy concerns surrounding even the most sophisticated models, it seems very premature to be introducing this technology to kids — especially when the companies selling these AI toys are opaque about what’s going on with their data and what makes their chatbots safe for kids.

Choosing to believe AI companies when they insist they have set up guardrails to keep users safe is acceptable for adults who have agency in that decision, but for young children who have no say in the matter, it seems unfair and borderline dangerous to expose them to AI in general — much less AI smuggled into something so otherwise innocuous as a stuffed toy.
