Countless millions of people use AI assistants such as Siri or Alexa to do everything from making purchases online to controlling the locks in their smart homes.

Just say the command that triggers the assistant, and the sound waves from your voice will hit a part of your smart device’s microphone called the diaphragm. That causes the diaphragm to move, producing electrical signals that the device’s software can understand and respond to.

But according to new research funded in part by the U.S. Defense Advanced Research Projects Agency, cybercriminals could hack Siri, Alexa, and other voice-activated AI assistants without saying a word — they just need a line of sight to the device.

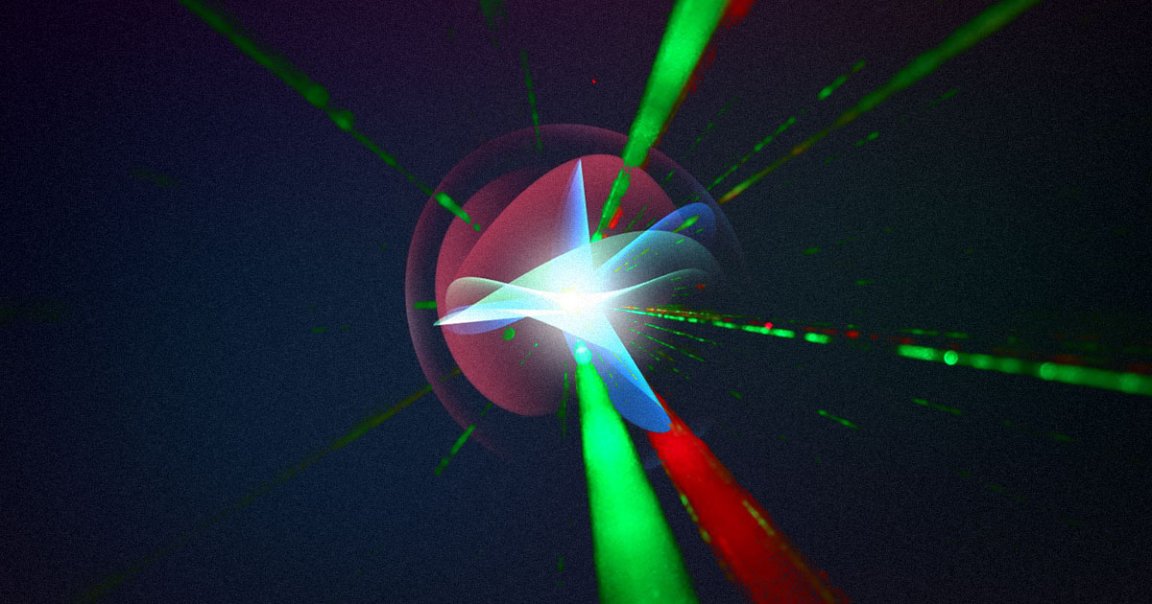

A team of researchers from Japan's University of Electro-Communications and the University of Michigan found that an attacker could encode a command in a beam of light rather than speak it. When that light is shone on a device's microphone, the diaphragm moves just as it would if struck by sound waves.

In tests, the researchers found they could hack Siri and other AI assistants from up to 110 meters (360 feet) away, a distance limited only by the length of the longest hallway they had access to. They also controlled a device in one building from a bell tower 70 meters (230 feet) away by shining their light through a window.

Someone could buy the equipment needed to command an AI assistant using light for less than $400, the researchers said. The telephoto lens needed for long-range attacks bumps that up to $600, but that’s still a small price to pay for access to anything from a person’s online accounts to the locks keeping their home secure.

READ MORE: With a Laser, Researchers Say They Can Hack Alexa, Google Home or Siri [The New York Times]
