OpenAI is a non-profit artificial intelligence research company whose goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return. Oh, and they’re hiring.

Ask Me Anything

The Open AI research team did a reddit AMA on Saturday. The members present included Greg Brockman (CTO), Ilya Sutskever (Research Director), and world-class research engineers and scientists (and some of the founding team members) Andrej Karpathy, Durk Kingma, John Schulman, Vicki Cheung, and Wojciech Zaremba. What follows are some of the best questions posed by reddit users.

Questions and answers may be edited for length and clarity.

kkastner:

How did OpenAI come to exist? Is this an idea one of you had, were you approached by one of the investors about the idea, or was it just a “meeting of the minds” that spun into an organization?

Greg Brockman:

OpenAI started as a bunch of pairwise conversations about the future of AI involving many people from across the tech industry and AI research community. Things transitioned from ideaspace to an organizational vision over a dinner in Palo Alto during summer 2015. After that, I went full-time on putting together the group, with lots of help from others. So it truly arose as a meeting of the minds.

kkastner:

For anyone who wants to answer – how did you get introduced to deep learning research in the first place?

Greg Brockman:

I’m a relative newcomer to deep learning. I’d long been watching the field, and kept reading these really interesting deep learning blog posts such as Andrej’s excellent char-rnn post. I’d left Stripe back in May intending to find the maximally impactful thing to build, and very quickly concluded that AI is a field poised to have a huge impact. So I started training myself from tutorials, blog posts, and books, using Kaggle competitions as a use-case for learning. (I posted a partial list of resources here: https://github.com/gdb/kaggle#resources-ive-been-learning-from.) I was surprised by how accessible the field is (especially given the great tooling and resources that exist today), and would encourage anyone else who’s been observing to give it a try.

Ilya Sutskever:

I got interested in neural networks, because to me the notion of a computer program that can learn from experience seemed inconceivable. In addition, the backpropagation algorithm seemed just so cool. These two facts made me want to study and to work in the area, which was possible because I was an undergraduate in the University of Toronto, where Geoff Hinton was working.

_AndrewB_ :

You say you want to create “good” AI. Are You going to have a dedicated ethics team/committee, or will you rely on researchers’ (Dr. Sutskever’s) judgments?

Ilya Sutskever:

We will build out an ethics committee (today, we’re starting with a seed committee of Elon Musk and Sam Altman, but we’ll build this out seriously over time). However, more importantly is the way in which we’ve constructed this organization’s DNA:

First, our goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole. We’ll constantly re-evaluate the best strategy. Today that’s publishing papers, releasing code, and perhaps even helping people deploy our work. But if we, for example, one day make a discovery that will enhance the capabilities of algorithms so it’s easy to build something malicious, we’ll be extremely thoughtful about how to distribute the result. More succinctly: the “Open” in “OpenAI” means we want everyone to benefit from the fruits of AI as much as possible.

We acknowledge that the AI control problem will be important to solve at some point on the path to very capable AI. To see why, consider for instance a capable robot whose reward function itself is a large neural network. It may be difficult to predict what such a robot will want to do. While such systems cannot be built today, it is conceivable that they may be built in the future.

Finally and most importantly: AI research is a community effort, and many if not most of the advances and breakthroughs will come from the wider ML community. It’s our hope that the ML community continues to broaden the discussion about potential future issues with the applications of research, even if those issues seem decades away. We think it is important that the community believes that these questions are worthy of consideration.

_AndrewB_ :

Do you already have any specific research directions that you think OpenAI will pursue? Like reasoning / reinforcement learning? Are you going to focus on basic research only, or does “creating humanity-oriented” AI mean you’ll invest time in some practical stuff like medical diagnosis?

Ilya Sutskever:

Research directions: In the near term, we intend to work on algorithms for training generative models, algorithms for inferring algorithms from data, and new approaches to reinforcement learning. We intend to focus mainly on basic research, which is what we do best. There’s a healthy community working on applying ML to problems that affect others, and we hope to enable it by broadening the abilities of ML systems and making them easier to use.

masharpe:

Is OpenAI planning on doing work related to compiling data sets that would be openly available? Data is of course crucial to machine learning, so having proprietary data is an advantage for big companies like Google and Facebook. That’s why I’m curious if OpenAI is interested in working towards a broader distribution of data, in line with its mission to broadly distribute AI technology in general.

Wojciech Zaremba:

Creating datasets and benchmarks can be extremely useful and conducive for research (e.g. ImageNet, Atari). Additionally, what made ImageNet so valuable was not only the data itself, but the additional layers around it: the benchmark, the competition, the workshops, etc.

If we identify a specific dataset that we believe will advance the state of research, we will build it. However, often very good research can be done with what currently exists out there, and data is critical much more immediately for a company that needs to get a strong result than a researcher trying to come up with a better model.

almostinvisible:

Just to add to this question: Where will the code (and possibly data) be available?

Greg Brockman:

We’ll post code on Github (https://github.com/openai), and link data from our site (https://openai.com) and/or Twitter ( Tweets by open_ai ).

teodorz:

Nowadays Deep Learning is in the minds. But even a few years back, it was graphical models, and before: other methods. Ilya is a well known researcher in Deep Learning field, but are you planning to work in other fields? Who will lead other directions?

What’s driving the work, at least now, the specific value you’re going to bring on the table in the next year?

Ilya Sutskever:

We focus on deep learning because it is, at present, the most promising and exciting area within machine learning, and the small size of our team means that the researchers need to have similar backgrounds. However, should we identify a new technique that we feel is likely to yield significant results in the future, we will spend time and effort on it.

Research-wise, the overarching goal is to improve existing learning algorithms and to develop new ones. We also want to demonstrate the capability of these algorithms in significant applications.

phulbarg:

It seems like some organizations with large amounts of proprietary useful data will supply you with data that presumably won’t be open to the public. How do you intend to keep research open when a major component, namely the data used, can’t be opened up?

Wojciech Zaremba:

We intend to conduct most of our research using publicly available datasets. However, if we find ourselves making significant use of proprietary data for our research, then we will either try to convince the company to release an appropriately anonymized version or the dataset, or simply minimize our usage of such data.

Plinz:

What is the hardest open question/problem in AI research, in your view? Which topic should be worked on first? How can we support OpenAI in its quest?

Ilya Sutskever:

The hardest problem is to “build AI”, but it is not a good problem since it cannot be worked on directly. A hard problem on which we may see progress in the next few years is unsupervised learning — recent advances in training generative models makes it likely that we will see tangible results in this area.

While there isn’t a specific topic that should be worked on first, there are many good problems on which one could make fruitful progress: improving supervised learning algorithms, making genuine progress in unsupervised learning, and improving exploration in reinforcement learning.

Read our papers and build on our work!

Programmering:

What do you believe that AI capabilities could be in the close future?

wojzaremba:

Speech recognition and machine translation between any languages should be fully solvable. We should see many more uses of computer vision applications, like for instance: – app that recognizes number of calories in food – app that tracks all products in a supermarket at all times – burglary detection – robotics

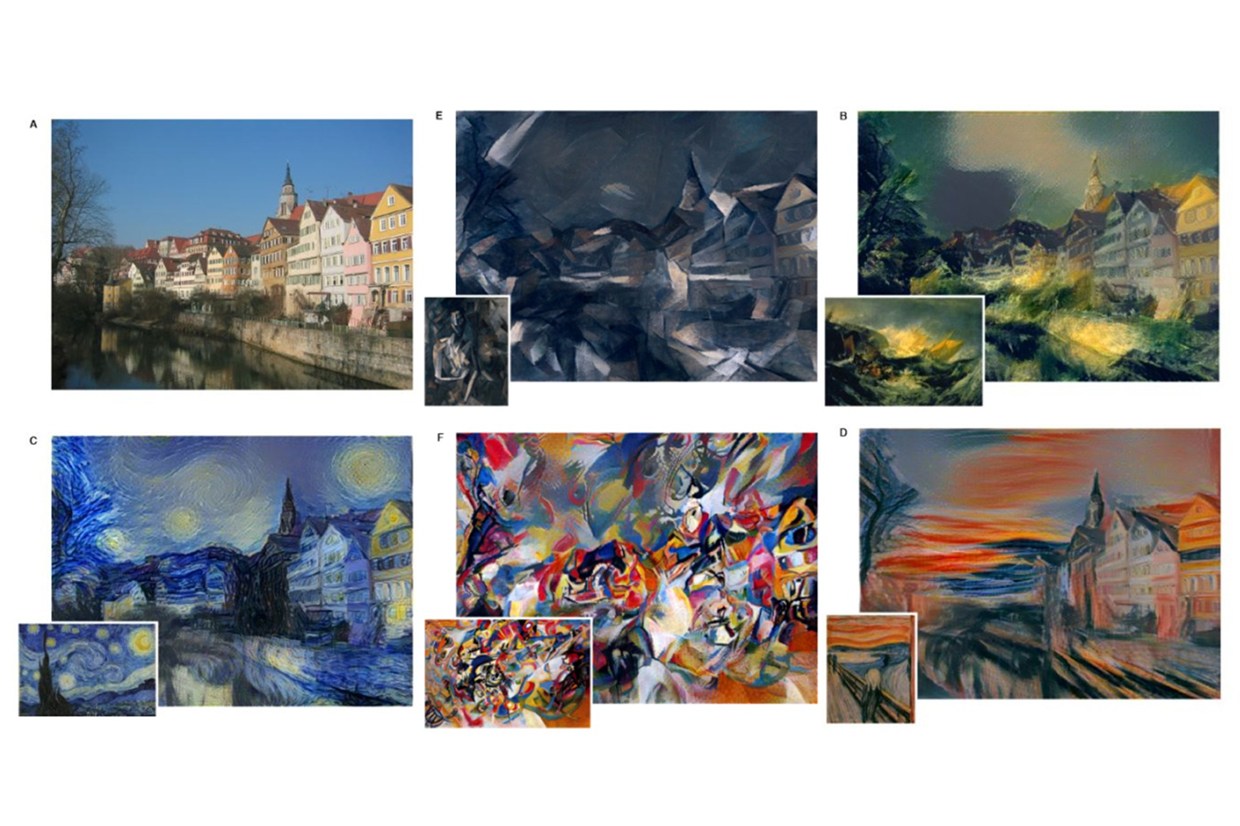

Moreover, art can be significantly transformed with current advances (http://arxiv.org/pdf/1508.06576v1.pdf). This work shows how to transform any camera picture to a painting having a given artistic style (e.g. Van Gogh painting).

It’s quite likely that the same will happen for music. For instance, take Chopin music and transform it automatically to dub-step remixed in Skrillex style. All these advances will eventually be productized.

Durk Kingma:

On the technical side, we can expect many advances in generative modeling. One example is Neural Art, but we expect near-term advances in many other modalities such as fluent text-to-speech generation.

jimrandomh:

There’s some concern that, a decade or three down the line, AI could be very dangerous, either due to how it could be used by bad actors or due to the possibility of accidents. There’s also a possibility that the strategic considerations will shake out in such a way that too much openness would be bad. Or not; it’s still early and there are many unknowns.

If signs of danger were to appear as the technology advanced, how well do you think OpenAI’s culture would be able to recognize and respond to them? What would you do if a tension developed between openness and safety?

Greg Brockman:

The one goal we consider immutable is our mission to advance digital intelligence in the way that is most likely to benefit humanity as a whole. Everything else is a tactic that helps us achieve that goal.

Today the best impact comes from being quite open: publishing, open-sourcing code, working with universities and with companies to deploy AI systems, etc.. But even today, we could imagine some cases where positive impact comes at the expense of openness: for example, where an important collaboration requires us to produce proprietary code for a company. We’ll be willing to do these, though only as very rare exceptions and to effect exceptional benefit outside of that company.

In the future, it’s very hard to predict what might result in the most benefit for everyone. But we’ll constantly change our tactics to match whatever approaches seems most promising, and be open and transparent about any changes in approach (unless doing so seems itself unsafe!). So, we’ll prioritize safety given an irreconcilable conflict.

VelveteenAmbush:

Is there any level of power and memory size of a computer that you think would be sufficient to invent artificial general intelligence pretty quickly? Like, if a genie appeared before you and you used your wish to upgrade your Titan X to whatever naive extrapolation from current trends suggests might available in the year 2050, or 2100, or 3000… could you probably slam out AGI in a few weeks? (Please don’t try to fight the hypothetical! He’s a benevolent genie; he knows what you mean and won’t ruin your wish on incompatible CUDA libraries or something.)

If yes, or generally positive to the question above, what is the closest year you could wish for and still assign it a >50% chance of success?

Andrej Karpathy:

Progress in AI is to a first approximation limited by 3 things: compute, data, and algorithms. Most people think about compute as the major bottleneck but in fact data (in a very specific processed form, not just out there on the internet somewhere) is just as critical. So if I had a 2100 version of TitanX (which I doubt will be a thing) I wouldn’t really know what to do with it right away. My networks trained on ImageNet or ATARI would converge much faster and this would increase my iteration speed so I’d produce new results faster, but otherwise I’d still be bottlenecked very heavily by a lack of more elaborate data/benchmarks/environments I can work with, as well as algorithms (i.e. what to do).

Suppose further that you gave me thousands of robots with instant communication and full perception (so I can collect a lot of very interesting data instantly), I think we still wouldn’t know what software to run on them, what objective to optimize, etc. (we might have several ideas, but nothing that would obviously do something interesting right away). So in other words we’re quite far, lacking compute, data, algorithms, and more generally I would say an entire surrounding infrastructure, software/hardware/deployment/debugging/testing ecosystem, raw number of people working on the problems, etc.